Or accurate but expensive?

How should a limited annotation budget be split between different types of judges? 👩‍⚖️🤖🦧

www.arxiv.org/abs/2506.07949

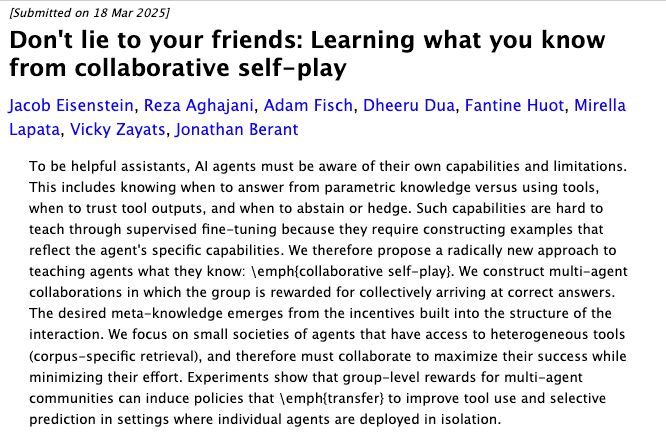

arxiv.org/abs/2503.14481

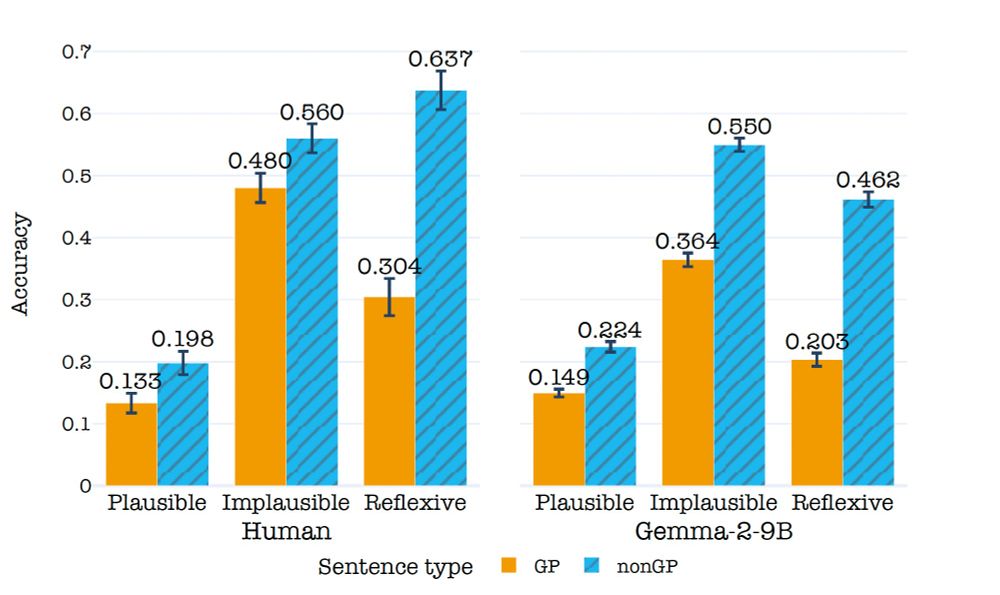

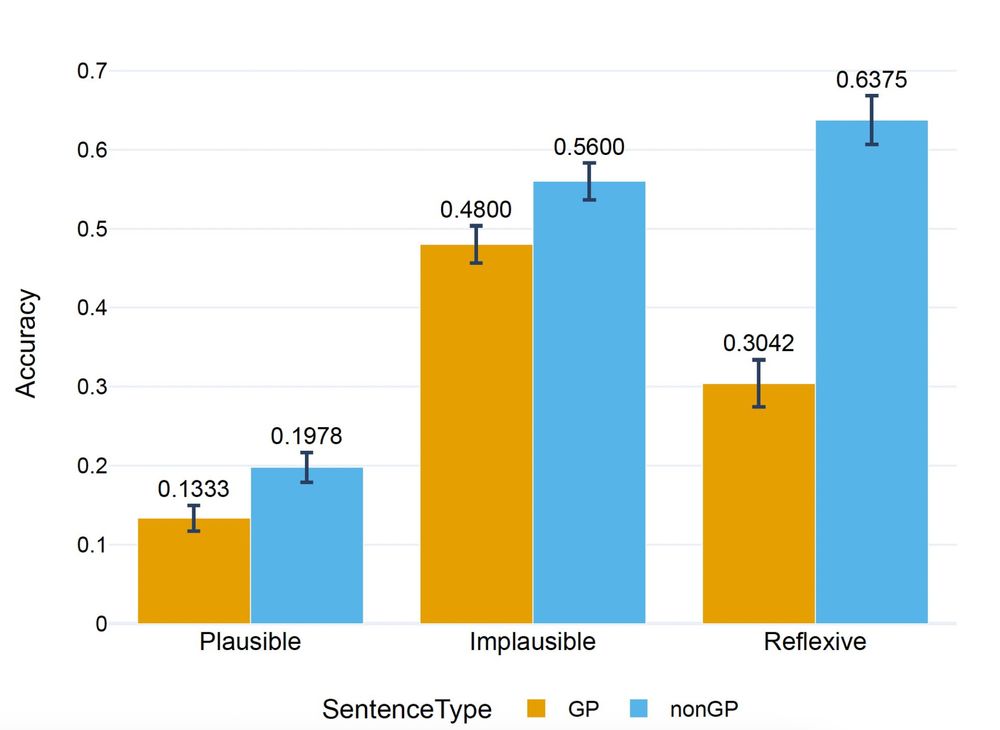

You probably had to read that sentence twice. That's because it is a garden path (GP) sentence. GP sentences are read more slowly and are often misunderstood. This raises two questions:

1. Why are these sentences harder to process?

2. How do LLMs deal with them?

We will soon release our materials, human results, LLM results and all the cool images the models produced on our sentences.

arxiv.org/abs/2502.09307

One takeaway from this paper for the psycholinguistics community: run your reading comprehension experiment on an LLM first. You might get a general idea of the human results.

(Last image I swear)

In this image: While the teacher taught the puppies looked at the board.

1. We asked the LLM to paraphrase our sentence

2. We asked text-to-image models to draw the sentences

In this image: While the horse pulled the submarine moved silently.

We found that LLMs also struggle with GP sentences and that, interestingly, the manipulations we used to test our hypotheses affected LLMs much as they affected humans.

We devise hypotheses explaining why GP sentences are harder to process and test them. Human subjects answered a reading comprehension question about a sentence they read.

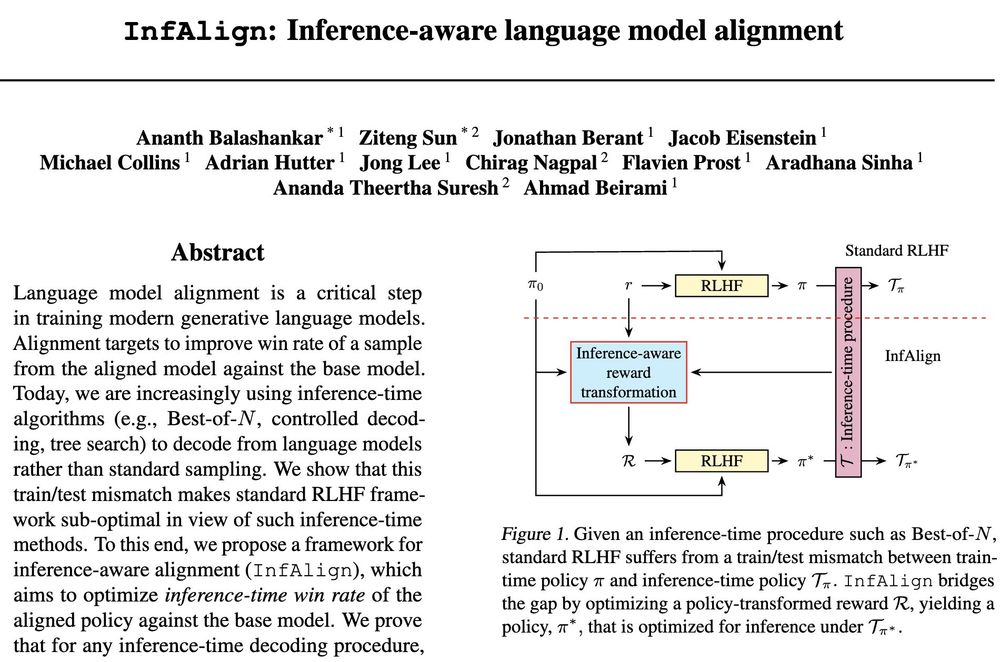

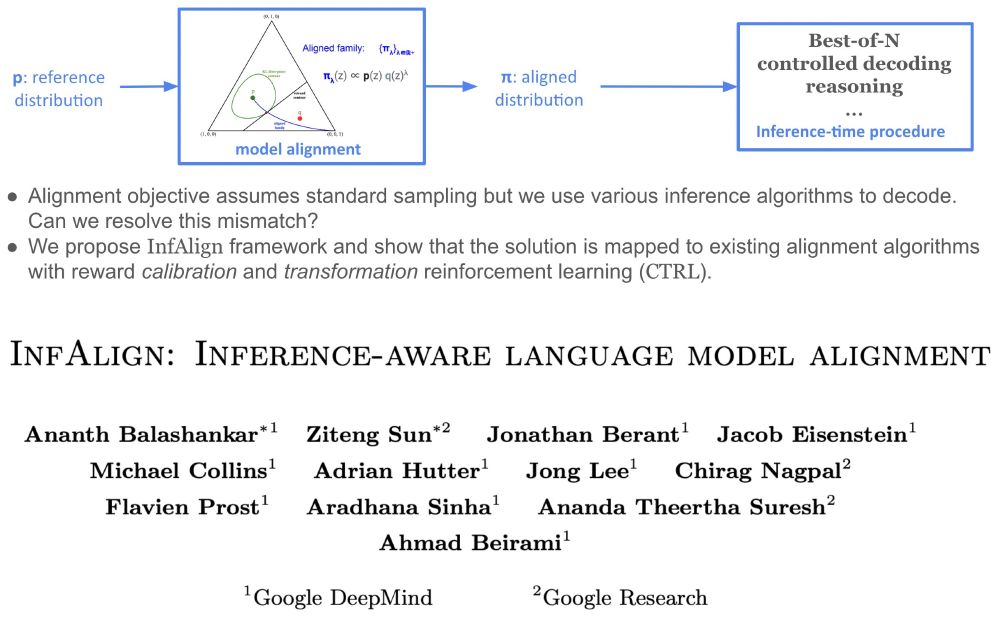

𝘊𝘢𝘯 𝘸𝘦 𝘢𝘭𝘪𝘨𝘯 𝘰𝘶𝘳 𝘮𝘰𝘥𝘦𝘭 𝘵𝘰 𝘣𝘦𝘵𝘵𝘦𝘳 𝘴𝘶𝘪𝘵 𝘢 𝘨𝘪𝘷𝘦𝘯 𝘪𝘯𝘧𝘦𝘳𝘦𝘯𝘤𝘦-𝘵𝘪𝘮𝘦 𝘱𝘳𝘰𝘤𝘦𝘥𝘶𝘳𝘦?

Check out below.

The alignment optimization objective implicitly assumes 𝘴𝘢𝘮𝘱𝘭𝘪𝘯𝘨 from the resulting aligned model. But we increasingly use different, and sometimes sophisticated, inference-time compute algorithms.

How to resolve this discrepancy?🧵
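
To make the discrepancy concrete, here is a toy sketch. It assumes best-of-N selection as the inference-time procedure (the post does not name one), and the rewards and policies are made-up numbers for illustration, not results from the paper. It shows that the policy that looks better under plain sampling is not necessarily the one that does better once best-of-N is applied.

```python
from itertools import accumulate


def expected_reward_sampling(probs, rewards):
    """Expected reward when a single response is sampled from the policy."""
    return sum(p * r for p, r in zip(probs, rewards))


def expected_reward_best_of_n(probs, rewards, n):
    """Expected reward when n responses are sampled and the best one is kept."""
    # Sort responses by reward; the CDF of the maximum of n draws is F(r) ** n.
    pairs = sorted(zip(rewards, probs))
    cdf = list(accumulate(p for _, p in pairs))
    total, prev = 0.0, 0.0
    for (reward, _), c in zip(pairs, cdf):
        cur = c ** n  # P(best reward among n draws <= reward)
        total += reward * (cur - prev)
        prev = cur
    return total


# Hypothetical rewards for three possible responses (illustrative only).
rewards = [0.0, 0.6, 1.0]
policy_a = [0.0, 1.0, 0.0]  # always gives the medium-reward response
policy_b = [0.5, 0.0, 0.5]  # half the time bad, half the time excellent

for name, policy in [("A", policy_a), ("B", policy_b)]:
    single = expected_reward_sampling(policy, rewards)
    best_of_4 = expected_reward_best_of_n(policy, rewards, n=4)
    print(f"policy {name}: sampling={single:.4f}, best-of-4={best_of_4:.4f}")
```

In this toy setup, policy A wins under plain sampling, while policy B wins under best-of-4, so an objective that only scores sampling would prefer the policy that ends up worse at inference time.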

In this TMLR paper, we take an in-depth look at #BrowserGym and #AgentLab. We also present some unexpected performance results from Claude 3.5 Sonnet.

We are thrilled to release #AgentLab, a new open-source package for developing and evaluating web agents. This builds on the new #BrowserGym package which supports 10 different benchmarks, including #WebArena.

youtu.be/2AthqCX3h8U
