6/6

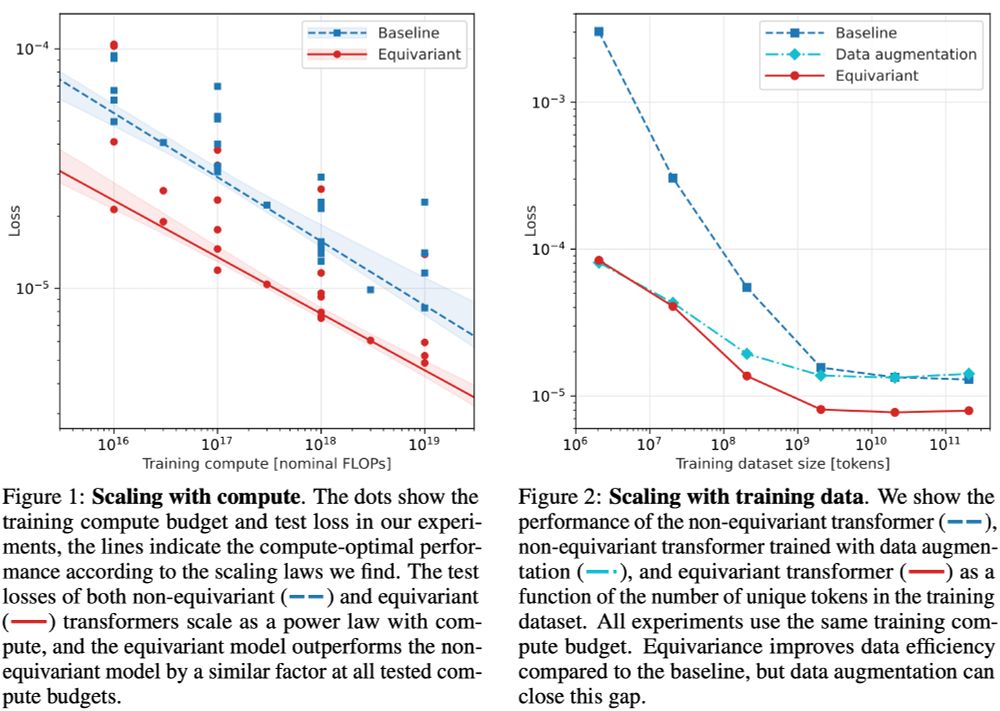

But did you expect equivariant models to also be more *compute*-efficient? Learning symmetries from data costs FLOPs!

arxiv.org/abs/2410.23179

With Sönke Behrends, @pimdh.bsky.social, and @taco-cohen.bsky.social.

5/6

We studied empirically how equivariant and non-equivariant architectures scale as a function of training data, model size, and training steps.

4/6

arxiv.org/abs/2405.14806

With @jonasspinner.bsky.social, Victor Bresó, @pimdh.bsky.social, Tilman Plehn, and Jesse Thaler.

3/6

They built L-GATr 🐊: a transformer that's equivariant to the Lorentz symmetry of special relativity. It performs remarkably well across different tasks in high-energy physics.

2/6
