Paper: arxiv.org/abs/2508.03162

Data and models: huggingface.co/facebook/ODA...

Well, I’ve got the fix for you: Neuralatex, an ML library written in pure LaTeX!

neuralatex.com

To appear in SIGBOVIK (subject to rigorous review process)

and against political censorship, autocracy, and fascism.

Science stands at a crossroads. This is a wider fight for truth, for democracy, and for the future.

We hope you join us.

www.standupforscience2025.org

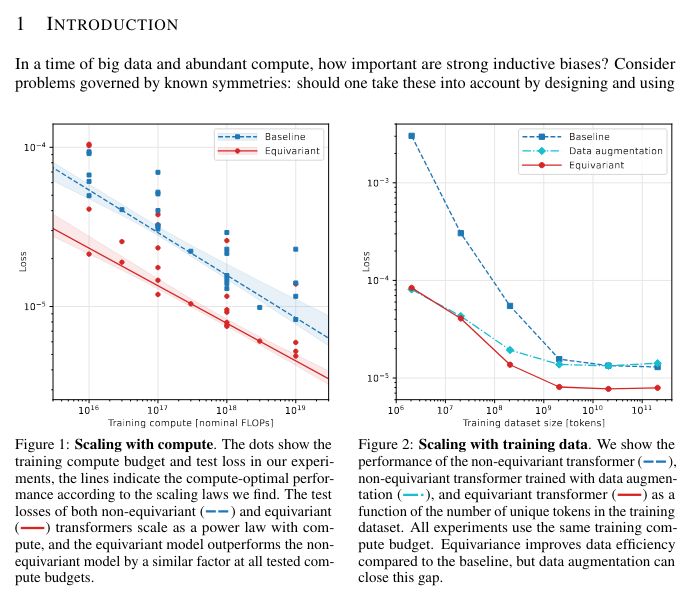

On “Does equivariance matter at scale?” at the NeurReps workshop

arxiv.org/abs/2410.23179

www.neurreps.org

I'm looking forward to meeting old and new friends, learning a thing or two, and presenting some recent work:

1/6

Our answer: They’re two sides of the same coin. We wrote a blog post to show how diffusion models and Gaussian flow matching are equivalent. That’s great: It means you can use them interchangeably.
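
To make the equivalence concrete, here is a minimal sketch, assuming a Gaussian path x_t = alpha_t * x0 + sigma_t * eps with the linear choice alpha_t = 1 - t, sigma_t = t (our illustrative assumption, not necessarily the parameterization used in the blog post). Under that assumption a diffusion-style noise prediction and a flow-matching velocity prediction are related by a closed-form change of variables, so either one can be converted into the other. The helper names below are hypothetical.

```python
# Minimal sketch (assumption): Gaussian path x_t = alpha(t) * x0 + sigma(t) * eps
# with the linear schedule alpha(t) = 1 - t, sigma(t) = t. Helper names are hypothetical.

def alpha(t: float) -> float:
    return 1.0 - t

def sigma(t: float) -> float:
    return t

def d_alpha(t: float) -> float:
    # Time derivative of alpha(t) = 1 - t.
    return -1.0

def d_sigma(t: float) -> float:
    # Time derivative of sigma(t) = t.
    return 1.0

def eps_to_velocity(x_t: float, eps_hat: float, t: float) -> float:
    """Turn a noise (eps) prediction into a flow-matching velocity.

    First recover the clean-data estimate x0_hat from x_t and eps_hat,
    then form the path velocity u = alpha'(t) * x0 + sigma'(t) * eps.
    """
    x0_hat = (x_t - sigma(t) * eps_hat) / alpha(t)
    return d_alpha(t) * x0_hat + d_sigma(t) * eps_hat

def velocity_to_eps(x_t: float, v_hat: float, t: float) -> float:
    """Invert the map by solving the 2x2 linear system
    x_t = alpha * x0 + sigma * eps,  v = alpha' * x0 + sigma' * eps  for eps.
    """
    a, s, da, ds = alpha(t), sigma(t), d_alpha(t), d_sigma(t)
    det = a * ds - s * da  # equals 1 for the linear schedule
    return (a * v_hat - da * x_t) / det

# Usage: start from known x0 and eps, build x_t, and convert back and forth.
x0, eps, t = 2.0, -0.5, 0.3
x_t = alpha(t) * x0 + sigma(t) * eps
v = eps_to_velocity(x_t, eps, t)      # flow-matching target for this path is eps - x0
eps_back = velocity_to_eps(x_t, v, t)  # recovers eps up to float rounding
```

Because the conversion is just algebra on the same Gaussian path, a sampler written for one parameterization can, in this sketch, consume the other's predictions after the conversion.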

Code: github.com/heidelberg-h...

Physics paper: arxiv.org/abs/2411.00446

CS paper: arxiv.org/abs/2405.14806

1/7

www.pnas.org/doi/10.1073/...

simulation-based-inference.org

Does equivariance matter at scale? (@johannbrehmer.bsky.social et al.) arxiv.org/abs/2410.23179

Denoising Diffusion Bridge Models (Linqi Zhou et al.) arxiv.org/pdf/2309.16948

Come work with us in Amsterdam!

careers.qualcomm.com/careers?pid=...