jatinganhotra.dev swebencharena.com

Join researchers and developers already evaluating patches → swebencharena.com

#AI #SoftwareEngineering #CodeQuality #AIEvaluation #SWEBenchArena

🚫 PR Arena: Tracks merge rates, not code quality

🚫 Yupp AI: Known models, not blind

🚫 SWE Arena: General coding, not SWE tasks

✅ SWE-Bench-Arena: Blind quality evaluation of real bug fixes

• Simplicity

• Readability

• Performance

• Maintainability

• Correctness

No bias. Just quality assessment.

What quality issues have you noticed with AI-generated code?

#AIEvaluation #SWEBenchArena #CodeQuality #AI #SoftwareEngineering

🎓 AI researchers

👩‍💻 Professional developers

📚 Academic teams

🚀 Startup engineers

Your input shapes the future of AI code evaluation standards.

• Real GitHub issues from actual projects

• Side-by-side patch comparison

• Blind evaluation (you don't know which is AI vs human)

• Multi-dimensional quality assessment

Early results are fascinating - some AI solutions are surprisingly elegant, others create hidden technical debt 📊
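A minimal sketch of how a blind, multi-dimensional comparison like the one described above could be modeled. This is not SWE-Bench-Arena's actual implementation: `BlindPair`, `make_blind_pair`, and `unblind` are hypothetical names, and the dimension list is taken from the quality criteria named elsewhere in this thread.

```python
import random
from dataclasses import dataclass, field

# Quality dimensions as listed in the thread.
DIMENSIONS = ("simplicity", "readability", "performance",
              "maintainability", "correctness")


@dataclass
class BlindPair:
    issue_id: str
    left: str    # patch shown on the left
    right: str   # patch shown on the right
    # Which author produced the left patch; kept out of repr so it is
    # not accidentally shown to the evaluator.
    left_source: str = field(repr=False)


def make_blind_pair(issue_id, human_patch, ai_patch, rng):
    """Randomly assign the two patches to sides so position leaks nothing."""
    if rng.random() < 0.5:
        return BlindPair(issue_id, human_patch, ai_patch, "human")
    return BlindPair(issue_id, ai_patch, human_patch, "ai")


def unblind(pair, votes):
    """Map per-dimension 'left'/'right'/'tie' votes to 'human'/'ai'/'tie'."""
    other = "ai" if pair.left_source == "human" else "human"
    mapping = {"left": pair.left_source, "right": other, "tie": "tie"}
    return {dim: mapping[votes[dim]] for dim in DIMENSIONS}
```

The evaluator only ever sees `left` and `right`; the side assignment is resolved after the votes are recorded.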

Instead of just "does it work?", we ask:

✅ Is it maintainable?

✅ Will teams understand it?

✅ Does it follow best practices?

✅ Is it unnecessarily complex?

🔗 jatinganhotra.dev/blog/swe-age...

📊 Includes full leaderboard analysis, complexity breakdown, and takeaways.

RT if you're building SWE agents — or trying to understand their real limits.

It's a wake-up call.

🧠 Current AI systems cannot effectively combine visual + structural code understanding.

And that's a serious problem for real-world software workflows.

Multimodal tasks often require multi-file edits and focus on JavaScript-based, user-facing applications rather than Python backends.

The combination of visual reasoning + frontend complexity is devastating.

📸 90.6% of instances in SWE-bench Multimodal contain visual content.

When images are present, solve rates drop from ~100% to ~25% across all top-performing agents.

But when the same models are tested on SWE-bench Multimodal, scores fall to ~30%.

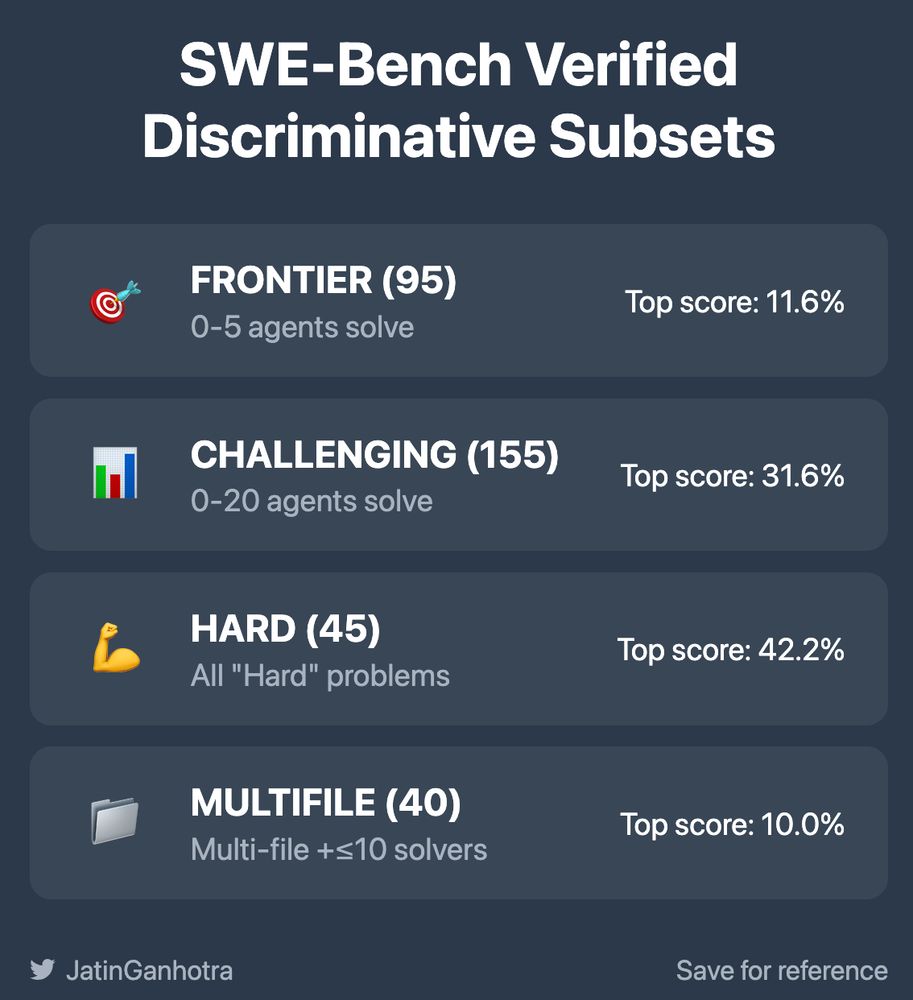

Dataset available now:

🤗 huggingface.co/datasets/jatinganhotra/SWE-bench_Verified-discriminative

Stop optimizing for saturated benchmarks. Start measuring real progress.

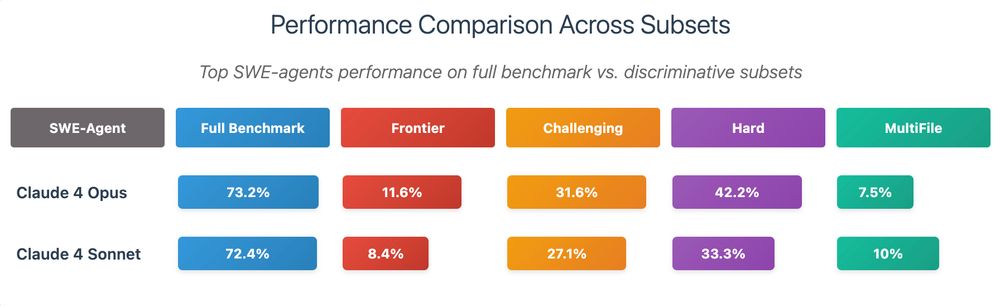

Claude 4 Opus on full benchmark: 73.2% ✅

Claude 4 Opus on Frontier subset: 11.6% 😬

This isn't just harder - it's revealing what agents ACTUALLY can't do

Each subset targets different evaluation needs - from maximum sensitivity (Frontier) to real-world complexity (MultiFile)

Performance drops from 73% to as low as 10%!
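The drop is just the same resolve-rate arithmetic applied to a smaller, harder set of instances. A rough sketch, assuming an agent's results are available as a set of resolved instance IDs (the `resolve_rate` helper and the toy IDs below are illustrative, not part of the dataset):

```python
def resolve_rate(resolved, instances):
    """Fraction of `instances` that appear in the agent's `resolved` set."""
    instances = set(instances)
    if not instances:
        raise ValueError("instances must not be empty")
    return len(set(resolved) & instances) / len(instances)


# Toy data only: four benchmark instances, two of them in a "hard" subset.
full_benchmark = {"t1", "t2", "t3", "t4"}
hard_subset = {"t3", "t4"}
agent_resolved = {"t1", "t2", "t3"}

full_score = resolve_rate(agent_resolved, full_benchmark)  # 3 of 4 resolved
hard_score = resolve_rate(agent_resolved, hard_subset)     # 1 of 2 resolved
```

The agent is unchanged between the two numbers; only the denominator stops being padded by instances every agent solves.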

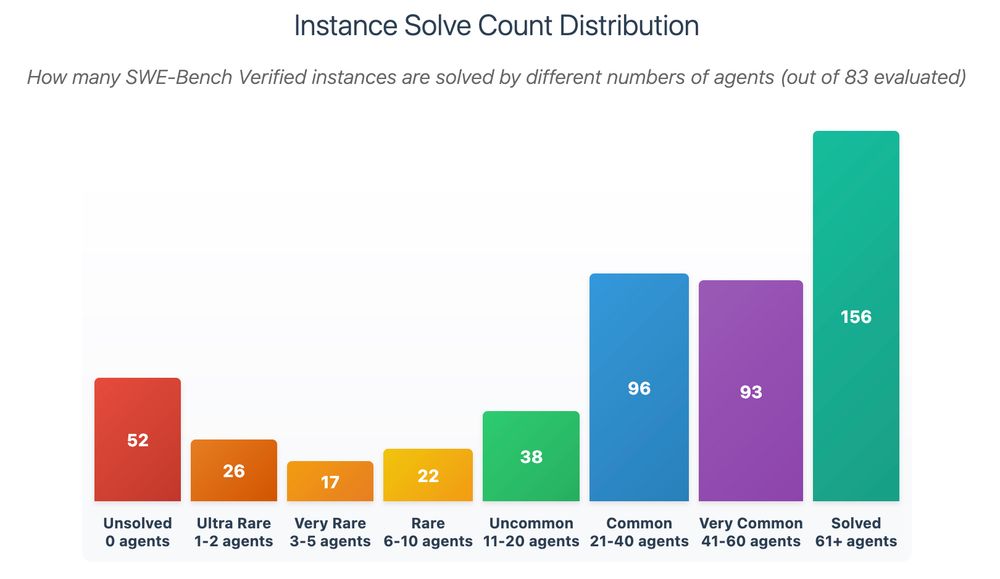

The distribution is shocking:

- 52 problems: ZERO agents can solve

- 26 problems: Only 1-2 agents succeed

- 156 problems: 61+ agents solve easily
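A distribution like this can be computed by counting, per problem, how many agents resolved it. The sketch below assumes per-agent results are available as sets of resolved instance IDs; that input format and the function names are assumptions for illustration, not the actual analysis code. The default thresholds mirror the buckets above (0 agents, 1-2 agents, 61+ agents).

```python
from collections import Counter


def solve_counts(results):
    """Count how many agents resolved each instance.

    `results` maps agent name -> set of resolved instance IDs
    (a hypothetical input format).
    """
    counts = Counter()
    for resolved in results.values():
        counts.update(resolved)
    return counts


def bucket_instances(all_instances, results, rare_max=2, easy_min=61):
    """Group instances by how many agents solve them."""
    counts = solve_counts(results)
    buckets = {"unsolved": [], "rare": [], "middle": [], "easy": []}
    for instance_id in all_instances:
        n = counts.get(instance_id, 0)
        if n == 0:
            buckets["unsolved"].append(instance_id)
        elif n <= rare_max:
            buckets["rare"].append(instance_id)
        elif n >= easy_min:
            buckets["easy"].append(instance_id)
        else:
            buckets["middle"].append(instance_id)
    return buckets


# Toy example with three agents, so the thresholds are scaled down.
toy_results = {
    "agent_a": {"t1", "t2"},
    "agent_b": {"t1"},
    "agent_c": {"t1", "t3"},
}
toy_buckets = bucket_instances(["t1", "t2", "t3", "t4"], toy_results,
                               rare_max=1, easy_min=3)
```

Problems in the "easy" bucket add the same points to every agent's score, which is why they cannot separate one agent from another.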

When everyone gets the same questions right, you can't tell who's actually better @anthropic.com

It's like ranking students when everyone scores 95%+ on the easy questions
