Helen Toner

@hlntnr.bsky.social

AI, national security, China. Part of the founding team at @csetgeorgetown.bsky.social (opinions my own). Author of Rising Tide on substack: helentoner.substack.com

Read Miranda's full piece here:

helentoner.substack.com/p/personaliz...

And the research brief she wrote that inspired this post:

cdt.org/insights/its...

Personalized AI is rerunning the worst part of social media's playbook

The incentives, risks, and complications of AI that knows you

helentoner.substack.com

July 22, 2025 at 12:49 AM

I honestly don't know how big the potential harms of personalization are—I think it's possible we end up coping fine. But it's crazy to me how little mindshare this seems to be getting among people who think about unintended systemic effects of AI for a living.

July 22, 2025 at 12:49 AM

Thinking about this, I keep coming back to two stories—

1) how FB allegedly trained models to identify moments when users felt worthless, then sold that data to advertisers

2) how we're already seeing chatbots addicting kids & adults and warping their sense of what's real

July 22, 2025 at 12:49 AM

Full video here (21 min):

www.youtube.com/watch?v=dzwi...

Helen Toner - Unresolved Debates on the Future of AI [Tech Innovations AI Policy]

YouTube video by FAR.AI

www.youtube.com

June 30, 2025 at 8:40 PM

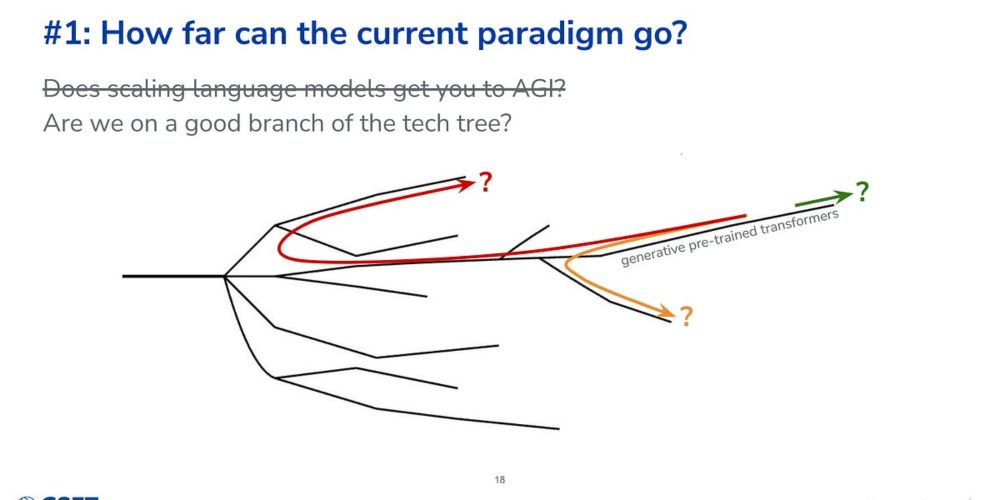

The 3 disagreements are:

1) How far can the current paradigm go?

2) How much can AI improve AI?

3) Will future AI systems still basically be tools, or will they be something else?

Thanks to @farairesearch for the invitation to do this talk! Transcript here:

helentoner.substack.com/p/unresolved...

Unresolved debates about the future of AI

How far the current paradigm can go, AI improving AI, and whether thinking of AI as a tool will keep making sense

helentoner.substack.com

June 30, 2025 at 8:40 PM

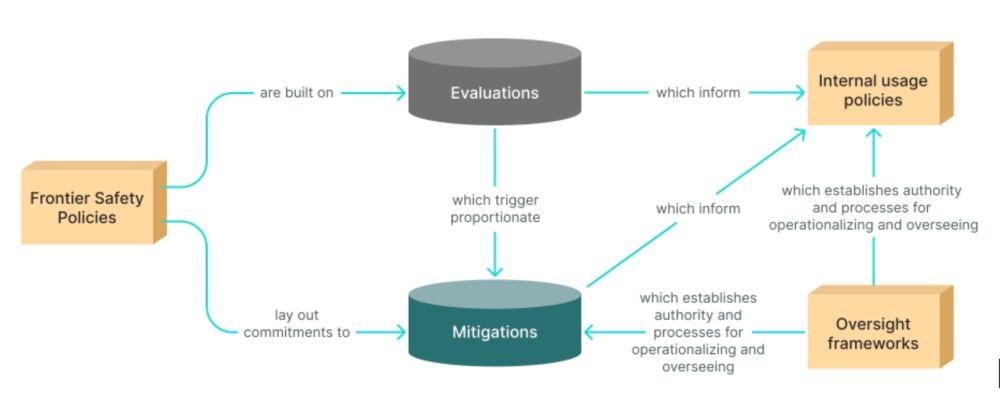

Great report from Apollo Research on the underlying issues motivating this call for research ideas:

www.apolloresearch.ai/research/ai-...

AI Behind Closed Doors: a Primer on The Governance of Internal Deployment — Apollo Research

In the race toward increasingly capable artificial intelligence (AI) systems, much attention has been focused on how these systems interact with the public. However, a critical blind spot exists in ou...

www.apolloresearch.ai

May 19, 2025 at 4:59 PM

More on our Foundational Research Grants program, including info on past grants we've funded:

cset.georgetown.edu/foundational...

Foundational Research Grants | Center for Security and Emerging Technology

Foundational Research Grants (FRG) supports the exploration of foundational technical topics that relate to the potential national security implications of AI over the long term. In contrast to most C...

cset.georgetown.edu

May 19, 2025 at 4:59 PM

Related reading:

Virginia Postrel's book (recommended!): amazon.com/FUTURE-ITS-E...

And a recent post from Brendan McCord: cosmosinstitute.substack.com/p/the-philos...

What else?

The Future and Its Enemies: The Growing Conflict Over Creativity, Enterprise, and Progress

amazon.com

May 12, 2025 at 6:21 PM

...But too many critics of those stasist ideas try to shove the underlying problems under the rug. With this post, I'm trying to help us hold both things at once.

Read the full post on AI Frontiers: www.ai-frontiers.org/articles/wer...

Or my substack: helentoner.substack.com/p/dynamism-v...

We’re Arguing About AI Safety Wrong | AI Frontiers

Helen Toner, May 12, 2025 — Dynamism vs. stasis is a clearer lens for criticizing controversial AI safety prescriptions.

www.ai-frontiers.org

May 12, 2025 at 6:21 PM

From The Future and Its Enemies by Virginia Postrel:

Dynamism: "a world of constant creation, discovery, and competition"

Stasis: "a regulated, engineered world... [that values] stability and control"

Too many AI safety policy ideas would push us toward stasis. But...

May 12, 2025 at 6:21 PM

Link to full post (subscribe!): helentoner.substack.com/p/2-big-ques...

2 Big Questions for AI Progress in 2025-2026

On how good AI might—or might not—get at tasks beyond math & coding

helentoner.substack.com

April 23, 2025 at 3:46 PM

Find them by searching "Stop the World" and "Cognitive Revolution" in your podcast app, or links here:

www.aspi.org.au/news/stop-wo...

www.cognitiverevolution.ai/helen-toner-...

Stop the World: The road to artificial general intelligence, with Helen Toner

www.aspi.org.au

April 22, 2025 at 1:27 AM

Cognitive Revolution (🇺🇸): More insidery chat with @nathanlabenz.bsky.social getting into why nonproliferation is the wrong way to manage AI misuse, AI in military decision support systems, and a bunch of other stuff.

Clip on my beef with talk about the "offense-defense" balance in AI:

April 22, 2025 at 1:27 AM

Stop the World (🇦🇺): Fun, wide-ranging conversation with David Wroe of @aspi-org.bsky.social on where we're at with AI, reasoning models, DeepSeek, scaling laws, etc etc.

Excerpt on whether we can "just" keep scaling language models:

April 22, 2025 at 1:27 AM

cc @binarybits.bsky.social re hardening the physical world, @vitalik.ca re d/acc, @howard.fm re power concentration... plus many others I'm forgetting whose takes helped inspire this post. I hope this is a helpful framing for these tough tradeoffs.

April 5, 2025 at 6:09 PM

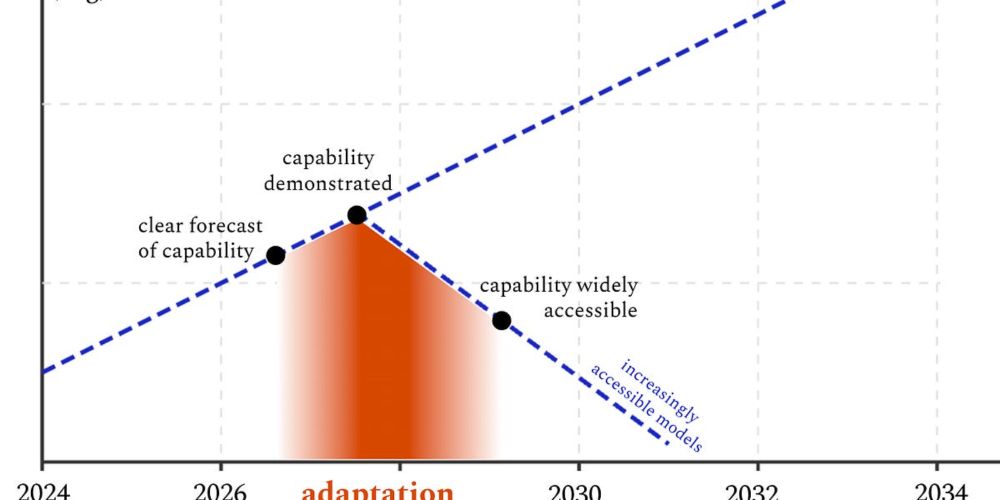

I don't think this approach will obviously be enough, I don't think it's equivalent to "just open source everything YOLO," and I don't think any of my argument applies to tracking/managing the frontier or loss of control risks.

More in the full piece: helentoner.substack.com/p/nonprolife...

Nonproliferation is the wrong approach to AI misuse

Making the most of “adaptation buffers” is a more realistic and less authoritarian strategy

helentoner.substack.com

April 5, 2025 at 6:09 PM
