Delighted to feature @mbogen.bsky.social on Rising Tide today, on what's being built and why we should care:

Just posted a transcript from a talk where I go into this + a couple other key open qs/disagreements (not p(doom)!).

🔗 below, preview here:

Internal deployments of frontier AI models are an underexplored source of risk. My program at @csetgeorgetown.bsky.social just opened a call for research ideas—EOIs due Jun 30.

Full details ➡️ cset.georgetown.edu/wp-content/u...

Summary ⬇️

In short: it's about dynamism vs stasis.

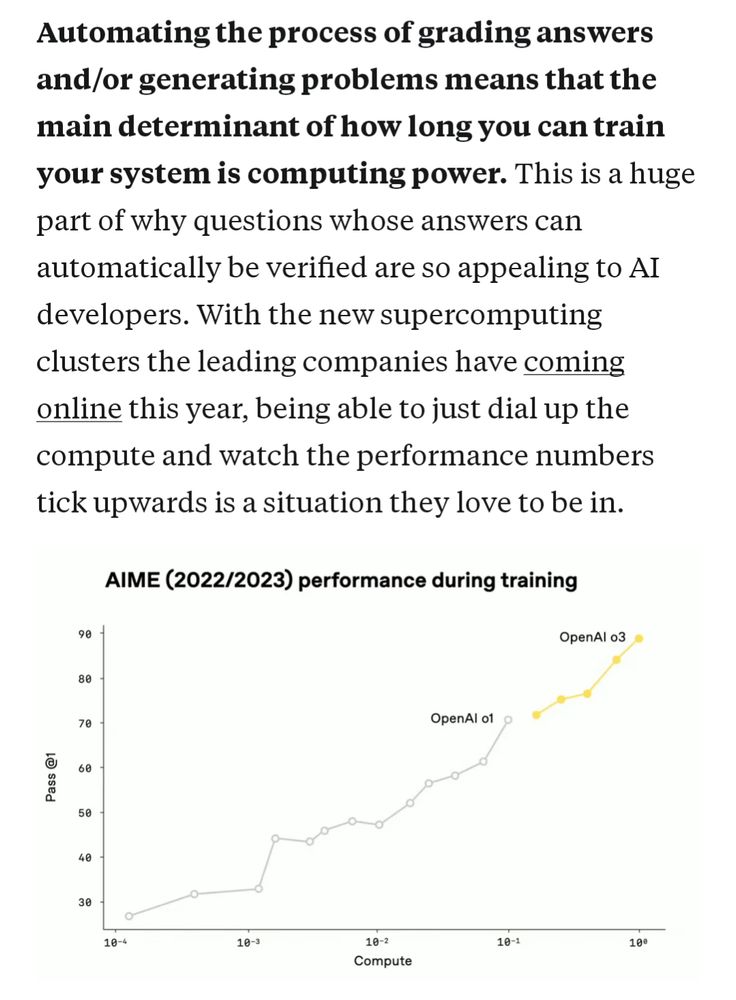

How well these go will determine if AI just gets super good at math & coding vs. mastering many domains. Post excerpts:

Clip on my beef with talk about the "offense-defense" balance in AI:

Excerpt on whether we can "just" keep scaling language models:

You can also pick your accent (I'm from Australia and sound that way when I talk to other Aussies, but mostly in professional settings I sound ~American)

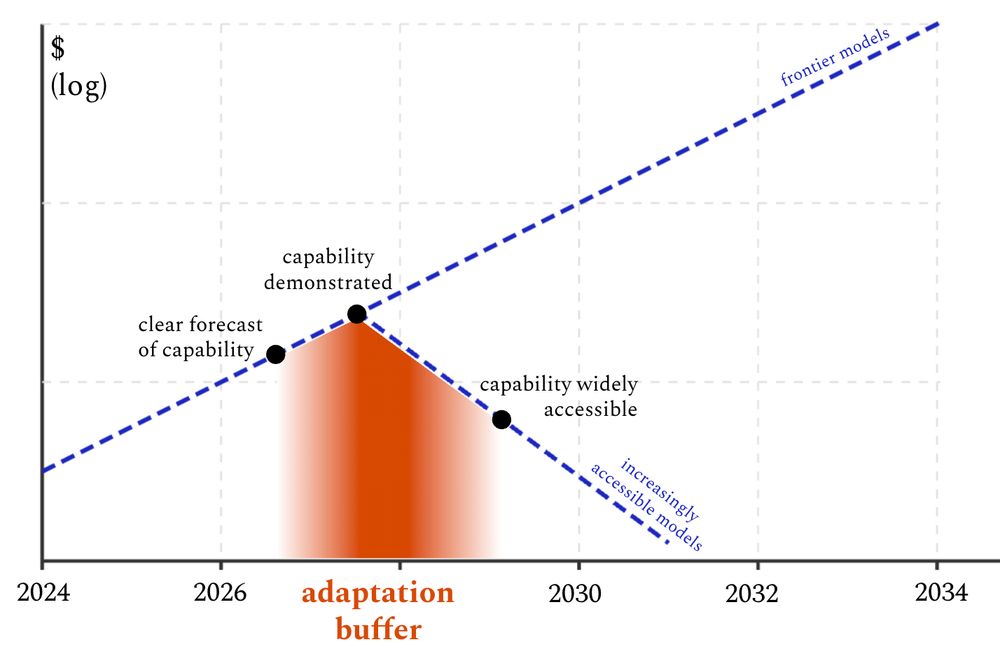

During that time, we need massive efforts to build as much societal resilience as we can.

Rising Tide launch week post 3/3 is on why I disagree 🧵

helentoner.substack.com/p/nonprolife...

Talked with @axios.com's Alison Snyder at SXSW about the many in-between worlds, and all the things we can be doing now to help things go better in those worlds.

For anyone else who'd find it helpful, here's a side-by-side comparison with v1:

draftable.com/compare/NNBy...

One snap take: the rewritten taxonomy of systemic risks (p29) is much stronger.

www.nytimes.com/2024/12/29/b...

sohl-dickstein.github.io/2022/11/06/s...

www.techpolicy.press/the-end-of-t...
