Eric Brachmann

@ericbrachmann.bsky.social

Niantic Spatial, Research.

Throws machine learning at traditional computer vision pipelines to see what sticks. Differentiates the non-differentiable.

📍Europe 🔗 http://ebrach.github.io

‼️ Major update to the #ACEZero code base. We added the capabilities of the #ICCV2025 paper on Scene Coordinate Reconstruction Priors.

It gives you, e.g., an RGB-D version of ACE0. In the snippet below, I jointly reconstruct multiple RGB-D scans of ScanNet.

github.com/nianticlabs/...

November 10, 2025 at 9:49 AM

Looking forward to a busy #ICCV2025.

I will give three (very different) talks at workshops and tutorials; see info below.

We also present two papers, ACE-G and SCR Priors.

And it's the 10th (!) anniversary of the R6D workshop, which we co-organize.

October 17, 2025 at 8:11 AM

🌟 Scene Coordinate Reconstruction Priors at #ICCV2025 🌟

Can we learn what a successful reconstruction looks like, and use this knowledge when reconstructing new scenes?

Explainer: youtu.be/RkV6U5xYc20

Project Page: nianticspatial.github.io/scr-priors

Inspiring work by Wenjing Bian et al.!

October 15, 2025 at 7:36 AM

🔥 ACE-G, the next evolutionary step of ACE at #ICCV2025 🔥

We disentangle coordinate regression and latent map representation, which lets us pre-train the regressor to generalize from mapping data to difficult query images.

Page: nianticspatial.github.io/ace-g/

Stellar work by Leonard Bruns et al.!

October 14, 2025 at 8:24 AM

*whisper* Only one way to know...

October 2, 2025 at 6:45 PM

FastForward was trained on challenging frame sets, so it can solve difficult relocalization cases, such as the opposing shots in the video below. We only have mapping images of the front of the sign, yet FF still relocalizes well behind it.

October 2, 2025 at 8:48 AM

FastForward uses 20 retrieved images by default. But there is no need to compute attention over all 21 images (the query plus 20 mapping images). To keep the model lean, we sub-sample the feature tokens of the mapping images.

Each token is combined with its ray embedding. Hence, FastForward operates on samples of visual tokens in scene space.
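
A minimal sketch of how sub-sampled mapping tokens could become samples in scene space, assuming a pinhole camera with intrinsics `K` and camera-to-world poses (`R`, `t`). The function names, the token counts, the random sub-sampling, and the use of the raw ray origin plus unit direction as the "ray embedding" are all hypothetical; FastForward's actual sampling and embedding may differ.

```python
import numpy as np

def pixel_rays_world(uv, K, R, t):
    """World-space ray origins and unit directions for pixel coordinates uv of shape (N, 2).

    Assumes a pinhole camera with intrinsics K and camera-to-world rotation R, translation t.
    """
    ones = np.ones((uv.shape[0], 1))
    pix_h = np.hstack([uv, ones])                   # homogeneous pixel coordinates (N, 3)
    dirs_cam = pix_h @ np.linalg.inv(K).T           # back-project: K^-1 @ [u, v, 1]
    dirs_world = dirs_cam @ R.T                     # rotate directions into the world frame
    dirs_world /= np.linalg.norm(dirs_world, axis=1, keepdims=True)
    origins = np.broadcast_to(t, dirs_world.shape)  # every ray starts at the camera centre
    return origins, dirs_world

def sample_scene_tokens(features, uv, K, R, t, n_keep, rng):
    """Keep n_keep random tokens of one mapping image and attach a (hypothetical) ray embedding."""
    idx = rng.choice(features.shape[0], size=n_keep, replace=False)
    origins, dirs = pixel_rays_world(uv[idx], K, R, t)
    # Hypothetical ray embedding: origin and direction concatenated to the visual feature.
    return np.hstack([features[idx], origins, dirs])

# Toy example: 20 retrieved mapping images, 256 tokens each (16x16 patch grid), 64-dim features.
rng = np.random.default_rng(0)
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
gx, gy = np.meshgrid(np.arange(16) * 40 + 20, np.arange(16) * 30 + 15)
uv = np.stack([gx.ravel(), gy.ravel()], axis=1).astype(float)  # patch centres (256, 2)

tokens = []
for _ in range(20):
    feats = rng.standard_normal((256, 64))
    R, t = np.eye(3), rng.standard_normal(3)  # stand-in poses, e.g. from SLAM
    tokens.append(sample_scene_tokens(feats, uv, K, R, t, n_keep=32, rng=rng))
scene_tokens = np.concatenate(tokens)  # 20 * 32 tokens, each 64 + 3 + 3 values
```

Keeping 32 of 256 tokens per image cuts the mapping side from 5120 to 640 tokens in this toy setting, which is the kind of saving that keeps attention cheap.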

October 2, 2025 at 8:48 AM

Building visual maps via reconstruction (SfM, SCR) can take time. Instead:

⏩ Get poses for your mapping images, e.g. via SLAM in real time.

⏩ Build a retrieval index in seconds.

⏩ FastForward predicts the relative pose between a query image and all retrieved images in one forward pass.
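
The retrieval step can be sketched as a brute-force cosine-similarity index over global image descriptors. This is a hypothetical stand-in: the `RetrievalIndex` class, the descriptor size and the random data are assumptions, any global descriptor could be plugged in, and the network step itself is not modelled. The `compose` helper only shows the standard geometry for turning a predicted relative pose into an absolute one, assuming 4x4 camera-to-world matrices; how FastForward aggregates its per-image predictions is not covered here.

```python
import numpy as np

class RetrievalIndex:
    """Brute-force index over L2-normalised global descriptors of mapping images (hypothetical)."""

    def __init__(self, descriptors, poses):
        d = np.asarray(descriptors, dtype=np.float64)
        self.descriptors = d / np.linalg.norm(d, axis=1, keepdims=True)
        self.poses = list(poses)  # 4x4 camera-to-world poses, e.g. from SLAM

    def query(self, descriptor, k=20):
        """Return indices and poses of the k mapping images most similar to the query."""
        q = np.asarray(descriptor, dtype=np.float64)
        q = q / np.linalg.norm(q)
        scores = self.descriptors @ q               # cosine similarity to every mapping image
        k = min(k, len(scores))
        top = np.argpartition(-scores, k - 1)[:k]   # the k best, in arbitrary order
        top = top[np.argsort(-scores[top])]         # sort best first
        return top, [self.poses[i] for i in top]

def compose(T_world_from_map, T_map_from_query):
    """Absolute query pose from a mapping-image pose and a relative pose (4x4 matrices)."""
    return T_world_from_map @ T_map_from_query

# Toy usage with random descriptors standing in for a real retrieval model.
rng = np.random.default_rng(0)
db = rng.standard_normal((100, 128))
index = RetrievalIndex(db, [np.eye(4) for _ in range(100)])
ids, poses = index.query(db[7] + 0.01 * rng.standard_normal(128), k=20)
```

Building such an index is a single normalisation pass over the descriptors, which is why this step takes seconds rather than the time a full reconstruction needs.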

October 2, 2025 at 8:48 AM

⏩ FastForward ⏩ A new model for efficient visual relocalization. Accurate camera poses without building structured 3D maps.

nianticspatial.github.io/fastforward/

Work by @axelbarroso.bsky.social, Tommaso Cavallari and Victor Adrian Prisacariu.

October 2, 2025 at 8:48 AM

Small but important quality-of-life update by @rerun.io: customizable frustum colors. Finally, we can distinguish estimates and ground truth in the same 3D view ;)

September 29, 2025 at 9:00 AM

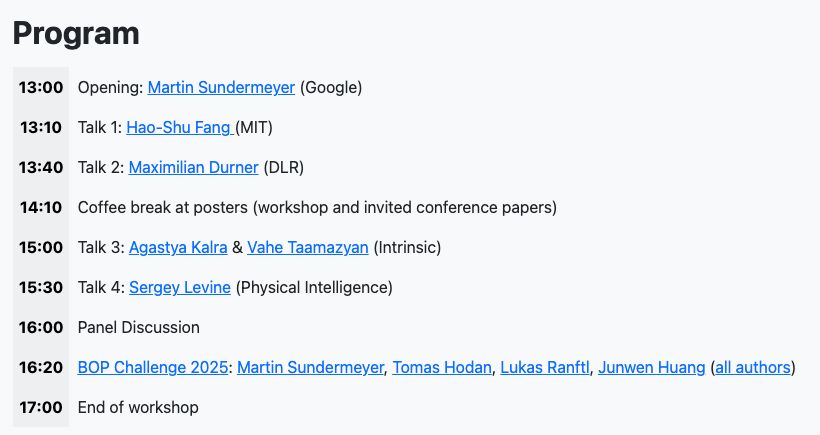

Recovering 6D Object Pose - Looking forward to the R6D workshop at #ICCV2025 in Hawaii! (not looking forward to the jet lag)

cmp.felk.cvut.cz/sixd/worksho...

The accompanying challenge is still open for submissions until Oct 1st, and the workshop still accepts non-proceedings paper submissions!

September 26, 2025 at 1:44 PM

The television:

September 26, 2025 at 1:15 PM

Did you know in Germany, there are signs in every city indicating the way to the closest H100, in case of an upcoming deadline?

September 3, 2025 at 7:27 AM

How about a fun game of career path domino instead?

July 1, 2025 at 6:58 AM

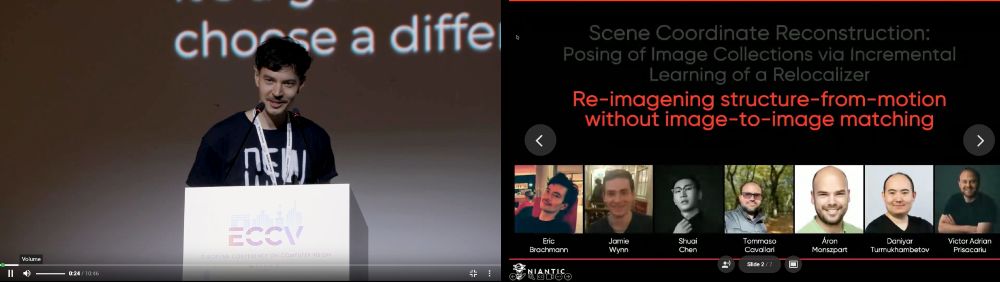

Thank you, but I think your talk was awesome :) And here for all to enjoy again: eccv.ecva.net/virtual/2024...

June 23, 2025 at 3:28 PM

I was not aware that the #ECCV2024 oral recordings are publicly available... So here is the #ACEZero talk: eccv.ecva.net/virtual/2024...

June 23, 2025 at 2:37 PM

This is from the #ICCV2025 workshop on Camera Calibration and Pose Estimation (CALIPOSE), organized by @sattlertorsten.bsky.social et al.

Now I have 5 months to get used to the idea of giving a talk after Richard Hartley 🙈

May 27, 2025 at 11:00 AM

The BOP challenge 2025 is already running: bop.felk.cvut.cz/challenges/b...

Your chance to shine on the task mentioned above. But beware that BOP is one step ahead already, by adding another set of nightmarish datasets. 👻

April 8, 2025 at 8:01 AM

The BOP report of 2024 is out: arxiv.org/abs/2504.02812

BOP is a benchmark for 6D object pose estimation, with a new challenge organised yearly.

Community: Model-based 6D localisation? Pff, easy-peasy. 💪

BOP: Ok. Model-free 6D detection on the datasets below 👇

Community: 🙈 (0 entries submitted)

April 8, 2025 at 7:55 AM

I do not think so. You get the same moment vector for different choices of the reference point along the line.

See e.g. faculty.sites.iastate.edu/jia/files/in...
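
To make the argument concrete, assuming the moment vector in question is the Plücker moment m = p × d of a line through a point p with direction d: moving the reference point along the line to p + s·d gives (p + s·d) × d = p × d + s·(d × d) = p × d, because d × d = 0. The short numerical check below is a sketch; the `plucker` helper is hypothetical.

```python
import numpy as np

def plucker(point, direction):
    """Plücker coordinates (d, m) of the line through `point` along `direction`.

    Uses the common convention m = p x d with a unit direction d.
    """
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    m = np.cross(np.asarray(point, dtype=float), d)
    return d, m

p = np.array([1.0, 2.0, 3.0])
direction = np.array([0.0, 1.0, 1.0])
_, m0 = plucker(p, direction)

for s in (-5.0, 0.5, 10.0):
    # Shift the reference point along the line: p' = p + s * direction.
    _, m = plucker(p + s * direction, direction)
    assert np.allclose(m, m0)  # the s * (d x d) term vanishes
```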

April 4, 2025 at 1:48 PM

Back to healthier habits, after the deadline. </promotion>

March 10, 2025 at 9:16 AM
