Eric Brachmann

@ericbrachmann.bsky.social

Niantic Spatial, Research.

Throws machine learning at traditional computer vision pipelines to see what sticks. Differentiates the non-differentiable.

📍Europe 🔗 http://ebrach.github.io

Pinned

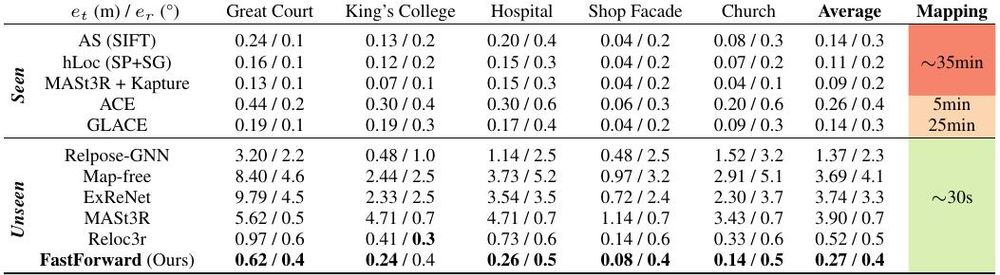

⏩ FastForward ⏩ A new model for efficient visual relocalization. Accurate camera poses without building structured 3D maps.

nianticspatial.github.io/fastforward/

Work by @axelbarroso.bsky.social, Tommaso Cavallari and Victor Adrian Prisacariu.

‼️ Major update to the #ACEZero code base. We added the capabilities of the #ICCV2025 paper on Scene Coordinate Reconstruction Priors.

Gives you, e.g., an RGB-D version of ACE0. In the snippet below, I jointly reconstruct multiple RGB-D scans of ScanNet.

github.com/nianticlabs/...

November 10, 2025 at 9:49 AM

Code is out. We provide the pre-trained ACE-G model, as well as code to replicate the paper results, and to evaluate ACE-G on new scenes. #ICCV2025

github.com/nianticspati...

November 5, 2025 at 2:39 PM

Hi everyone. Can we please stop naming methods in leetspeak? It slows down my writing - it is a skill I happily abandoned 20 years ago.

November 4, 2025 at 11:19 AM

Reposted by Eric Brachmann

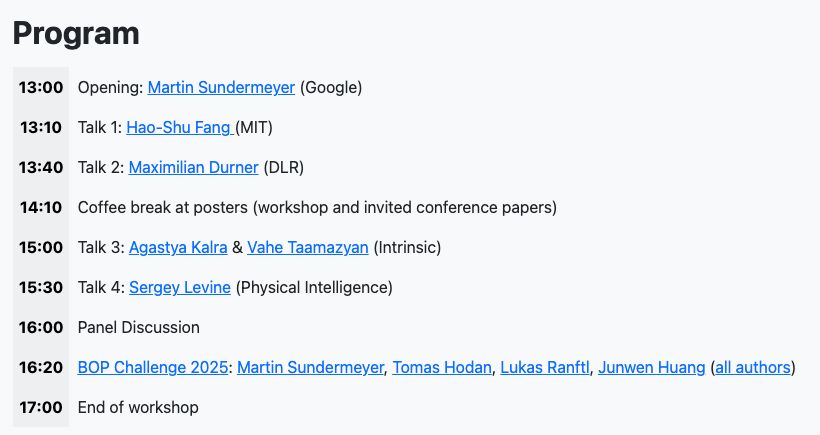

10th (!!) R6D workshop @ ICCV 2025:

cmp.felk.cvut.cz/sixd/worksho...

🤖 Object pose estimation for industrial robotics

📢 Speakers:

- Sergey Levine (UC Berkeley)

- Haoshu Fang (MIT)

- Maximilian Durner (DLR)

- Agastya Kalra & Vahe Taamazyan (Intrinsic)

📊 BOP 2025 with new BOP-Industrial datasets

October 17, 2025 at 4:01 PM

After next week, I REALLY need a break from PowerPoint.

Looking forward to a busy #ICCV2025.

I will give three (very different) talks at workshops and tutorials, see info below.

We also present two papers, ACE-G and SCR Priors.

And it's the 10th (!) anniversary of the R6D workshop, which we co-organize.

October 17, 2025 at 8:13 AM

Looking forward to a busy #ICCV2025.

I will give three (very different) talks at workshops and tutorials, see info below.

We also present two papers, ACE-G and SCR Priors.

And it's the 10th (!) anniversary of the R6D workshop, which we co-organize.

October 17, 2025 at 8:11 AM

Reposted by Eric Brachmann

Bruns et al., "ACE-G: Improving Generalization of Scene Coordinate Regression Through Query Pre-Training"

Train a scene coordinate regressor with "map codes" (i.e., trainable inputs) so that you can train one generalizable regressor. Then, find these "map codes" to localize.

October 16, 2025 at 7:37 PM

Reposted by Eric Brachmann

Interested in doing a PhD in machine learning at the University of Edinburgh starting Sept 2026?

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

October 16, 2025 at 9:15 AM

🌟 Scene Coordinate Reconstruction Priors at #ICCV2025 🌟

Can we learn what a successful reconstruction looks like, and use this knowledge when reconstructing new scenes?

Explainer: youtu.be/RkV6U5xYc20

Project Page: nianticspatial.github.io/scr-priors

Inspiring work by Wenjing Bian et al.!

October 15, 2025 at 7:36 AM

Reposted by Eric Brachmann

The #ICCV2025 main conference open access proceedings are up:

openaccess.thecvf.com/ICCV2025

Workshop papers will be posted shortly. Aloha!

October 14, 2025 at 7:42 PM

🔥 ACE-G, the next evolutionary step of ACE at #ICCV2025 🔥

We disentangle coordinate regression and latent map representation, which lets us pre-train the regressor to generalize from mapping data to difficult query images.

Page: nianticspatial.github.io/ace-g/

Stellar work by Leonard Bruns et al.!

October 14, 2025 at 8:24 AM

When you present other people's work with them sitting in the audience.

ALT: a man with sweat running down his face

media.tenor.com

October 10, 2025 at 9:01 AM

I think it is a great time to have such a tutorial again. As we see competitive RANSAC-free approaches arise, it is worth looking back - and looking forward.

For those going to @iccv.bsky.social, welcome to our RANSAC tutorial on October 2025 with

- Daniel Barath

- @ericbrachmann.bsky.social

- Viktor Larsson

- Jiri Matas

- and me

danini.github.io/ransac-2025-...

#ICCV2025

October 8, 2025 at 11:26 AM

Reposted by Eric Brachmann

For those going to @iccv.bsky.social, welcome to our RANSAC tutorial on October 2025 with

- Daniel Barath

- @ericbrachmann.bsky.social

- Viktor Larsson

- Jiri Matas

- and me

danini.github.io/ransac-2025-...

#ICCV2025

October 8, 2025 at 11:22 AM

Whenever I do a voice over, I realize that I sound like Arnold Schwarzenegger when I say "coordinate". Unfortunately, in my line of work, I have to say "coordinate" a lot...

October 8, 2025 at 8:00 AM

Trendy.

A Scene is Worth a Thousand Features: Feed-Forward Camera Localization from a Collection of Image Features

Axel Barroso-Laguna, Tommaso Cavallari, Victor Adrian Prisacariu, Eric Brachmann

arxiv.org/abs/2510.00978

Trending on www.scholar-inbox.com

October 3, 2025 at 8:40 AM

Great to have Kwang on Bluesky! (And quite flattering that this is his first post 😳)

Barroso-Laguna et al., "A Scene is Worth a Thousand Features: Feed-Forward Camera Localization from a Collection of Image Features"

When contexting your feed-forward 3D point-map estimator, don't use full image pairs -- just randomly subsample! -> fast compute, more images.

October 2, 2025 at 7:55 PM

Reposted by Eric Brachmann

A Scene is Worth a Thousand Features: Feed-Forward Camera Localization from a Collection of Image Features

@axelbarroso.bsky.social, Tommaso Cavallari, Victor Adrian Prisacariu, @ericbrachmann.bsky.social

arxiv.org/abs/2510.00978

October 2, 2025 at 10:57 AM

⏩ FastForward ⏩ A new model for efficient visual relocalization. Accurate camera poses without building structured 3D maps.

nianticspatial.github.io/fastforward/

Work by @axelbarroso.bsky.social, Tommaso Cavallari and Victor Adrian Prisacariu.

October 2, 2025 at 8:48 AM

Small but important quality of life update by @rerun.io: Customizable frustum colors. Finally we can distinguish estimates and ground truth in the same 3D view ;)

September 29, 2025 at 9:00 AM

Reposted by Eric Brachmann

Here we go again 😅 This time I’m planning to take a more senior role to help mentor the next gen of publicity chairs. Please consider volunteering!

#CVPR2026 is looking for Publicity Chairs! The role includes working as part of a team to share conference updates across social media (X, Bluesky, etc.) and answering community questions.

Interested? Check out the self-nomination form in the thread.

September 28, 2025 at 11:03 PM

Recovering 6D Object Pose - Looking forward to the R6D workshop at #ICCV2025 in Hawaii! (not looking forward to the jet lag)

cmp.felk.cvut.cz/sixd/worksho...

The accompanying challenge is still open for submissions until Oct 1st. And the workshop also still accepts non-proceedings paper submissions!

September 26, 2025 at 1:44 PM

Reposted by Eric Brachmann

This is 100% true, including the H100 being 12.3 km away.

Did you know in Germany, there are signs in every city indicating the way to the closest H100, in case of an upcoming deadline?

September 3, 2025 at 11:35 AM

September 3, 2025 at 7:27 AM

Did you know in Germany, there are signs in every city indicating the way to the closest H100, in case of an upcoming deadline?

Reposted by Eric Brachmann