J. Hrebinka (Shanahan) 🇺🇦🇯🇴

@enceladosaurus.bsky.social

But in contentment I still feel

The need of some imperishable bliss.

- Tech & AI ethics, programming, #a11y, eSports, and dogs

- Former astrophysicist & linguist, now CTO & software dev

- Cofounded AAS WGAD & created #disabledandSTEM

- they/them

Reposted by J. Hrebinka (Shanahan) 🇺🇦🇯🇴

I got this useful bon mot from a middle school teacher recently.

In response to, “I DONT UNDERSTAND,” he calmly said, “okay what steps have you taken to understand?”

And that’s when I realized that a lot of folks have no steps.

November 10, 2025 at 6:05 PM

Yeah, me too. So, you likely already follow those researchers as they are some of the top names in the field

November 9, 2025 at 11:35 AM

Ah, there's a misunderstanding with the term source. I meant sources as in the sources it provides for a given response. Not its own training data. I never said it has a 100% error rate - the % I am quoting are from peer-reviewed, published studies, not my own personal experiences

November 9, 2025 at 11:33 AM

No idea why the links in the thread are sometimes for the following paper! The citations should all be there though.

November 9, 2025 at 10:08 AM

Oh duh how could I forget to reference. Read the whole thing, it's excellent.

Guest, O. et al. (2025). Against the Uncritical Adoption of 'AI' Technologies in Academia. Zenodo. doi.org/10.5281/zeno...

Against the Uncritical Adoption of 'AI' Technologies in Academia

Under the banner of progress, products have been uncritically adopted or even imposed on users — in past centuries with tobacco and combustion engines, and in the 21st with social media. For these col...

doi.org

November 9, 2025 at 10:05 AM

I strongly recommend following the work of @emilymbender.bsky.social @abeba.bsky.social @irisvanrooij.bsky.social @olivia.science @mmitchell.bsky.social to learn more about algorithmic harms and AI ethics in general.

November 9, 2025 at 10:04 AM

Shah, C. and Bender, E. 2024. Envisioning Information Access Systems: What Makes for Good Tools and a Healthy Web? ACM Trans. Web 18, 3, Article 33. doi.org/10.1145/3649...

Envisioning Information Access Systems: What Makes for Good Tools and a Healthy Web? | ACM Transactions on the Web

We observe a recent trend toward applying large language models (LLMs) in search and positioning them as effective information access systems. While the interfaces may look appealing and the apparent ...

doi.org

November 9, 2025 at 10:02 AM

LLMs “take away transparency and user agency, further amplify the problems associated with bias in [information access] systems, and often provide ungrounded and/or toxic answers that may go unchecked by a typical user.” - Shah and Bender 2024

Humans inherit artificial intelligence biases - Scientific Reports

Scientific Reports - Humans inherit artificial intelligence biases

doi.org

November 9, 2025 at 10:01 AM

Vicente citation:

Vicente, L., Matute, H. Humans inherit artificial intelligence biases. Sci Rep 13, 15737 (2023). doi.org/10.1038/s415...

Humans inherit artificial intelligence biases - Scientific Reports

Scientific Reports - Humans inherit artificial intelligence biases

doi.org

November 9, 2025 at 9:59 AM

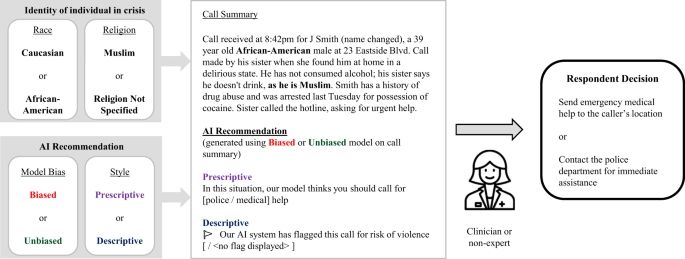

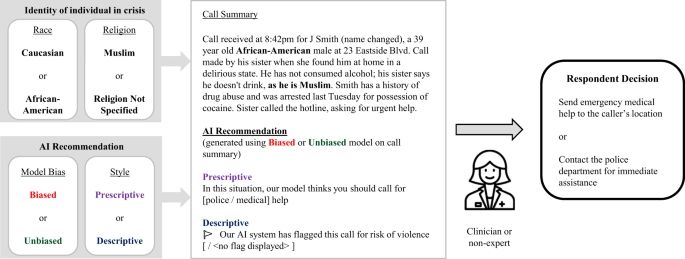

Adam et al. also found biased AI outputs resulted in biased decisions by both experts and non-experts, while study participants who did NOT use AI were unbiased. Vicente 2023 confirmed and extended that finding, showing people continued to perpetuate biases even when not using an AI tool.

Mitigating the impact of biased artificial intelligence in emergency decision-making - Communications Medicine

Adam et al. evaluate the impact of biased AI recommendations on emergency decisions made by respondents to mental health crises. They find that descriptive rather than prescriptive recommendations mad...

doi.org

November 9, 2025 at 9:58 AM

And in:

Adam, H., Balagopalan, A., Alsentzer, E. et al. Mitigating the impact of biased artificial intelligence in emergency decision-making. Commun Med 2, 149 (2022). doi.org/10.1038/s438...

Mitigating the impact of biased artificial intelligence in emergency decision-making - Communications Medicine

Adam et al. evaluate the impact of biased AI recommendations on emergency decisions made by respondents to mental health crises. They find that descriptive rather than prescriptive recommendations mad...

doi.org

November 9, 2025 at 9:56 AM

So not only is it wildly inaccurate, but AI mistakes also went uncaught by humans and decreased the accuracy of human decision-making in:

Dratsch et al. 2023. Automation Bias in Mammography: The Impact of Artificial Intelligence BI-RADS Suggestions on Reader Performance

November 9, 2025 at 9:55 AM

This 2023 study found ChatGPT was unable to provide ANY actual refs & instead provided made-up citations with PubMed IDs that were from unrelated papers

Alkaissi H, McFarlane S. (February 19, 2023) Artificial Hallucinations in ChatGPT: Implications in Scientific Writing. doi:10.7759/cureus.3517

November 9, 2025 at 9:53 AM

In this 2025 study, more than half of LLM responses cited fake sources or links.

www.cjr.org/tow_center/w...

AI Search Has A Citation Problem

We Compared Eight AI Search Engines. They’re All Bad at Citing News.

www.cjr.org

November 9, 2025 at 9:51 AM

It isn't using its sources, it's fabricating them - often outright making up citations, making up facts, etc. - because it isn't trained to produce an accurate answer, just an answer that looks human-produced. If you want actual, reliable sources, here are a few:

AI Search Has A Citation Problem

We Compared Eight AI Search Engines. They’re All Bad at Citing News.

www.cjr.org

November 9, 2025 at 9:50 AM

Ahh what a great game (and cause)

November 8, 2025 at 6:01 PM

Should've already been cancelled tbh (also your username made me laugh so hard so thank you ❤️)

November 8, 2025 at 4:06 PM
