Code: github.com/echonet/Echo...

Trial: clinicaltrials.gov/study/NCT072...

Blog: substack.com/home/post/p-...

Press: divisionofresearch.kaiserpermanente.org/large-ai-int...

Given an echo video or echo report, it can retrieve similar historical studies that match the same concepts.
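The post does not say how retrieval works. One common approach, sketched here purely as an assumption, is to embed the query (video or report) and every historical study into a shared vector space and rank studies by cosine similarity. The function names, study IDs, and toy embeddings below are hypothetical and are not taken from the released code.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # define similarity with a zero vector as 0
    return dot / (norm_a * norm_b)


def retrieve_similar(query_embedding, study_embeddings, top_k=3):
    """Return the top_k (study_id, score) pairs, most similar first."""
    scored = [
        (study_id, cosine_similarity(query_embedding, emb))
        for study_id, emb in study_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional embeddings (hypothetical; real embeddings are far larger).
historical = {
    "study_a": [0.9, 0.1, 0.0],
    "study_b": [0.0, 1.0, 0.0],
    "study_c": [0.7, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]  # embedding of the query video or report
top = retrieve_similar(query, historical, top_k=2)
print(top)
```

In this toy setup the query ranks `study_a` first and `study_c` second, because their embeddings point in nearly the same direction as the query.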

Substantial time and effort went into curating the largest existing echocardiography database.

EchoPrime is trained on 1000x the data of EchoNet-Dynamic, our first model for AI-LVEF, and 10x the data of existing AI echo models.

Preprint: medrxiv.org/content/10.1...

Code: github.com/echonet/dias...

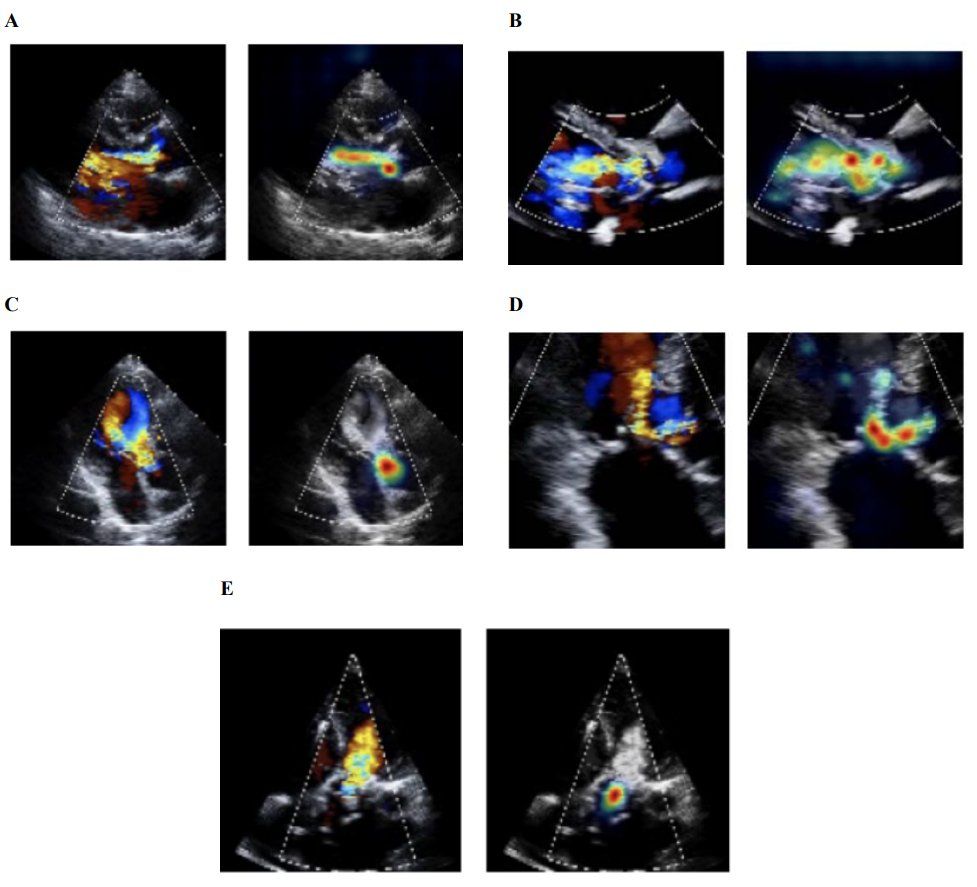

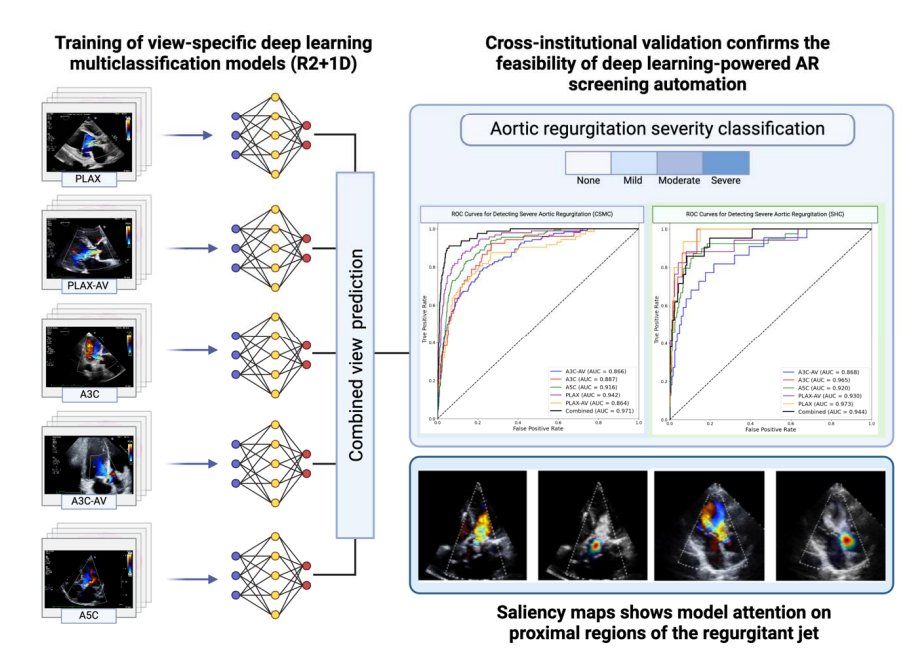

@ase360.bsky.social expert guidelines integrate many elements for comprehensive assessment; however, their complexity leads to missingness and inconsistency in clinical practice.

Access to #POCUS and #echofirst improves accuracy and access to care.

x.com/David_Ouyang...

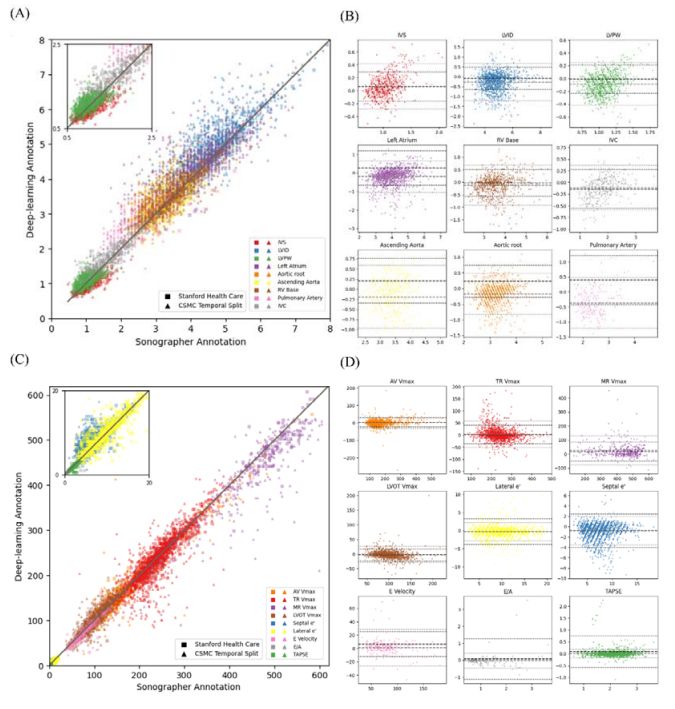

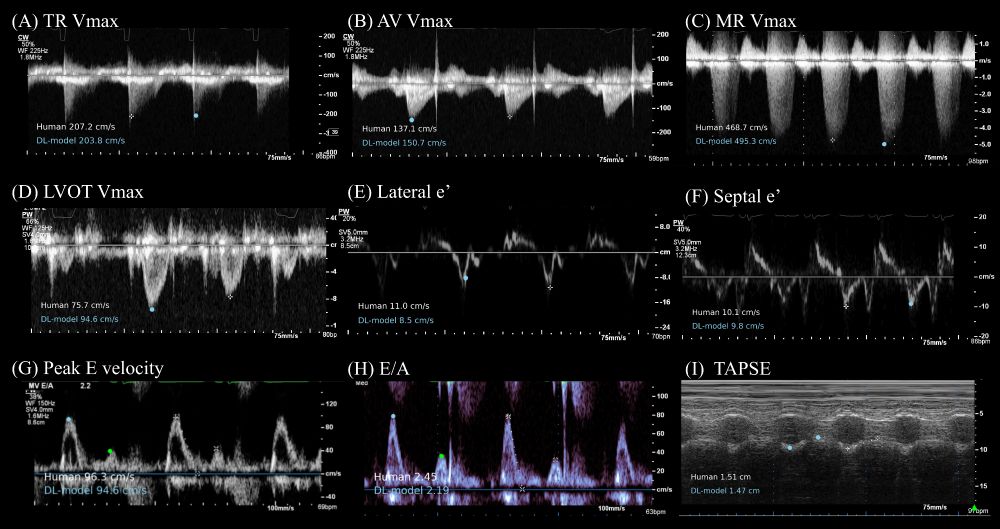

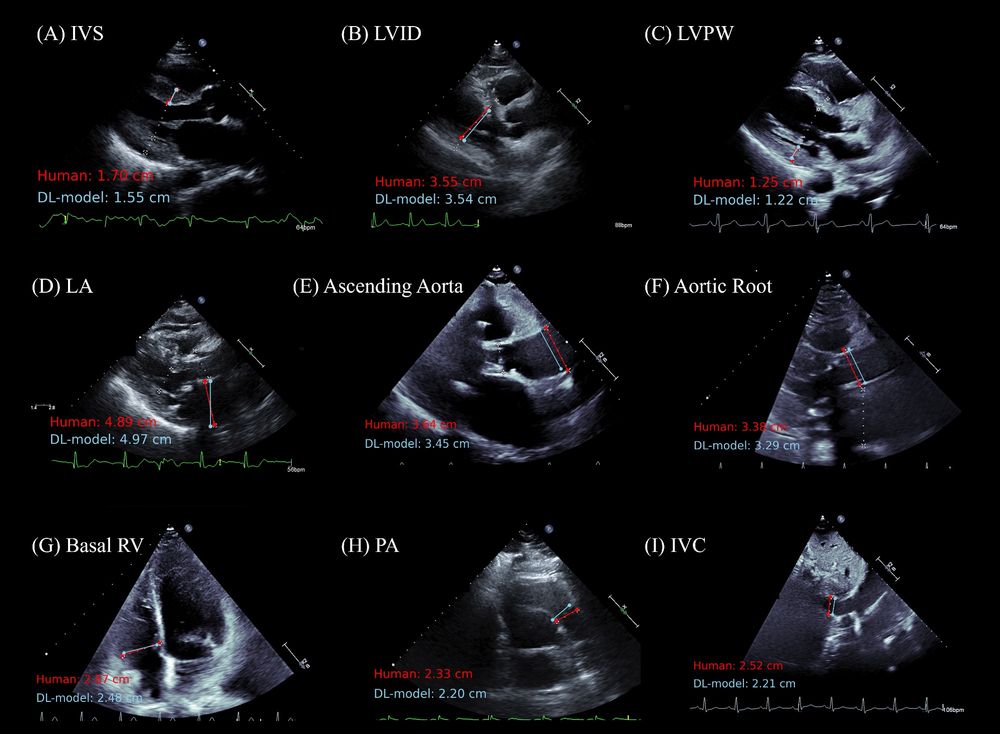

Excellent performance in test cohorts (overall R² of 0.967 in the held-out CSMC dataset and 0.987 in the SHC dataset).
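For readers unfamiliar with the metric, here is a minimal sketch of how R² is usually computed, assuming the standard coefficient of determination (1 minus the ratio of residual to total sum of squares). The post does not state which definition was used, and the numbers below are toy values, not study data.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined when y_true is constant")
    return 1.0 - ss_res / ss_tot


# Toy measurements (hypothetical): reference values vs. model predictions.
reference = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
r2 = r_squared(reference, predicted)
print(round(r2, 3))
```

A value close to 1 means the predictions account for nearly all of the variance in the reference measurements.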

Talk to us at #acc25 if you have any questions!

Preprint: medrxiv.org/content/10.1...

GitHub: github.com/echonet/obst...

Original Article: Opportunistic Screening of Chronic Liver Disease with Deep-Learning–Enhanced Echocardiography nejm.ai/3XJuPNl
