If you feed it a video or echo reports, it can find similar historical studies that match those concepts.
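
This kind of retrieval is often done as a nearest-neighbor search over embeddings. The sketch below is illustrative only and is not EchoPrime's actual code: it assumes every study has already been mapped to a fixed-length vector, and the function names, study IDs, and toy vectors are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)

def top_k_similar(query, library, k=2):
    """Return the k (study_id, score) pairs most similar to the query."""
    scored = [(study_id, cosine_similarity(query, vec))
              for study_id, vec in library.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical embeddings standing in for historical studies.
library = {
    "study_a": [0.9, 0.1, 0.0],
    "study_b": [0.1, 0.9, 0.0],
    "study_c": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]  # hypothetical embedding of a new video or report
results = top_k_similar(query, library, k=2)
for study_id, score in results:
    print(study_id, round(score, 3))
```

A real system would use a learned encoder and an approximate nearest-neighbor index rather than a linear scan, but the ranking idea is the same.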

Substantial time and effort were put into curating the largest existing echocardiography database.

EchoPrime is trained on 1000x the data of EchoNet-Dynamic, our first model for AI-LVEF, and 10x the data of existing AI echo models.

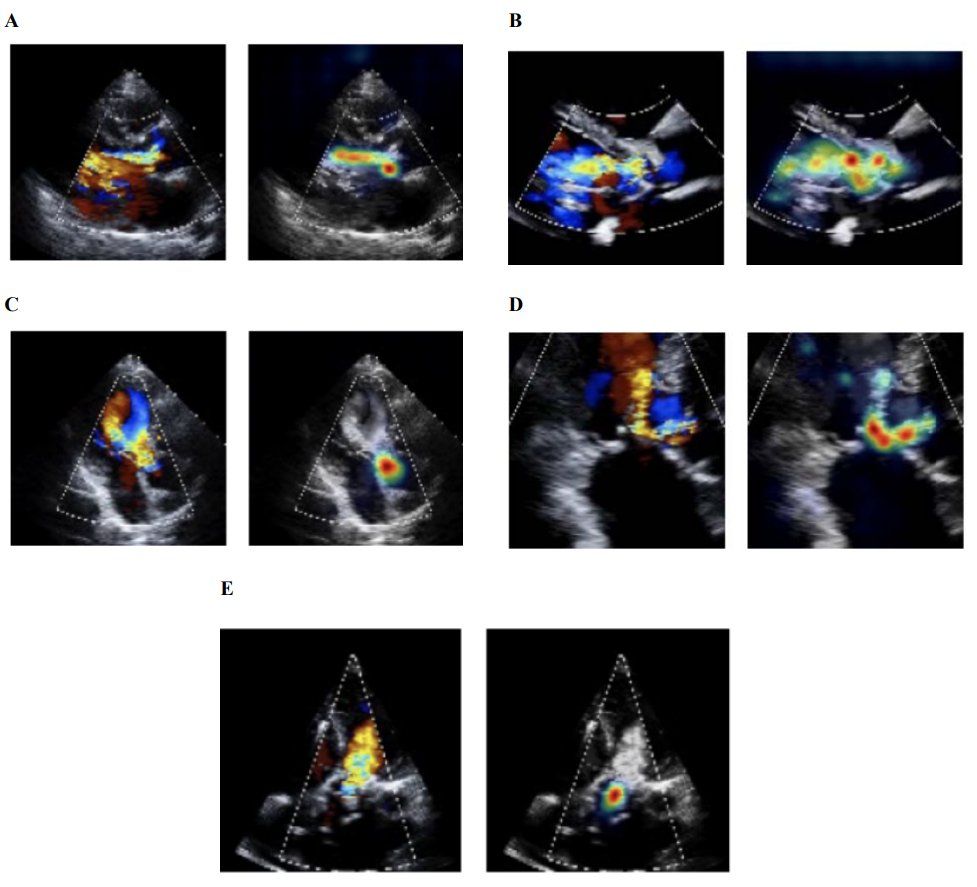

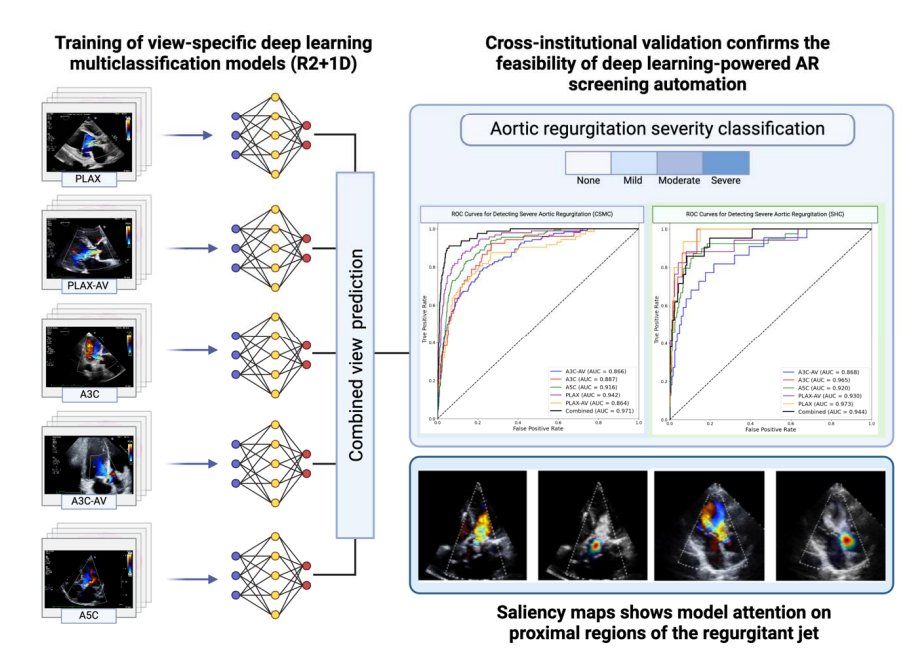

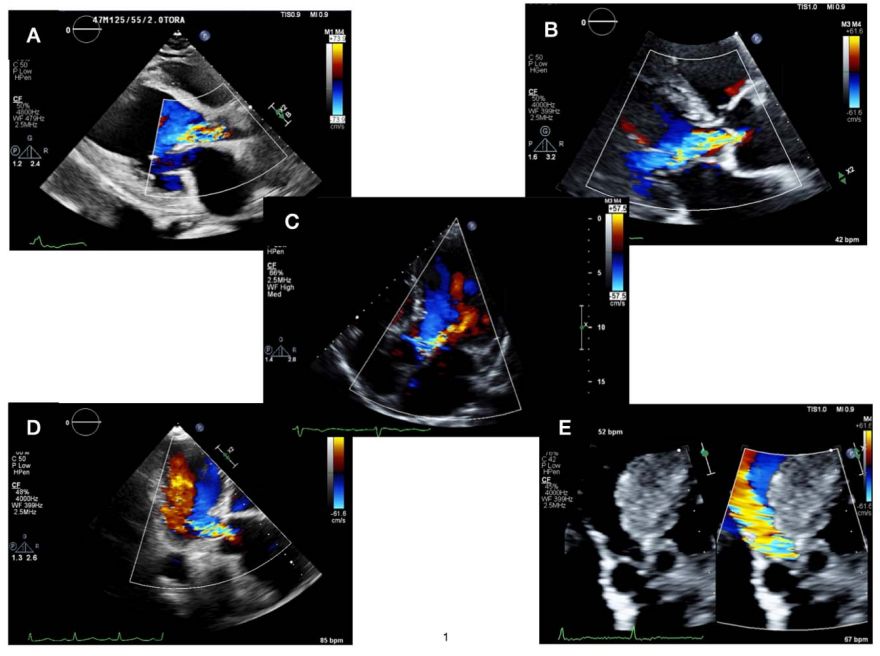

from @MedUni_Wien on the AI automated assessment of Aortic Regurgitation (AR) on #echofirst using 59,500 videos from @smidtheart.bsky.social. Led by Dr. Bob Siegel.

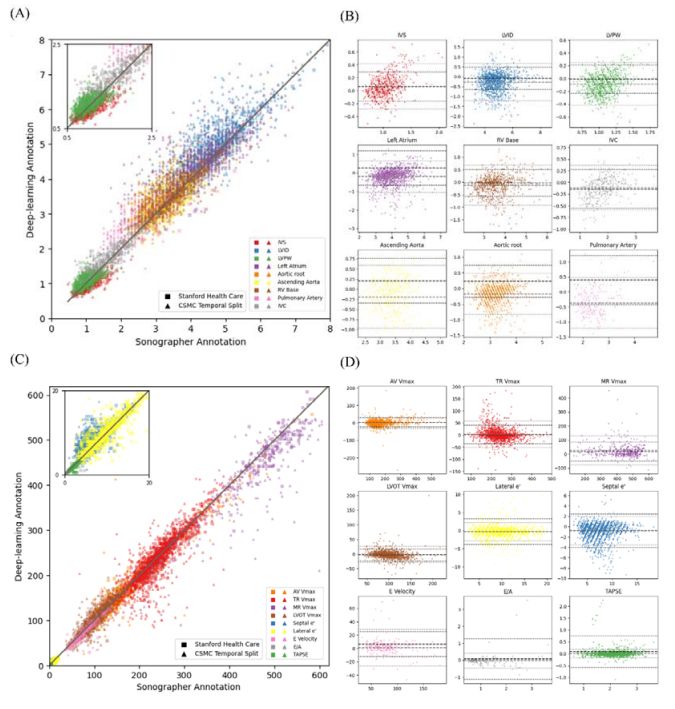

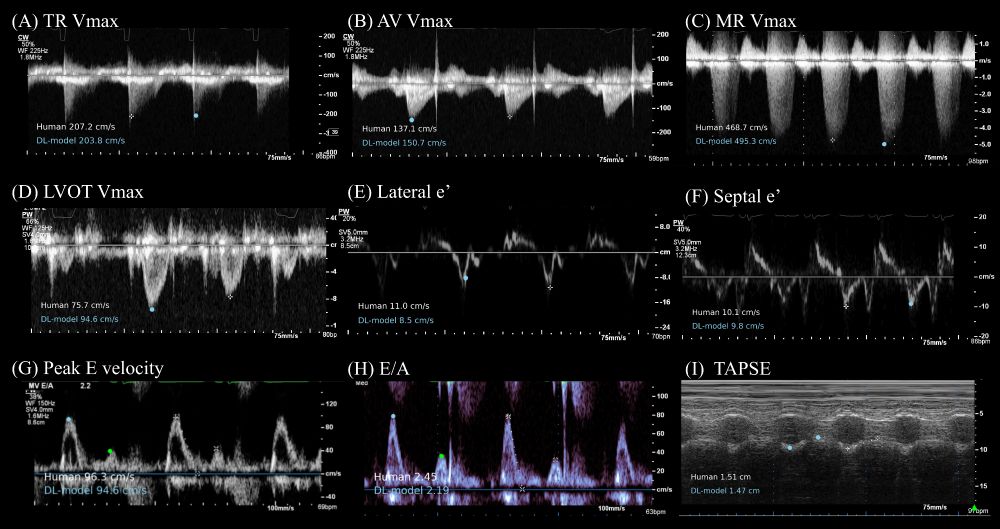

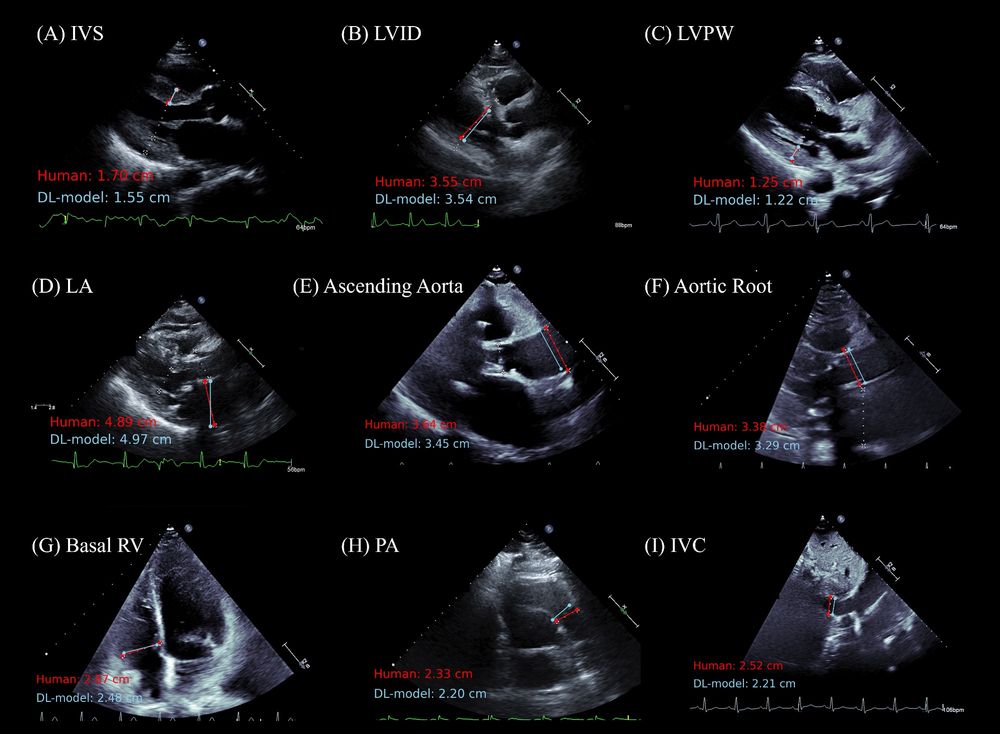

Excellent performance in test cohorts (overall R² of 0.967 in the held-out CSMC dataset and 0.987 in the SHC dataset)
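
For readers less familiar with the metric, here is a minimal sketch of the coefficient of determination under its standard definition (1 minus residual sum of squares over total sum of squares). The post does not say how the reported values were computed, so treating it as this definition is an assumption, and the numbers below are toy values, not study data.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when y_true is constant")
    return 1 - ss_res / ss_tot

# Toy values for illustration only.
reference = [50.0, 60.0, 70.0]   # hypothetical expert measurements
predicted = [52.0, 60.0, 68.0]   # hypothetical model outputs
score = r_squared(reference, predicted)
print(round(score, 3))
```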

Using more than 1,414,709 annotations from 155,215 studies from 78,037 patients for training, this is the most comprehensive #echofirst segmentation model.

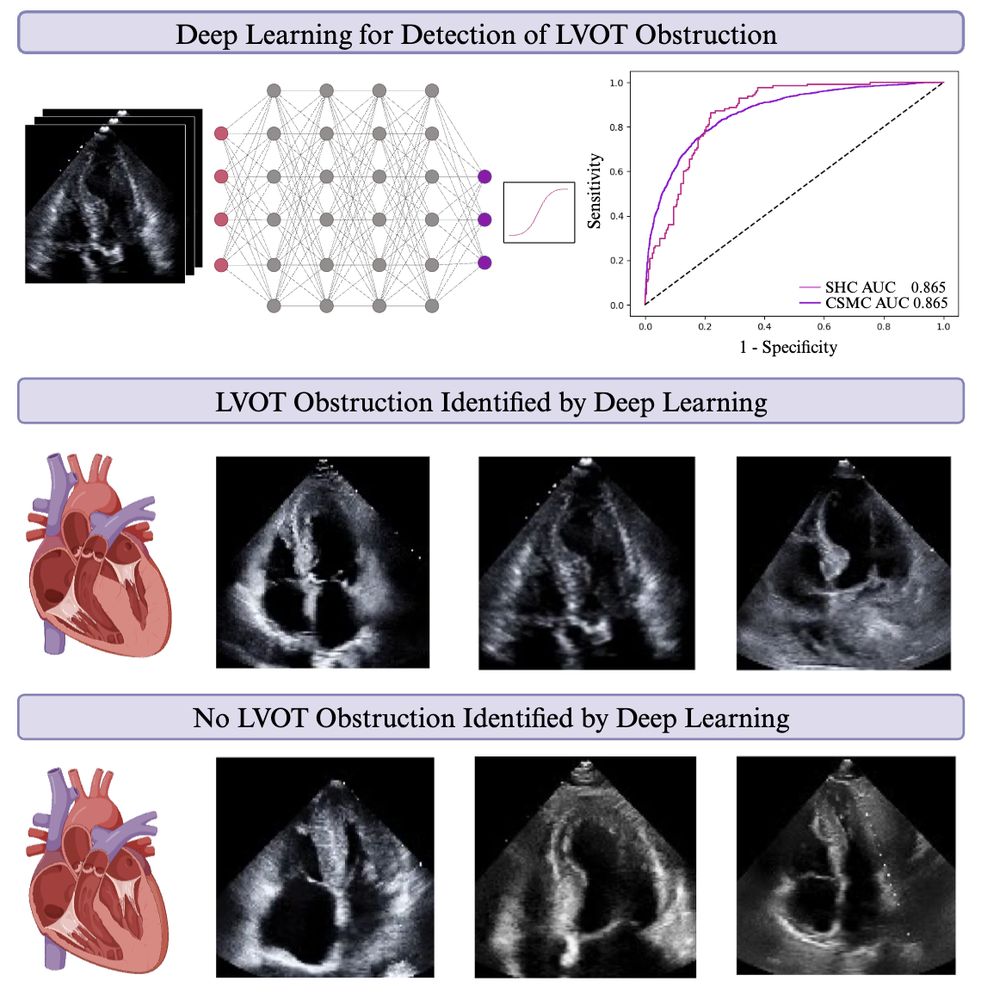

There are new therapies for obstructive HCM; however, obstruction is frequently missed. Extra #echofirst work is required to eval for obstruction.

We develop an AI model on standard A4C videos to identify patients w/ obstruction.

I've noticed that AI models trained on small datasets tend to jitter - jumping a lot from frame to frame - while robust models tend to have consistent measurements across the entire video.

Coming soon...
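
One simple way to put a number on that jitter is the mean absolute change between consecutive frames. This is a hypothetical sketch, not a metric taken from the post: `frame_jitter` is an illustrative name and both measurement traces are made-up values.

```python
def frame_jitter(measurements):
    """Mean absolute change between consecutive per-frame measurements."""
    if len(measurements) < 2:
        return 0.0  # a single frame has no frame-to-frame change
    diffs = [abs(b - a) for a, b in zip(measurements, measurements[1:])]
    return sum(diffs) / len(diffs)

# Made-up per-frame measurements (e.g. a dimension in cm) for illustration.
steady_trace = [4.0, 4.1, 4.0, 4.1, 4.0]
jumpy_trace = [4.0, 4.8, 3.5, 4.6, 3.7]

print(round(frame_jitter(steady_trace), 3))
print(round(frame_jitter(jumpy_trace), 3))
```

This raw difference also counts true physiological change across the cardiac cycle, so in practice it makes more sense to compare models on the same video than to read the value in isolation.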
