Dana Arad

@danaarad.bsky.social

NLP Researcher | CS PhD Candidate @ Technion

In this work we take a step towards understanding and mitigating the vision-language performance gap, but there's still more to explore!

This was an awesome collaboration with Yossi Gandelsman, @boknilev.bsky.social, led by Yaniv Nikankin 🤩

Paper and code: technion-cs-nlp.github.io/vlm-circuits...

Same Task, Different Circuits – Project Page

technion-cs-nlp.github.io

June 26, 2025 at 10:41 AM

By simply patching visual data tokens from later layers back into earlier ones, we improve performance by 4.6% on average, closing a third of the gap!
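A minimal sketch of what back-patching could look like on a toy model, not the authors' implementation. It assumes a model is just a list of layer functions over per-position vectors; `run_layers`, `back_patch`, and the `add_one` layer are hypothetical names made up for illustration, and real transformer layers also mix information across positions.

```python
from typing import Callable, Dict, List, Optional, Sequence

Hidden = List[List[float]]          # hidden[position] is that position's vector
Layer = Callable[[Hidden], Hidden]  # toy stand-in for one model layer


def run_layers(layers: Sequence[Layer], hidden: Hidden, start: int = 0,
               cache: Optional[Dict[int, Hidden]] = None) -> Hidden:
    """Apply layers[start:] in order; optionally store a copy of each layer's output."""
    for i in range(start, len(layers)):
        hidden = layers[i](hidden)
        if cache is not None:
            cache[i] = [row[:] for row in hidden]
    return hidden


def back_patch(layers: Sequence[Layer], inputs: Hidden,
               data_positions: Sequence[int],
               src_layer: int, dst_layer: int) -> Hidden:
    """Overwrite data-position states at the earlier dst_layer with the states
    the same positions reached at the later src_layer, then finish the pass."""
    if not 0 <= dst_layer < src_layer < len(layers):
        raise ValueError("need 0 <= dst_layer < src_layer < number of layers")
    cache: Dict[int, Hidden] = {}
    run_layers(layers, inputs, cache=cache)            # clean pass, cache all layers
    patched = [row[:] for row in cache[dst_layer]]     # output of the earlier layer
    for pos in data_positions:
        patched[pos] = cache[src_layer][pos][:]        # paste in the later-layer state
    return run_layers(layers, patched, start=dst_layer + 1)


# Toy model (assumption): four identical layers that add 1.0 to every value.
def add_one(hidden: Hidden) -> Hidden:
    return [[x + 1.0 for x in row] for row in hidden]


toy_layers = [add_one] * 4
patched_out = back_patch(toy_layers, [[0.0], [0.0]],
                         data_positions=[0], src_layer=2, dst_layer=0)
print(patched_out)
```

In the toy run only position 0 is treated as a data position, so it effectively passes through the intermediate layers twice while position 1 is left untouched.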

June 26, 2025 at 10:41 AM

4. Zooming in on data positions, we show that visual representations gradually align with their textual analogs across model layers (also shown by @zhaofeng_wu et al.). We hypothesize that this alignment may happen too late in the model for the information to be processed, and fix it with back-patching.
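One way to picture layer-wise alignment is to compare, at each layer, the representation of a visual data token with that of its textual analog. The sketch below assumes cosine similarity as the measure (the post does not name one) and uses made-up two-dimensional vectors; `cosine` and `alignment_by_layer` are hypothetical helper names.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def alignment_by_layer(visual: Sequence[Sequence[float]],
                       textual: Sequence[Sequence[float]]) -> List[float]:
    """visual[l] and textual[l] are the layer-l vectors for matched tokens."""
    return [cosine(v, t) for v, t in zip(visual, textual)]


# Made-up vectors (assumption): the visual vector drifts toward the textual one.
visual_states = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
textual_states = [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
scores = alignment_by_layer(visual_states, textual_states)
print(scores)
```

A curve that only rises in the last few layers would be consistent with the hypothesis that alignment arrives too late to be used.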

June 26, 2025 at 10:41 AM

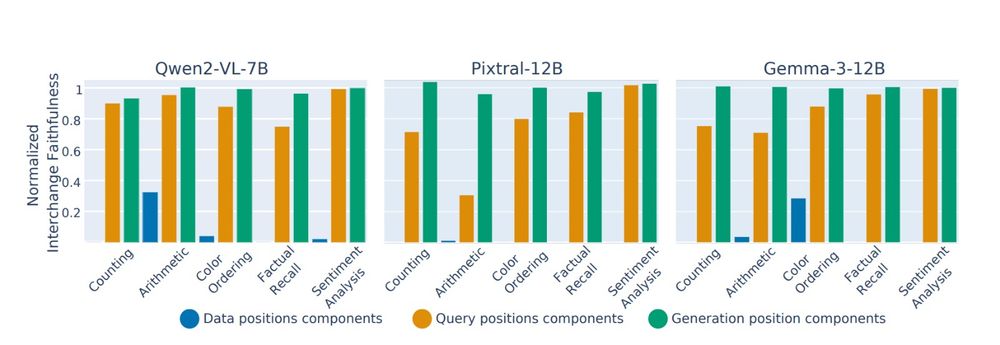

3. Data sub-circuits, however, are modality-specific; swapping them significantly degrades performance. This is critical: it highlights that differences in data processing are a key factor in the performance gap.

June 26, 2025 at 10:41 AM

2. Structure is only half the story: different circuits can still implement similar logic. We swap sub-circuits between modalities to measure cross-modal faithfulness.

Turns out, query and generation sub-circuits are functionally equivalent, retaining faithfulness when swapped!
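To make the swap concrete, here is a rough sketch that builds a hybrid circuit by replacing one position's sub-circuit with the other modality's. It assumes a circuit can be written as a set of (position type, component name) pairs; the component names are invented, and measuring faithfulness of the hybrid would still require running the model, which is not shown.

```python
from typing import Set, Tuple

Component = Tuple[str, str]  # (position type, component name), a hypothetical encoding


def swap_subcircuit(base: Set[Component], donor: Set[Component],
                    position: str) -> Set[Component]:
    """Return base with its `position` sub-circuit replaced by donor's."""
    kept = {c for c in base if c[0] != position}
    borrowed = {c for c in donor if c[0] == position}
    return kept | borrowed


# Invented toy circuits (assumption), one per modality.
text_circuit = {("data", "L0.H1"), ("query", "L3.H2"), ("generation", "L10.H0")}
image_circuit = {("data", "L1.H4"), ("query", "L4.H0"), ("generation", "L10.H0")}

# Image circuit that uses the text circuit's query sub-circuit.
hybrid = swap_subcircuit(image_circuit, text_circuit, "query")
print(sorted(hybrid))
```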

June 26, 2025 at 10:41 AM

1. Circuits for the same task are mostly structurally disjoint, with an average of only 18% of components shared between modalities!

The overlap is extremely low in data and query positions, and moderate in the generation (last) position only.
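Structural overlap between two circuits can be sketched as a set comparison. The example below assumes intersection-over-union as the measure (the post does not say how the 18% figure is computed) and uses invented component names, so the numbers are illustrative only; `iou` and `overlap_by_position` are hypothetical helpers.

```python
from typing import Dict, Set, Tuple

Component = Tuple[str, str]  # (position type, component name), a hypothetical encoding


def iou(a: Set[Component], b: Set[Component]) -> float:
    """Intersection-over-union of two component sets; 0.0 if both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def overlap_by_position(circ_a: Set[Component],
                        circ_b: Set[Component]) -> Dict[str, float]:
    """IoU computed separately for each position type present in either circuit."""
    positions = sorted({c[0] for c in circ_a | circ_b})
    return {
        p: iou({c for c in circ_a if c[0] == p}, {c for c in circ_b if c[0] == p})
        for p in positions
    }


# Invented toy circuits (assumption), one per modality.
text_circuit = {("data", "L0.H1"), ("query", "L3.H2"),
                ("generation", "L10.H0"), ("generation", "L11.MLP")}
image_circuit = {("data", "L1.H4"), ("query", "L4.H0"),
                 ("generation", "L10.H0"), ("generation", "L11.MLP"),
                 ("generation", "L12.H3")}

overall = iou(text_circuit, image_circuit)
per_position = overlap_by_position(text_circuit, image_circuit)
print(overall, per_position)
```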

June 26, 2025 at 10:41 AM

We identify circuits (task-specific computational sub-graphs composed of attention heads and MLP neurons) used by VLMs to solve both variants.

What did we find? >>

June 26, 2025 at 10:41 AM

Consider object counting: we can ask a VLM "how many books are there?" given either an image or a sequence of words. Like Kaduri et al., we consider three types of positions within the input: data (image or word sequence), query ("how many..."), and generation (last token).
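A small sketch of the three position types, under a simplifying assumption: the prompt is exactly the data tokens followed by the query tokens, and the final query token doubles as the generation position. Real prompts may also contain template or special tokens, which this hypothetical `label_positions` helper ignores.

```python
from typing import List


def label_positions(n_data: int, n_query: int) -> List[str]:
    """Label each token position as 'data', 'query', or 'generation'."""
    if n_data < 0 or n_query < 1:
        raise ValueError("need n_data >= 0 and at least one query token")
    # The last token of the prompt is treated as the generation position.
    return ["data"] * n_data + ["query"] * (n_query - 1) + ["generation"]


# Example: 3 data tokens (image patches or words) and a 4-token question.
labels = label_positions(n_data=3, n_query=4)
print(labels)
```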

June 26, 2025 at 10:41 AM

Thank you! Added to my reading list ☺️

May 28, 2025 at 5:30 AM

SAEs have sparked a debate over their utility; we hope to add another perspective. Would love to hear your thoughts!

Paper: arxiv.org/abs/2505.20063

Code: github.com/technion-cs-...

Huge thanks to @boknilev.bsky.social, @amuuueller.bsky.social, it’s been great working on this project with you!

SAEs Are Good for Steering -- If You Select the Right Features

Sparse Autoencoders (SAEs) have been proposed as an unsupervised approach to learn a decomposition of a model's latent space. This enables useful applications such as steering - influencing the output...

arxiv.org

May 27, 2025 at 4:06 PM

These findings have practical implications: after filtering out features with low output scores, we see 2-3x improvements for steering with SAEs, making them competitive with supervised methods on AxBench, a recent steering benchmark (Wu and @aryaman.io et al.)
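The filtering step itself can be sketched in a few lines. How output scores are computed is defined in the paper and not reproduced here; the scores and the threshold below are made up, and `filter_features` is a hypothetical helper.

```python
from typing import Dict, List


def filter_features(output_scores: Dict[int, float], threshold: float) -> List[int]:
    """Keep the ids of SAE features whose output score is at least `threshold`."""
    return sorted(f for f, score in output_scores.items() if score >= threshold)


# Made-up scores (assumption): feature id -> output score.
scores_by_feature = {0: 0.90, 1: 0.05, 2: 0.40}
steerable = filter_features(scores_by_feature, threshold=0.1)
print(steerable)
```

Only the surviving feature ids would then be considered as steering candidates.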

May 27, 2025 at 4:06 PM

We show that high scores rarely co-occur, and emerge at different layers: features in earlier layers primarily detect input patterns, while features in later layers are more likely to drive the model’s outputs, consistent with prior analyses of LLM neuron functionality.

May 27, 2025 at 4:06 PM
