@zhaofeng_wu

et al.). We hypothesize this may happen too late in the model to process the information, and fix it with back-patching.

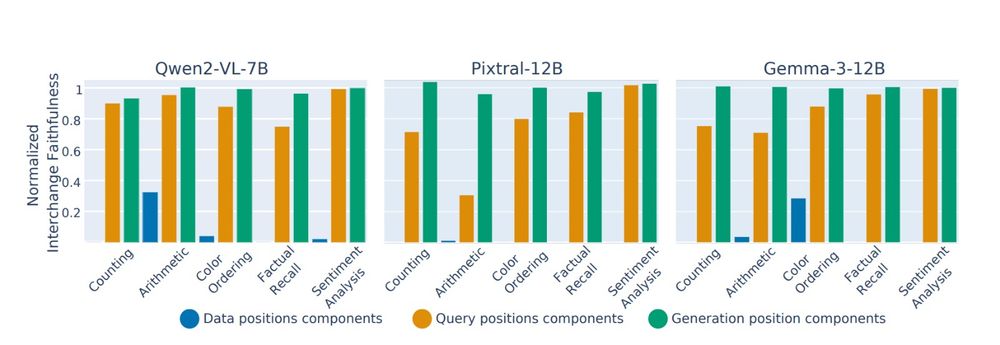

Turns out, query and generation sub-circuits are functionally equivalent, retaining faithfulness when swapped!

The overlap is extremely low in data and query positions, and moderate in the generation (last) position only.

What did we find? >>

In a new project led by Yaniv (@YNikankin on the other app), we investigate this gap from a mechanistic perspective, and use our findings to close a third of it! 🧵

Durmus et al., see image), but had not been systematically analyzed.

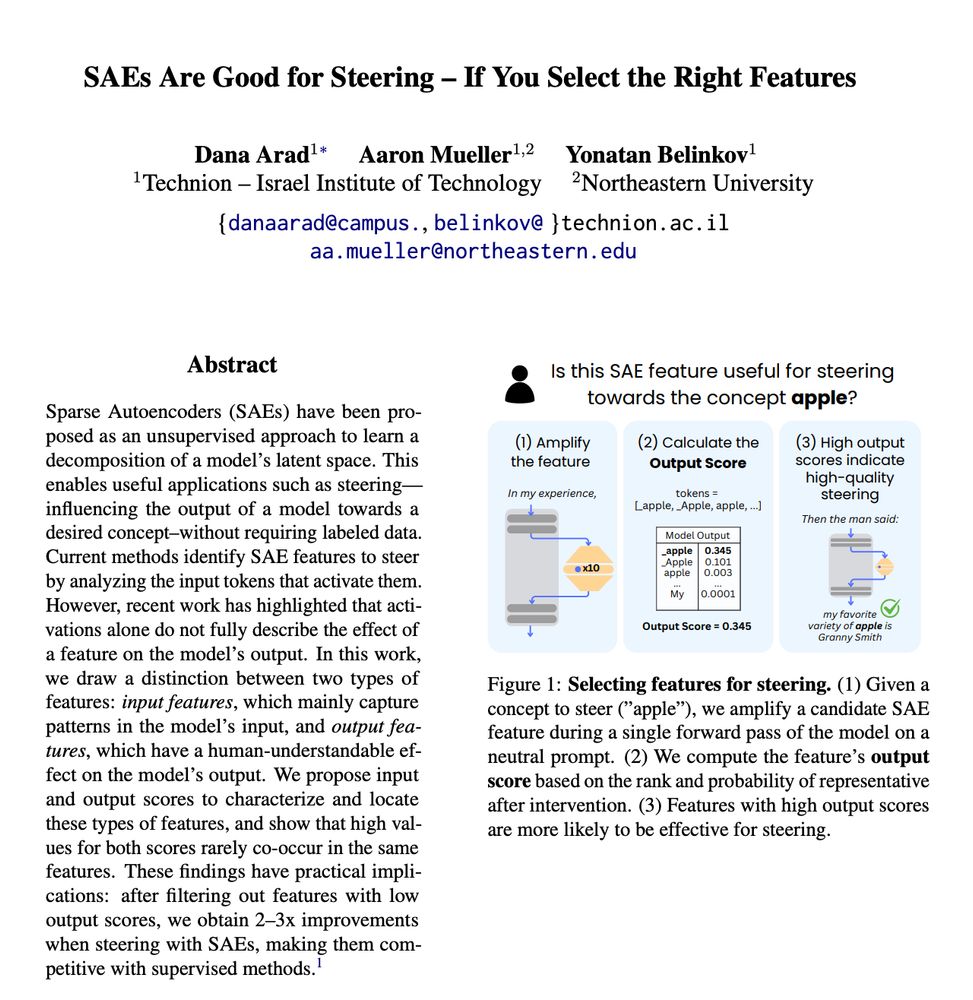

We take an additional step by introducing two simple, efficient metrics to characterize features: the input score and the output score.

Steering with each yields very different effects!

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵
