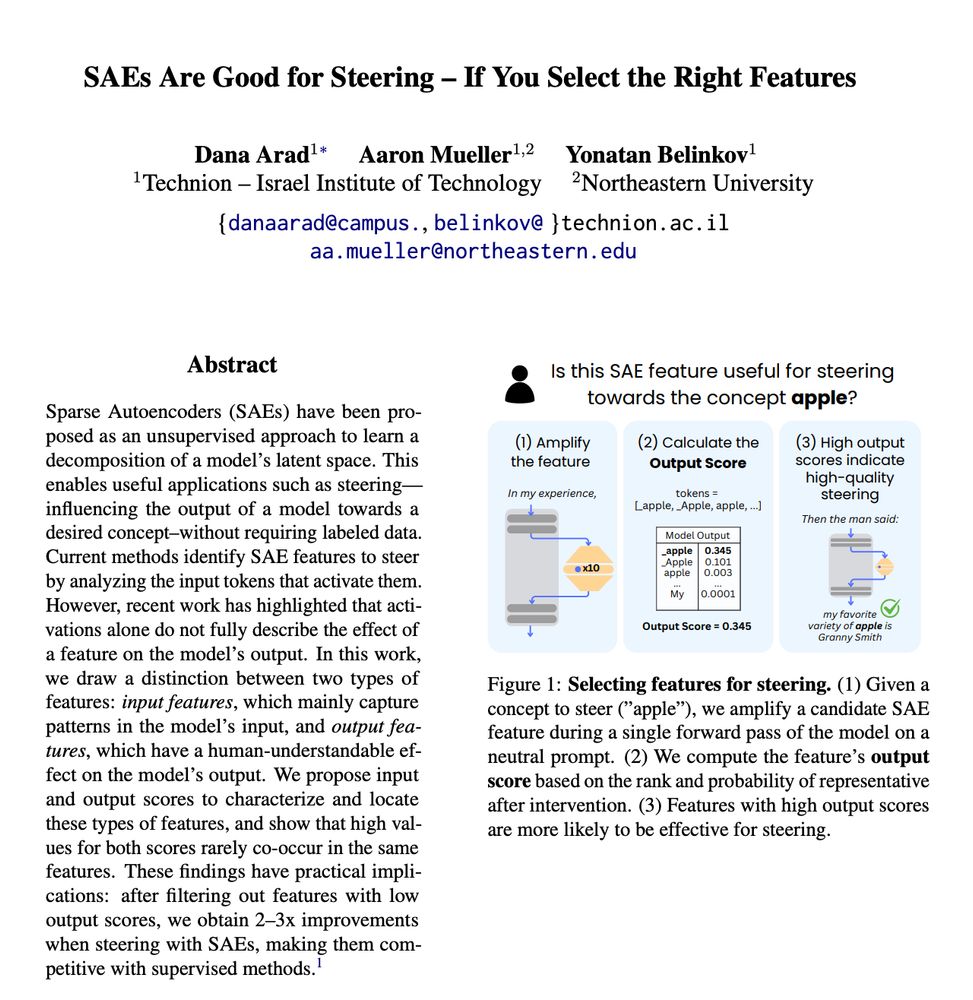

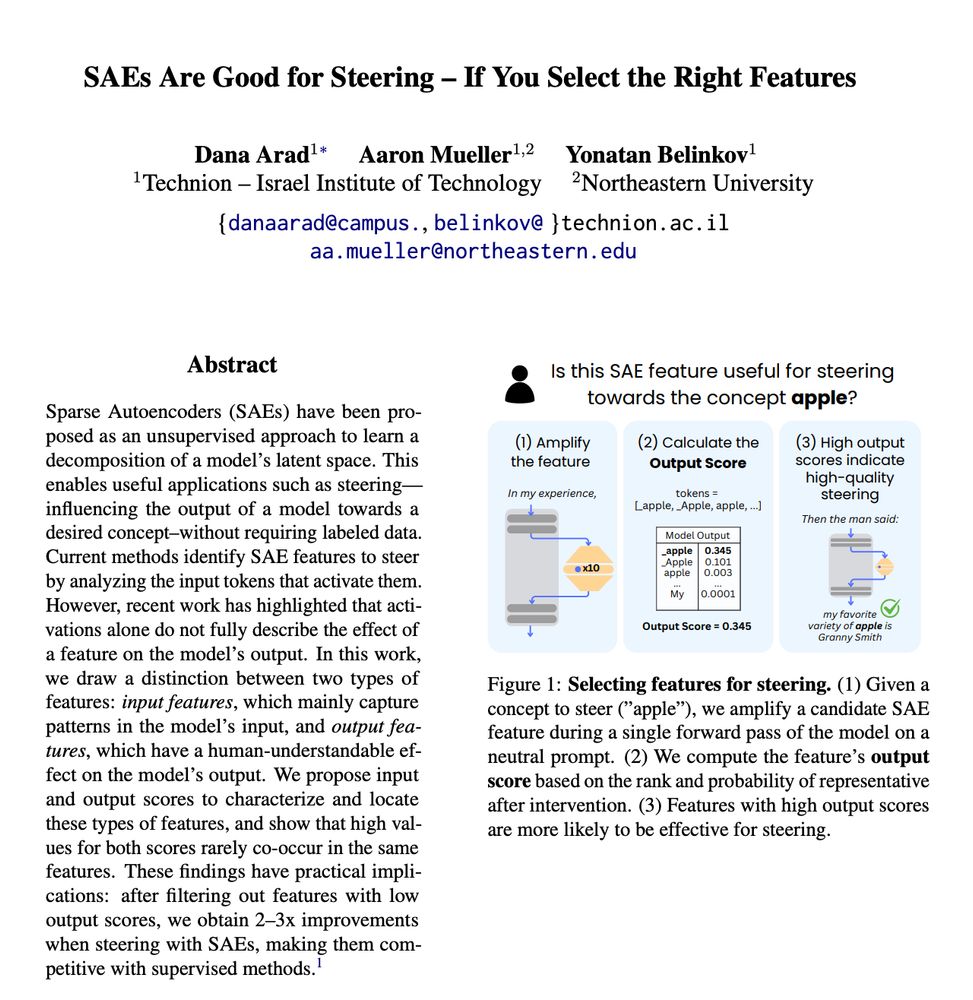

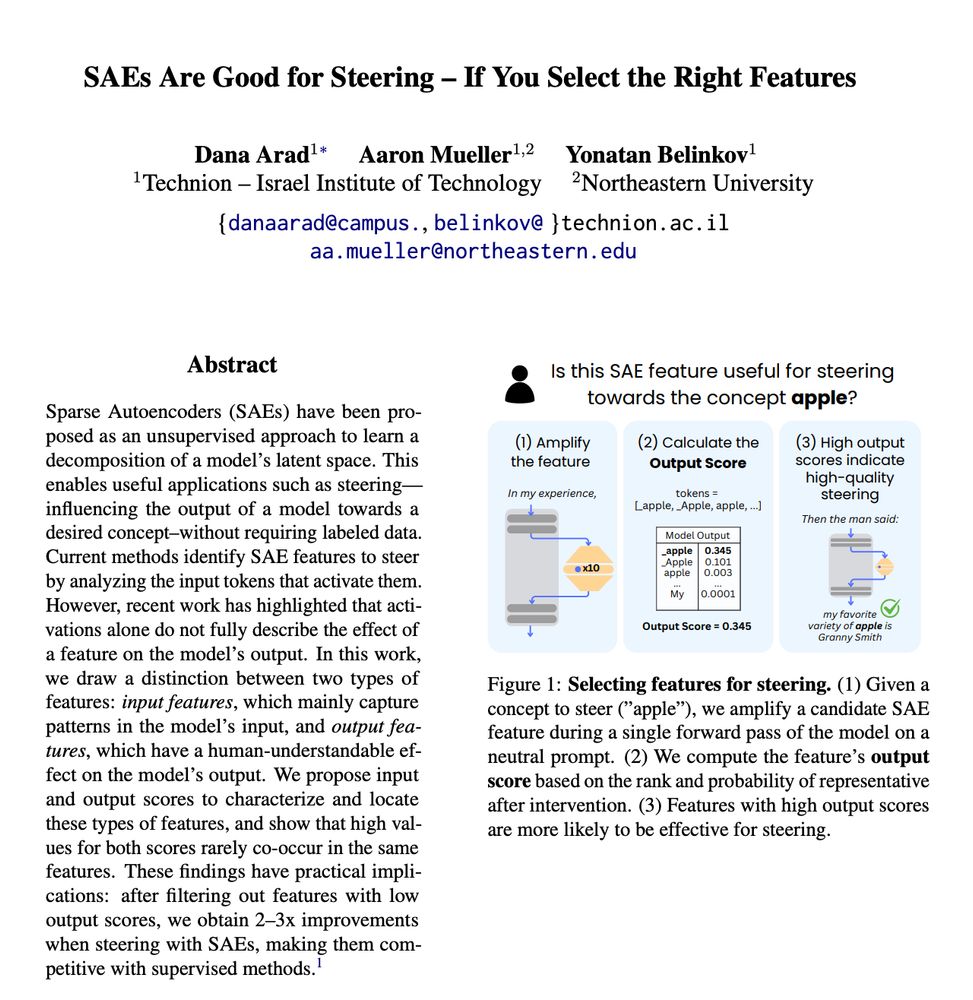

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

#BlackboxNLP 2025 invites the submission of archival and non-archival papers on interpreting and explaining NLP models.

📅 Deadlines: Aug 15 (direct submissions), Sept 5 (ARR commitment)

🔗 More details: blackboxnlp.github.io/2025/call/

Now hungry to discuss:

– LLM behavior

– Interpretability

– Biases & Hallucinations

– Why eval is so hard (but so fun)

Come say hi if that’s your vibe too!

If you're working on:

🧠 Circuit discovery

🔍 Feature attribution

🧪 Causal variable localization

now’s the time to polish and submit!

Join us on Discord: discord.gg/n5uwjQcxPR

Whether you're working on circuit discovery or causal variable localization, this is your chance to benchmark your method in a rigorous setup!

Check out the full task description here: blackboxnlp.github.io/2025/task/

The MIB shared task is a great opportunity to experiment:

✅ Clean setup

✅ Open baseline code

✅ Standard evaluation

Join the Discord server for ideas and discussions: discord.gg/n5uwjQcxPR

In a new project led by Yaniv (@YNikankin on the other app), we investigate this gap from a mechanistic perspective, and use our findings to close a third of it! 🧵

Consider submitting your work to the MIB Shared Task, part of #BlackboxNLP at @emnlpmeeting.bsky.social 2025!

The goal: benchmark existing MI methods and identify promising directions to precisely and concisely recover causal pathways in LMs >>

Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year at #BlackboxNLP, we're introducing a shared task to rigorously evaluate MI methods in language models 🧵

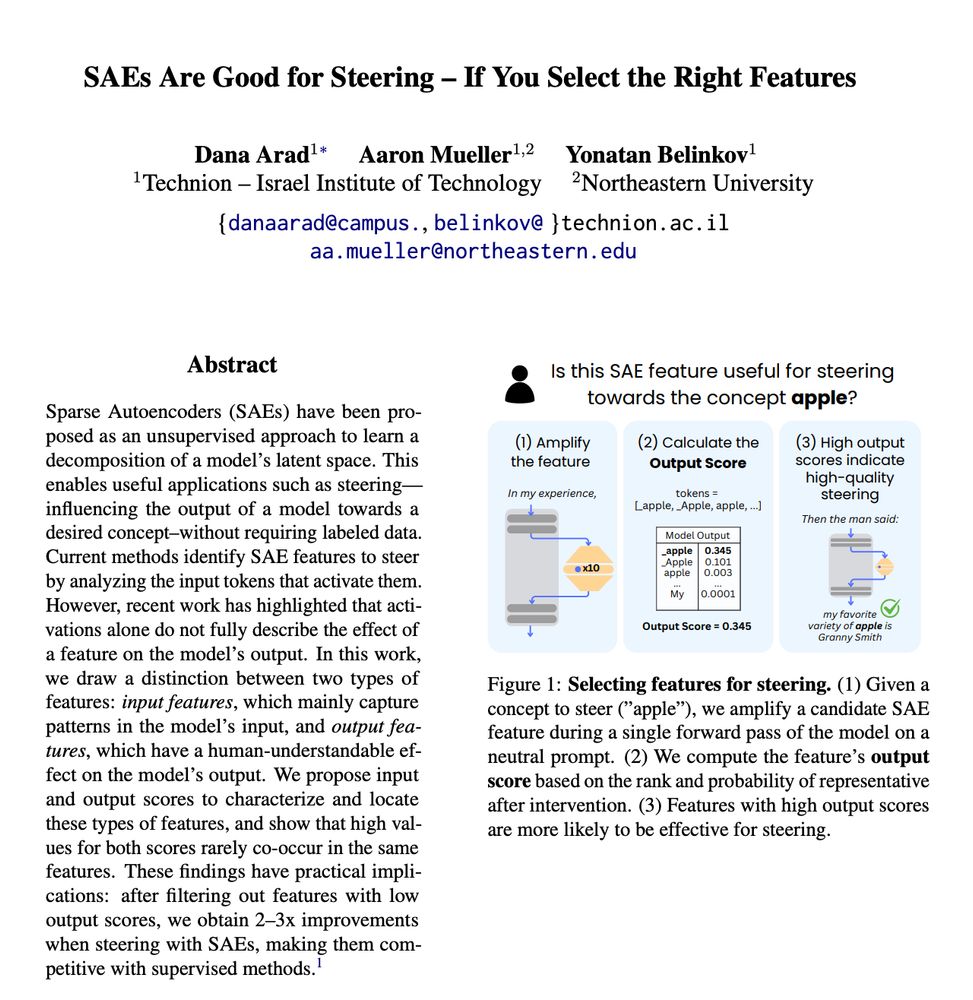

In new work led by @danaarad.bsky.social, we find that this problem largely disappears if you select the right features!

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

Ever wonder whether verbalized CoTs correspond to the internal reasoning process of the model?

We propose a novel parametric faithfulness approach, which erases information contained in CoT steps from the model parameters to assess CoT faithfulness.

arxiv.org/abs/2502.14829

LLMs can hallucinate - but did you know they can do so with high certainty even when they know the correct answer? 🤯

We find those hallucinations in our latest work with @itay-itzhak.bsky.social, @fbarez.bsky.social, @gabistanovsky.bsky.social and Yonatan Belinkov

go.bsky.app/LisK3CP
