https://christophreich1996.github.io

www.career.tu-darmstadt.de/tu-darmstadt...

by Xinrui Gong*, @olvrhhn.bsky.social*, @christophreich.bsky.social, Krishnakant Singh, @simoneschaub.bsky.social, @dcremers.bsky.social, @stefanroth.bsky.social

by @jev-aleks.bsky.social*, @christophreich.bsky.social*, @fwimbauer.bsky.social, @olvrhhn.bsky.social, Christian Rupprecht, @stefanroth.bsky.social, @dcremers.bsky.social

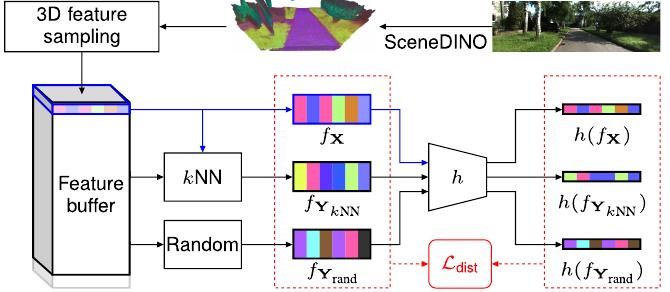

🌍 visinf.github.io/scenedino/

Come by our SceneDINO poster at NeuSLAM today 14:15 (Kamehameha II) or Tue, 15:15 (Ex. Hall I 627)!

W/ Jevtić @fwimbauer.bsky.social @olvrhhn.bsky.social Rupprecht, @stefanroth.bsky.social @dcremers.bsky.social

@ellis.eu @tuda.bsky.social

ellis.eu/news/ellis-p...

For more details, check out our project page, 🤗 demo, and #ICCV2025 paper 🚀

🌍Project page: visinf.github.io/scenedino/

🤗Demo: visinf.github.io/scenedino/

📄Paper: arxiv.org/abs/2507.06230

@jev-aleks.bsky.social

Aleksandar Jevtić, Christoph Reich, Felix Wimbauer ... Daniel Cremers

arxiv.org/abs/2507.06230

Trending on www.scholar-inbox.com

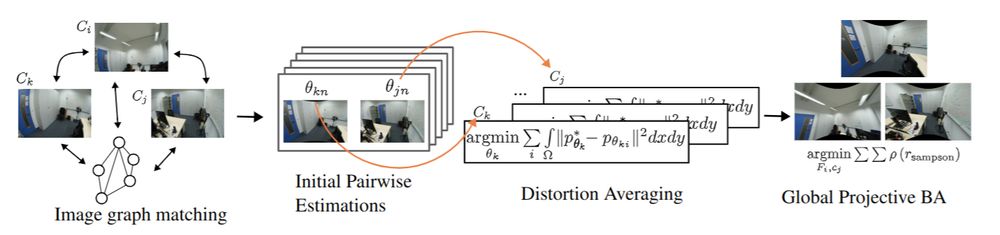

Turns out distortion calibration from multiview 2D correspondences can be fully decoupled from 3D reconstruction, greatly simplifying the problem.

arxiv.org/abs/2504.16499

github.com/DaniilSinits...

🌍: visinf.github.io/scenedino/

📃: arxiv.org/abs/2507.06230

🤗: huggingface.co/spaces/jev-a...

@jev-aleks.bsky.social @fwimbauer.bsky.social @olvrhhn.bsky.social @stefanroth.bsky.social @dcremers.bsky.social

Feed-Forward SceneDINO for Unsupervised Semantic Scene Completion

https://arxiv.org/abs/2507.06230

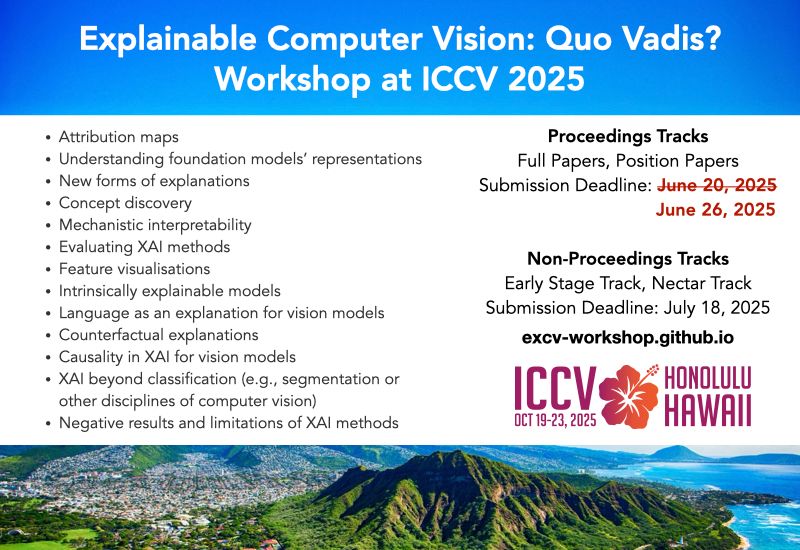

🗓️ Submissions open until June 26 AoE.

📄 Got accepted to ICCV? Congrats! Consider our non-proceedings track.

#ICCV2025 @iccv.bsky.social

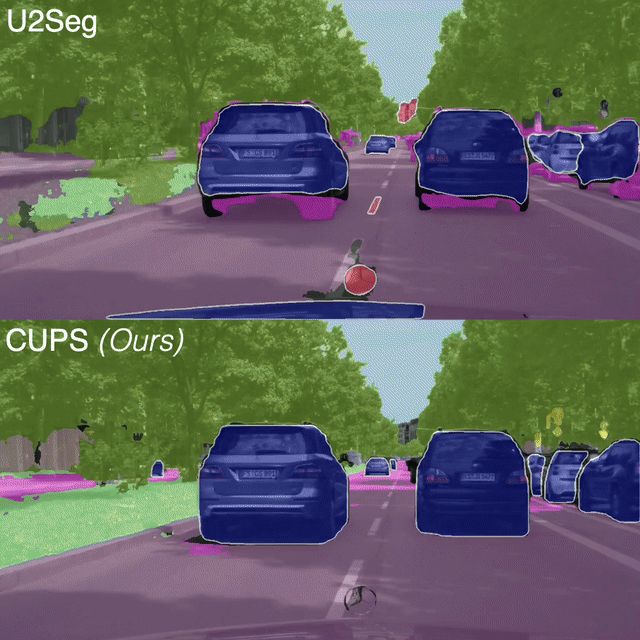

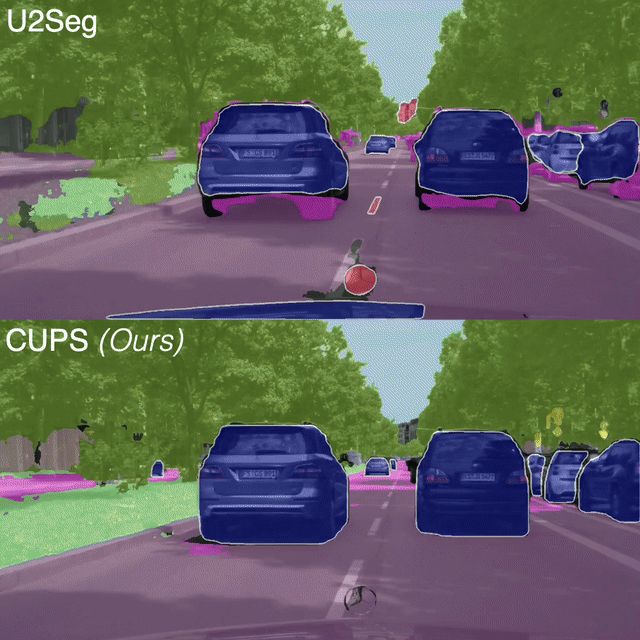

by @olvrhhn.bsky.social, @christophreich.bsky.social, @neekans.bsky.social, @dcremers.bsky.social, Christian Rupprecht, and @stefanroth.bsky.social

Sunday, 8:30 AM, ExHall D, Poster 330

Project Page: visinf.github.io/cups

Surprisingly, yes!

Our #CVPR2025 paper with @neekans.bsky.social and @dcremers.bsky.social shows that the pairwise distances in both modalities are often enough to find correspondences.

⬇️ 1/4

Turns out you can!

In our #CVPR2025 paper AnyCam, we directly train on YouTube videos and achieve SOTA results by using an uncertainty-based flow loss and monocular priors!

⬇️

1️⃣ Can be directly trained on casual videos without the need for 3D annotation.

2️⃣ Built around a feed-forward transformer and lightweight refinement.

Code and more info: ⏩ fwmb.github.io/anycam/

🌍 visinf.github.io/cups/

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

For more details, check out cvg.cit.tum.de

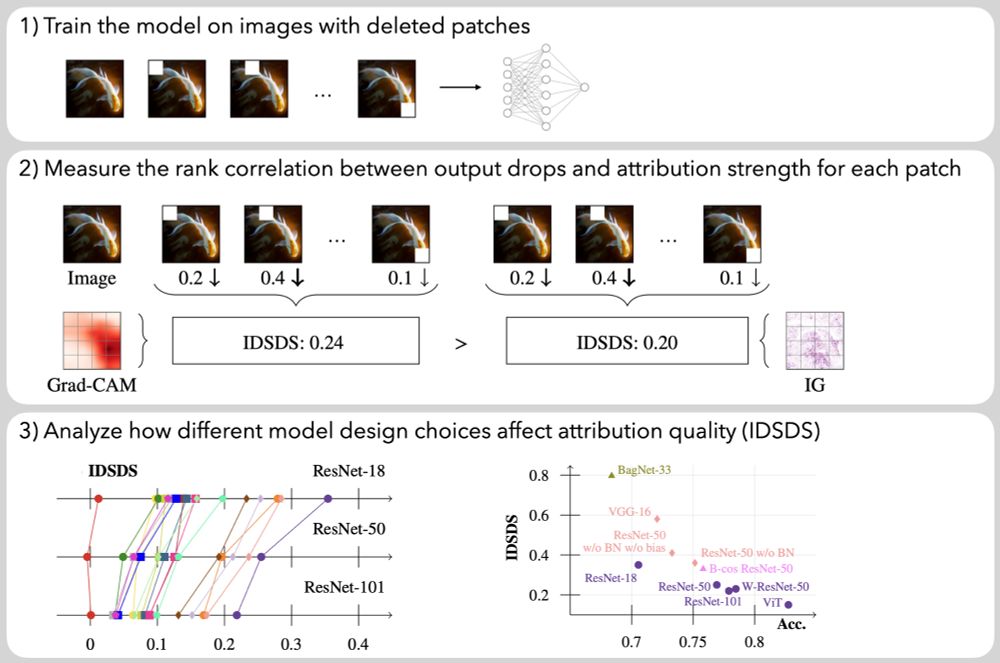

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds

visinf.github.io/primaps/

PriMaPs generate masks from self-supervised features, which can be used to boost unsupervised semantic segmentation via stochastic EM.
