• Yoshua Bengio — Regulatable ML — Sun

• Dawn Song — LOCK-LLM — Sat

• Max Tegmark — MATH-AI — Sat

• Chelsea Finn — Embodied World Models — Sat

robinhesse.github.io/workshop_spe...

(double-check for last-minute changes)

#NeurIPS

📢 Stop missing great workshop speakers just because the workshop wasn’t on your radar. Browse them all in one place:

robinhesse.github.io/workshop_spe...

(also available for @euripsconf.bsky.social)

#NeurIPS #EurIPS

We invite the community and stakeholders to submit questions, which we will discuss with our experts at the workshop! 🎤💡

👉 Submit your questions: forms.gle/8cYb4Ce3dGHi...

Workshop: excv-workshop.github.io

@iccv.bsky.social

#ICCV2025 #eXCV

For more details, check out our project page, 🤗 demo, and #ICCV2025 paper 🚀

🌍Project page: visinf.github.io/scenedino/

🤗Demo: visinf.github.io/scenedino/

📄Paper: arxiv.org/abs/2507.06230

@jev-aleks.bsky.social

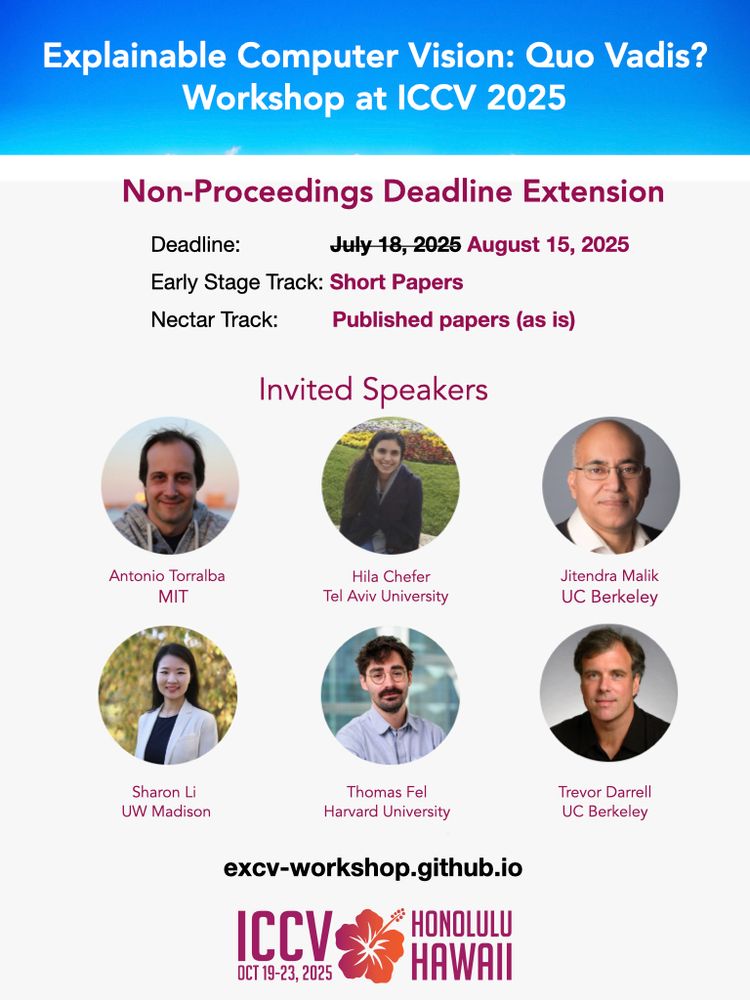

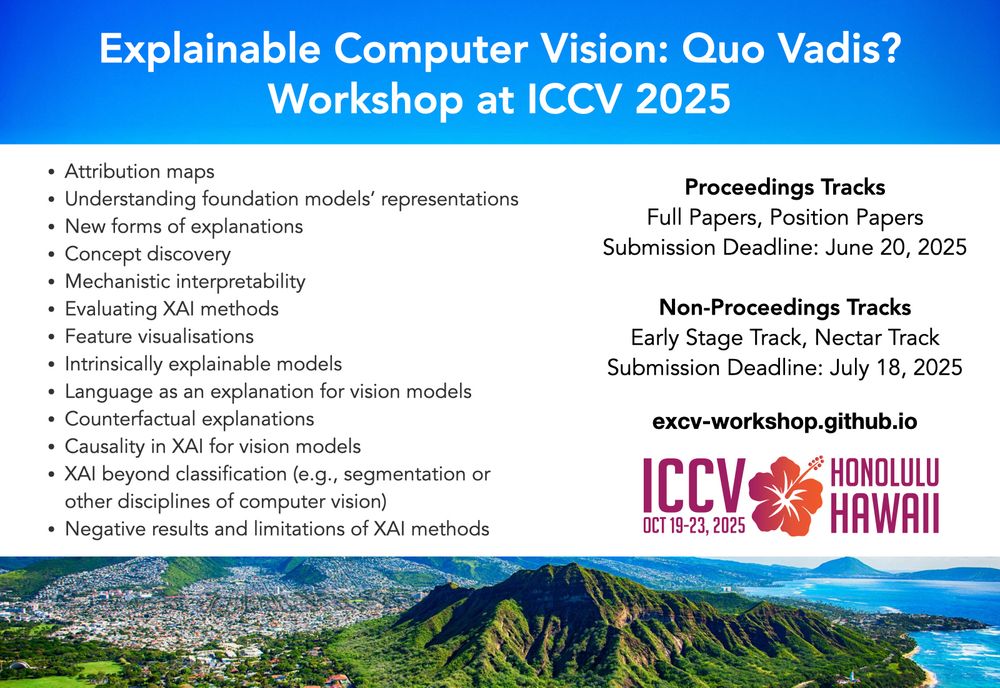

Our non-proceedings track for the eXCV workshop at ICCV is open until August 15th.

Our Nectar track accepts already-published papers as is.

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025

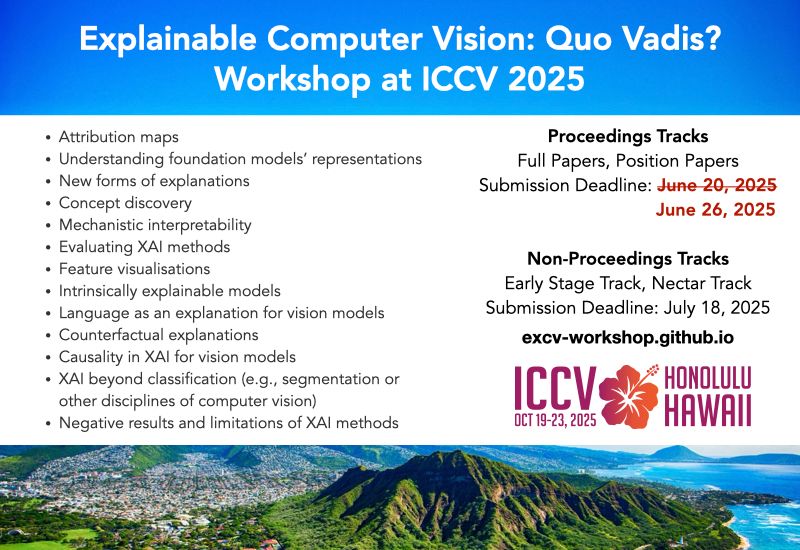

Our non-proceedings track is still open!

Paper submission deadline: July 18, 2025

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025

🌍: visinf.github.io/scenedino/

📃: arxiv.org/abs/2507.06230

🤗: huggingface.co/spaces/jev-a...

@jev-aleks.bsky.social @fwimbauer.bsky.social @olvrhhn.bsky.social @stefanroth.bsky.social @dcremers.bsky.social

🗓️ Submissions open until June 26 AoE.

📄 Got accepted to ICCV? Congrats! Consider our non-proceedings track.

#ICCV2025 @iccv.bsky.social

🔗 Project page: qbouniot.github.io/affscore_web...

Come join me on Sunday, June 15, from 10:30 to 12:30 at ExHall D, Poster #402 #CVPR2025

@iccv.bsky.social

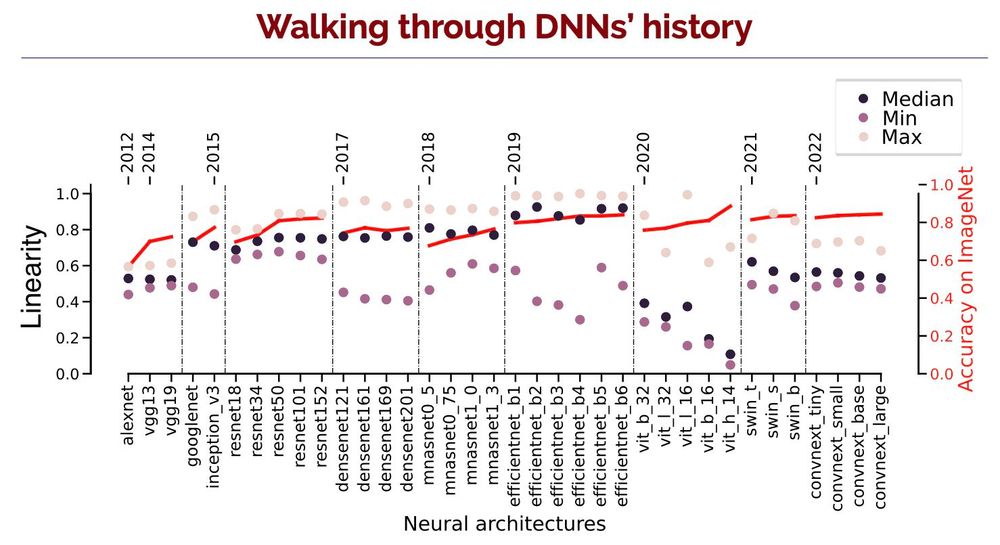

We show how to improve the interpretability of a CNN by disentangling a polysemantic channel into multiple monosemantic ones, without changing the function of the CNN.

by @robinhesse.bsky.social, Jonas Fischer, @simoneschaub.bsky.social, and @stefanroth.bsky.social

Paper: arxiv.org/abs/2504.12939

Talk: Thursday, 11:40 AM, Grand Ballroom C1

Poster: Thursday, 12:30 PM, ExHall D, Poster 31-60

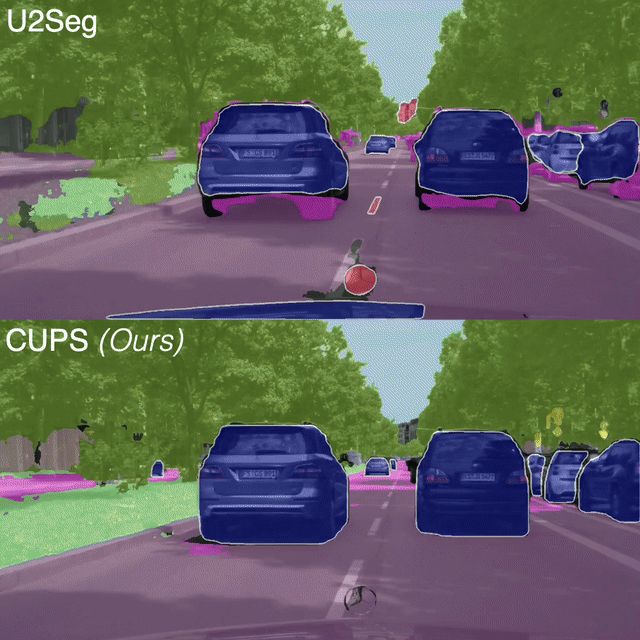

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

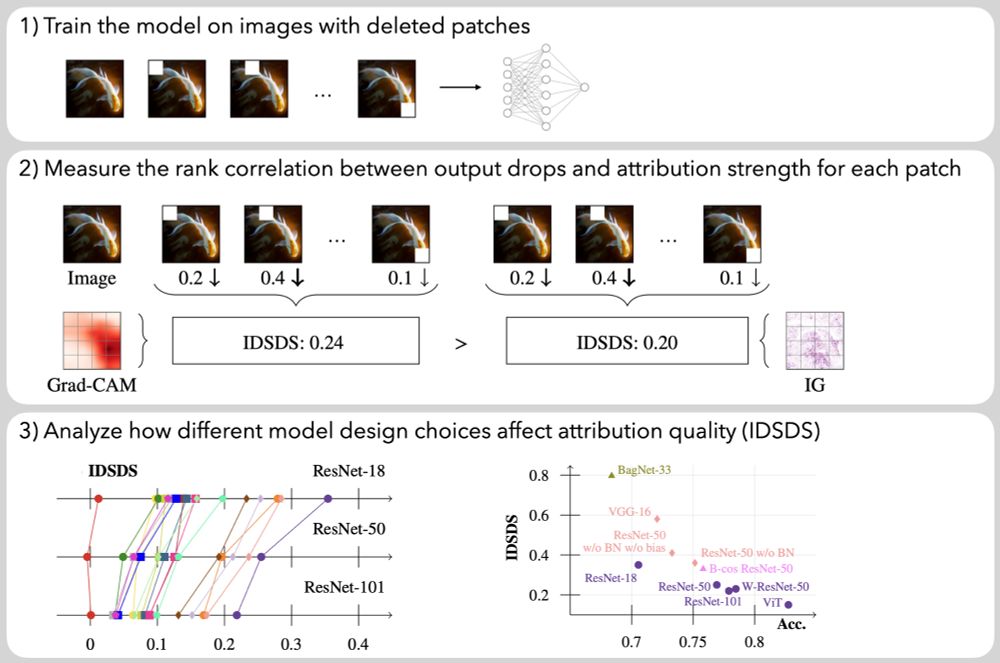

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds
