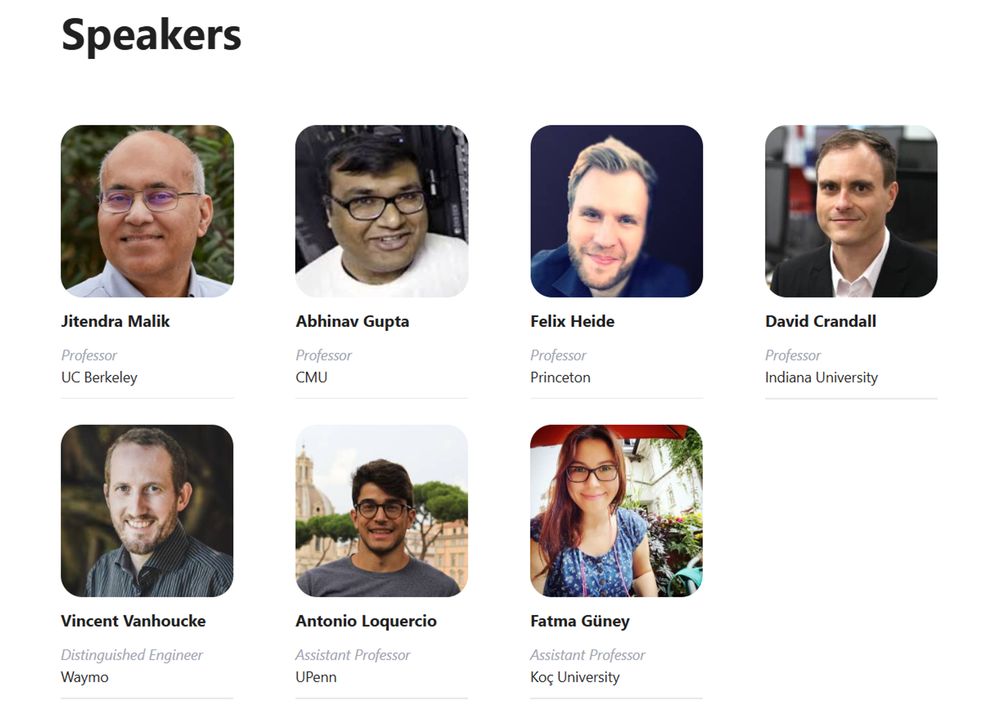

🗓️ June 12 • 📍 Room 202B, Nashville

Meet our incredible lineup of speakers covering topics from agile robotics to safe physical AI at: opendrivelab.com/cvpr2025/tut...

#cvpr2025

I'm afraid to announce that w/ our workshop on "Embodied Intelligence for Autonomous Systems on the Horizon" we will make this choice even harder: opendrivelab.com/cvpr2025/wor... #cvpr2025

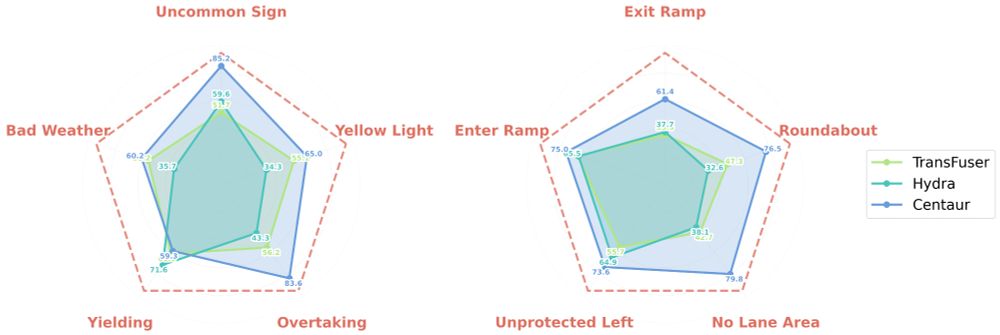

Two rounds: #CVPR2025 and #ICCV2025. $18K in prizes + several $1.5k travel grants. Submit in May for Round 1! opendrivelab.com/challenge2025/ 🧵👇

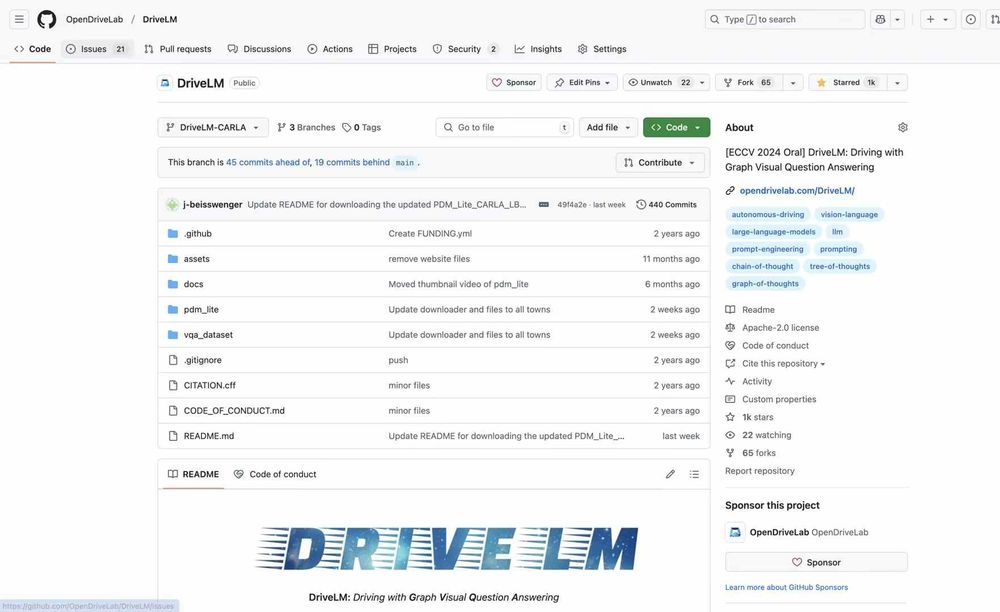

🚀 A milestone upgrade on the codebase of the #CVPR2023 best paper UniAD.

👉 Check out this branch github.com/OpenDriveLab..., and we will get you more details soon

Visit the challenge website: opendrivelab.com/challenge2025

And more on #CVPR2025: opendrivelab.com/cvpr2025

GitHub:

github.com/OpenDriveLab...

HuggingFace:

huggingface.co/agibot-world

1. Benchmark & Evaluation & Metrics

2. Data collection (especially tele-op)

3. Policy network architecture & training recipe.
