Software engineer at Microsoft, opinions here are my own

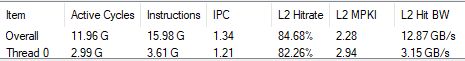

Good start on AMD. I save ~4 ns on scalar accesses and ~12 ns on vector accesses

There's just not enough computing power available to get a good sample count while maintaining real-time performance. It's like setting ISO 102400 on a DSLR
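
A rough illustration of why a low sample count looks like a high-ISO photo, assuming the post is about Monte Carlo style sampling (the post does not say so explicitly). The sketch averages random samples per "pixel" and measures how much the result varies between runs; `estimate_noise` and its parameters are hypothetical names, and a uniform random value stands in for a real radiance sample.

```python
import random
import statistics

def estimate_noise(samples_per_pixel, trials=500, seed=0):
    """Std. deviation of a pixel's averaged value across repeated renders."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        # One "render" of the pixel: average a handful of random samples.
        samples = [rng.random() for _ in range(samples_per_pixel)]
        estimates.append(statistics.fmean(samples))
    return statistics.stdev(estimates)

if __name__ == "__main__":
    for spp in (1, 4, 16, 64, 256):
        print(f"{spp:4d} samples/pixel -> noise {estimate_noise(spp):.4f}")
```

Noise in this kind of estimate falls roughly with the square root of the sample count, so halving it costs about four times the work.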

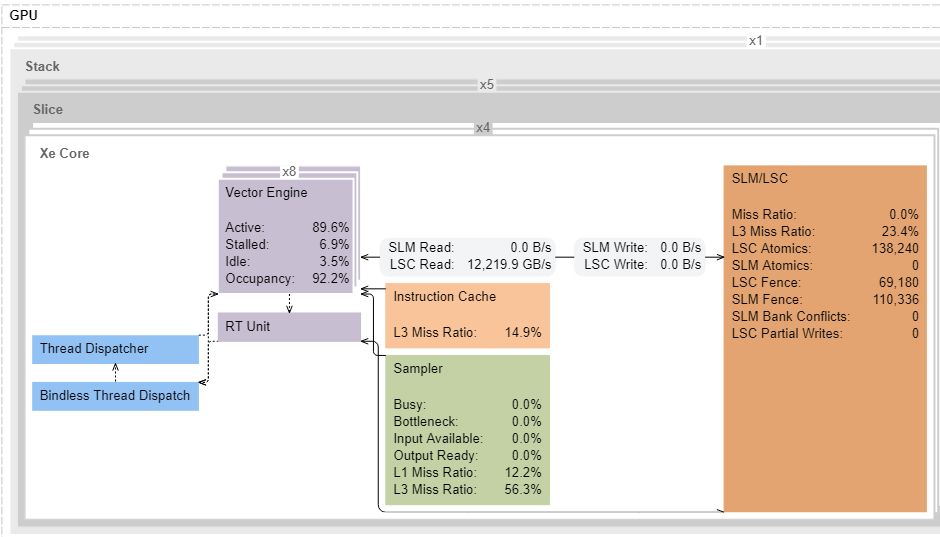

12.2 TB/s of L1 bandwidth, or ~214 bytes per Xe Core cycle

Theoretical is probably 256B/cycle. But close enough for now
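
A quick sanity check on those two figures, as a sketch using only the numbers quoted in the post. It assumes decimal terabytes and treats the 256 B/cycle peak as the post's guess, not a documented spec. The Xe Core count and clock speed are not stated, so only their product can be backed out.

```python
# Figures quoted in the post.
measured_bw_bytes_per_s = 12.2e12    # 12.2 TB/s, assuming decimal TB
measured_bytes_per_cycle = 214       # per Xe Core cycle
assumed_peak_bytes_per_cycle = 256   # the post's guess, not a confirmed spec

def implied_core_cycles_per_s(bandwidth, bytes_per_cycle):
    """Aggregate Xe Core cycles per second (core count x clock)."""
    return bandwidth / bytes_per_cycle

def efficiency(measured, peak):
    """Fraction of the assumed theoretical peak actually reached."""
    return measured / peak

if __name__ == "__main__":
    cycles = implied_core_cycles_per_s(measured_bw_bytes_per_s,
                                       measured_bytes_per_cycle)
    eff = efficiency(measured_bytes_per_cycle, assumed_peak_bytes_per_cycle)
    print(f"implied core count x clock: {cycles / 1e9:.1f} G cycles/s")
    print(f"fraction of assumed peak:   {eff:.1%}")
```

That works out to roughly 57 billion Xe Core cycles per second in aggregate, and about 84% of the guessed peak.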

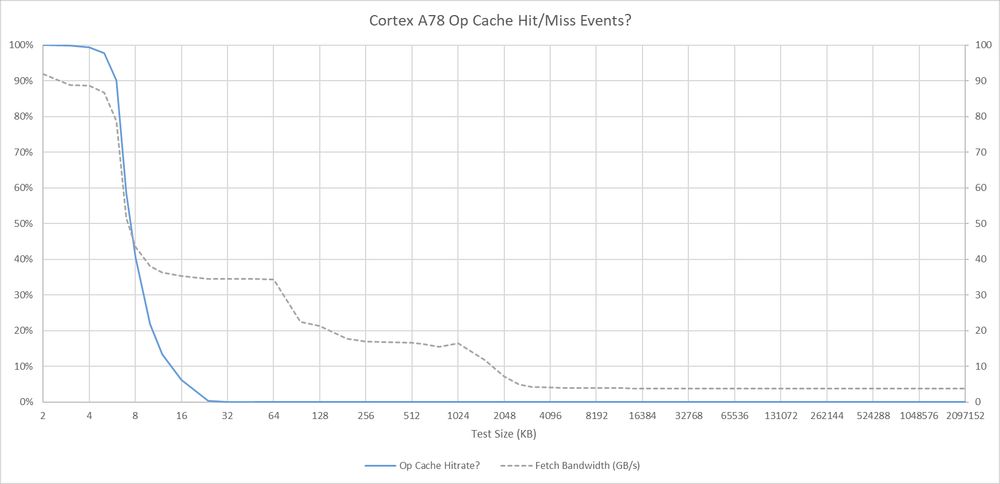

Stock: 600 pts

Op cache disabled: 525 pts
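
For scale, the relative change between those two scores can be worked out as below. This is plain arithmetic on the quoted numbers; the helper name `relative_change` is just for illustration, and the post does not name the benchmark.

```python
def relative_change(baseline, modified):
    """Fractional change of `modified` relative to `baseline`."""
    return (modified - baseline) / baseline

stock_pts = 600          # score quoted for the stock configuration
op_cache_off_pts = 525   # score quoted with the op cache disabled

if __name__ == "__main__":
    change = relative_change(stock_pts, op_cache_off_pts)
    print(f"score change with op cache disabled: {change:+.1%}")
```

So disabling the op cache costs 12.5% of the stock score in this test.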

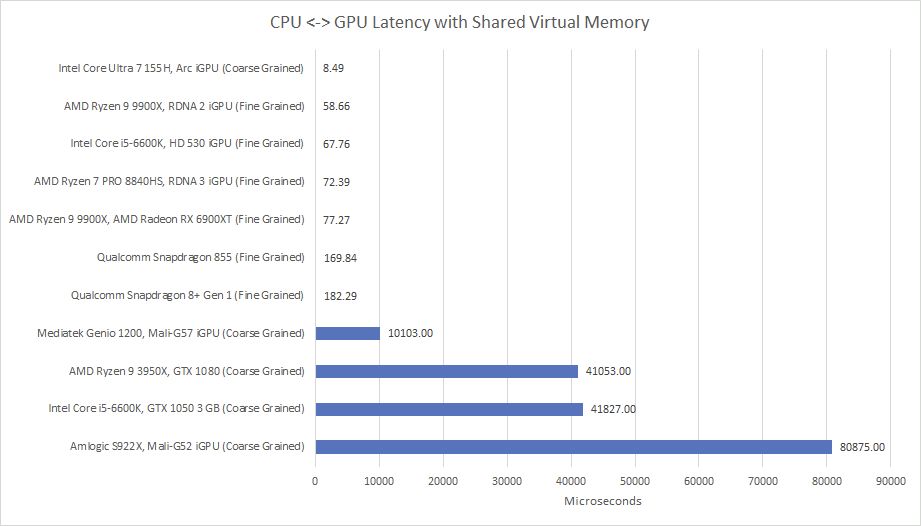

Here I'm testing OpenCL Shared Virtual Memory with a 256 MB buffer and only modifying one 32-bit value in it. Anything in the millisecond range implies the driver had to copy the entire buffer.
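
The reasoning behind the millisecond threshold can be sketched with a back-of-envelope estimate. The link bandwidths below are hypothetical round numbers chosen for illustration, not measurements from the post, and `copy_time_ms` is an illustrative helper that ignores fixed per-transfer latency.

```python
BUFFER_BYTES = 256 * 2**20   # 256 MB buffer, taken here as 256 MiB
MODIFIED_BYTES = 4           # the single 32-bit value that changed

def copy_time_ms(num_bytes, bandwidth_gb_per_s):
    """Time in milliseconds to move num_bytes at the given bandwidth (GB/s)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3

if __name__ == "__main__":
    # Hypothetical host-to-device bandwidths in GB/s (assumptions).
    for bw in (16, 32, 64):
        full = copy_time_ms(BUFFER_BYTES, bw)
        tiny = copy_time_ms(MODIFIED_BYTES, bw)
        print(f"{bw:3d} GB/s: full buffer {full:6.2f} ms, 4 bytes {tiny:.2e} ms")
```

Even at tens of GB/s, moving the whole buffer takes several milliseconds, while moving only the changed value would be dominated by fixed overhead well below a millisecond. A millisecond-scale result is therefore a strong hint that the full buffer was copied.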

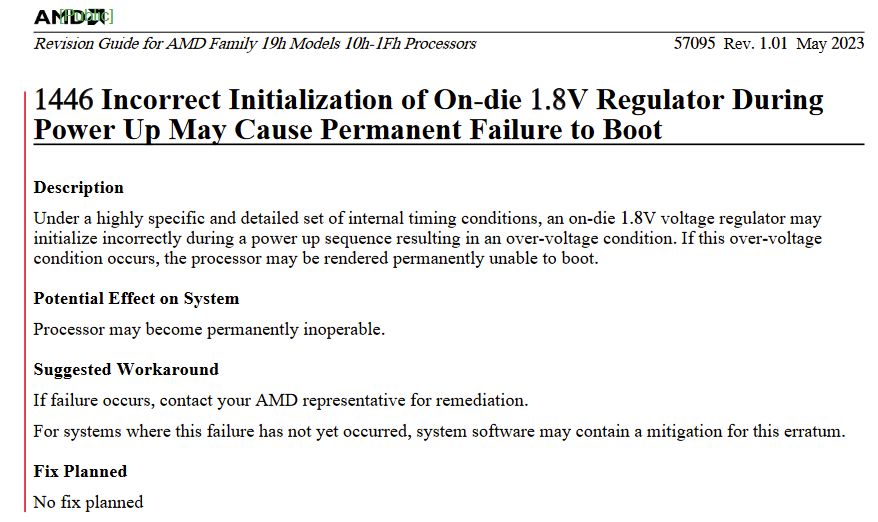

Discussing turning off Zen 4's op cache and its performance consequences, in video format :)

But I wish Intel would hurry up and get LNL/ARL documentation written up :/

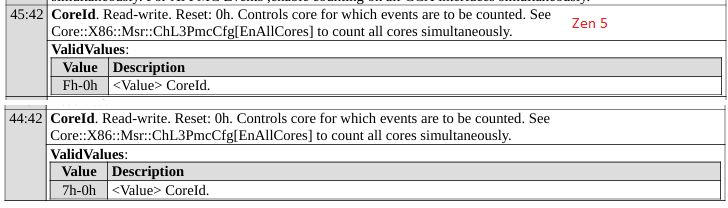

Of course this doesn't mean a 16 core CCX will show up, but it's interesting that AMD's laying the groundwork for it.

Likely doesn't affect performance, as the op cache has more than enough bandwidth to feed downstream stages.
