I also like bouldering, reading books, the Berlin book swap meetup, board games and anime.

SciVid offers cross-domain evaluation of video models in scientific applications, including medical CV, animal behavior, & weather forecasting 🧪🌍📽️🪰🐭🫀🌦️

📝 Check out our paper: arxiv.org/abs/2507.03578

[1/4]🧵

A time-lapse of me painting fan art of Chisato from Lycoris Recoil onto a chopstick

Took me around 3 hours to make them. There is a light firework in the background, and if you hold them closer together, they really form a heart with their hands :-)

#Fanart

#LycorisRecoil

#ChisatoXTakina

#PaintingOnChopsticks

😍🤩😍

I am looking forward to the real ones. Just some small adjustments to go! So happy to have this prototype in my kitchen now as cooking sticks 🤗🤗 Hope they arrive in Brazilia soon, too!

(1/N)

arxiv.org/abs/2412.14294

Causal, 3× fewer parameters, 12× less memory, 5× fewer FLOPs than (non-causal) ViViT, matching / outperforming it on Kinetics & SSv2 action recognition.

Code and checkpoints out soon.

SRT: srt-paper.github.io

OSRT: osrt-paper.github.io

RUST: rust-paper.github.io

DyST: dyst-paper.github.io

MooG: moog-paper.github.io

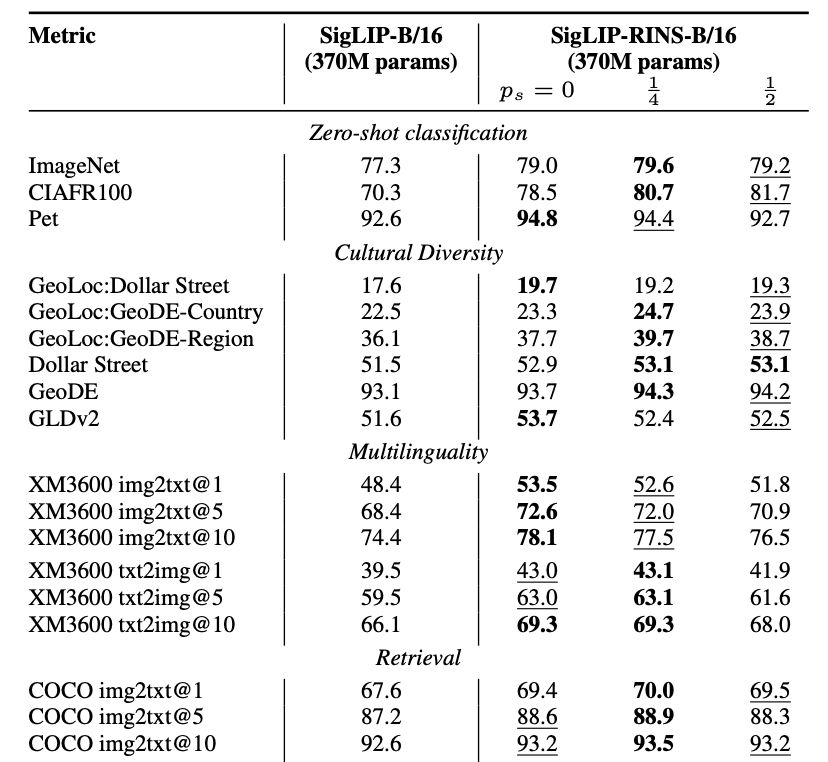

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining for my first Bluesky entry!

🧵 A short and hopefully informative thread:

For science-minded folks, there are some very cool tricks 🚀…

If you use any of these emojis: 👩‍🔬|👩🏻‍🔬|👩🏼‍🔬|👩🏽‍🔬|👩🏾‍🔬|👩🏿‍🔬 your post will be indexed on a Women in STEM feed

If you use 🧪, on a Science feed

If you use 🧠, on a Neuroscience feed

Go get it 🚀🧪🧠👩‍🔬💙🎊
