> https://antoine-moulin.github.io/

We speed up sampling of masked diffusion models by ~2x by using speculative sampling and a hybrid non-causal / causal transformer

arxiv.org/abs/2510.03929

w/ @vdebortoli.bsky.social, Jiaxin Shi, @arnauddoucet.bsky.social
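The post names speculative sampling but does not spell it out. As background only, here is a minimal Python sketch of the standard token-level speculative sampling correction. It draws a draft token x from a cheap distribution q and keeps it with probability min(1, p(x)/q(x)). Otherwise it resamples from the residual max(0, p − q), which makes every output an exact sample from the target p. This is the generic rule, not the paper's masked-diffusion scheme or its hybrid transformer. The names `speculative_step` and `sample` and the toy distributions are hypothetical.

```python
import random

# Generic speculative sampling rule (assumption: illustrative only,
# not the paper's masked-diffusion construction).

def sample(weights, rng):
    """Draw index i with probability proportional to weights[i]."""
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]

def speculative_step(p, q, rng):
    """Return (token, accepted), where token is an exact sample from p."""
    # Draft: propose a token from the cheap distribution q.
    x = sample(q, rng)
    # Accept with probability min(1, p(x) / q(x)); q[x] > 0 since x was drawn from q.
    if rng.random() < min(1.0, p[x] / q[x]):
        return x, True
    # Reject: resample from the residual max(0, p - q).
    # random.choices normalizes the weights, so no explicit division is needed.
    residual = [max(pi - qi, 0.0) for pi, qi in zip(p, q)]
    return sample(residual, rng), False

if __name__ == "__main__":
    rng = random.Random(0)
    p = [0.5, 0.3, 0.2]  # hypothetical target (expensive) model
    q = [0.2, 0.3, 0.5]  # hypothetical draft (cheap) model
    n = 20000
    counts = [0, 0, 0]
    for _ in range(n):
        x, _ = speculative_step(p, q, rng)
        counts[x] += 1
    print([c / n for c in counts])  # empirical frequencies, close to p
```

A draft is accepted with probability Σ_x min(p(x), q(x)), which is 0.7 for the toy distributions. The savings therefore depend on how closely the cheap draft tracks the target.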

We're a research / engineering team working together in industries like health and logistics to ship ML tools that drastically improve productivity. If you're interested in ML and RL work that matters, come join us 😀

Huge thanks to my co-authors @rflamary.bsky.social and Bertrand Thirion!

arxiv.org/abs/2506.12025

(1/5)

Don’t miss David and our amazing lineup of speakers—submit your latest RL work to our NeurIPS workshop.

📅 Extended deadline: Sept 2 (AoE)

We look forward to receiving your submissions!

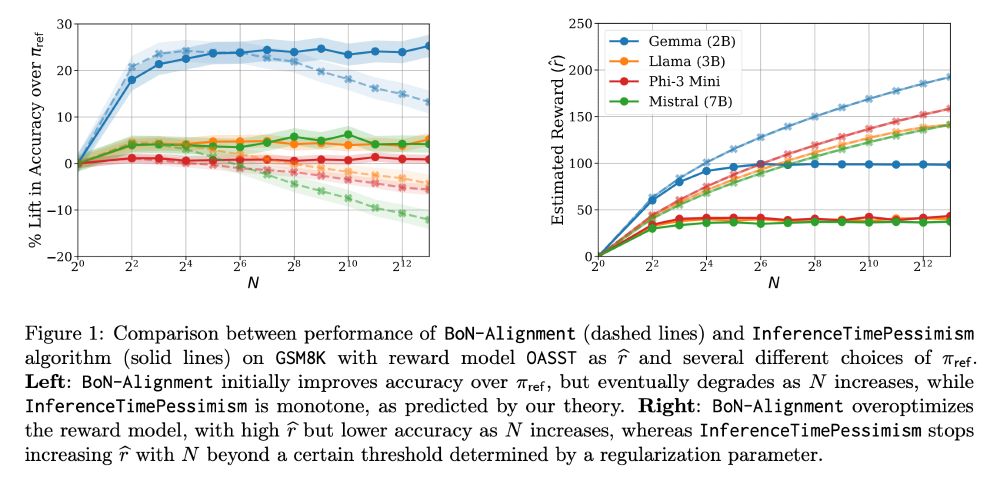

when the expert is hard to represent but the environment is simple, estimating a Q-value rather than the expert directly may be beneficial. lots of open questions left though!

“Nonlinear Meta-learning Can Guarantee Faster Rates”

arxiv.org/abs/2307.10870

When does meta-learning work? Spoiler: generalise to new tasks by overfitting on your training tasks!

Here is why:

🧵👇

- learn distances between Markov chains

- extract "encoder-decoder" pairs for representation learning

- with sample- and computational-complexity guarantees

read on for some quick details...

1/n

Diffusion models are _fast_, and hold immense promise to challenge autoregressive models as the de facto standard for language modeling.

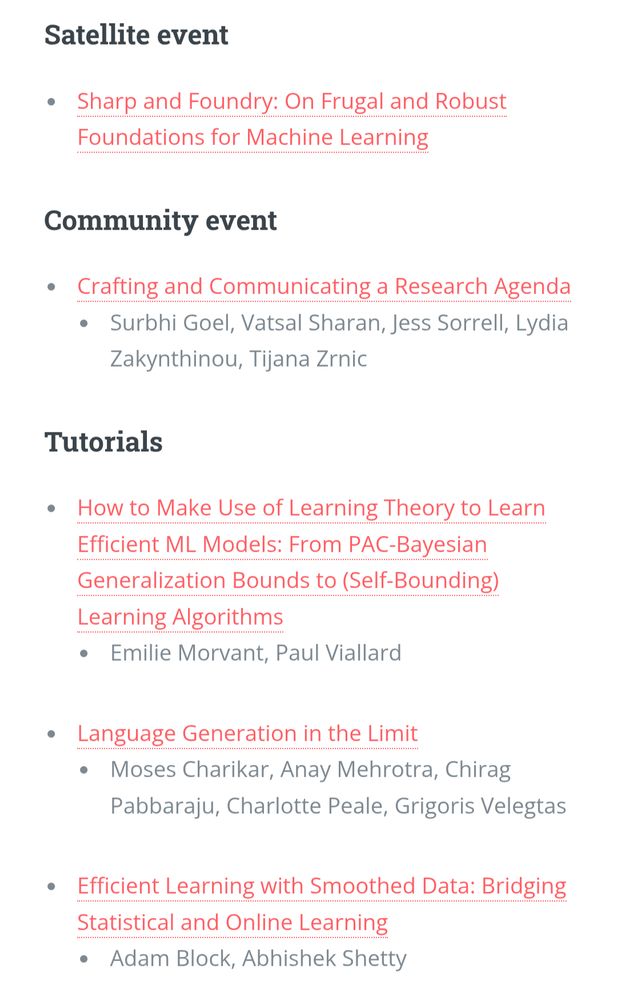

Exciting topics and an impressive slate of speakers and events on June 30! The workshops have calls for contributions (⏰ May 16, 19, and 25): check them out!

learningtheory.org/colt2025/ind...

📝 Soliciting abstracts/posters exploring theoretical & practical aspects of post-training and RL with language models!

🗓️ Deadline: May 19, 2025

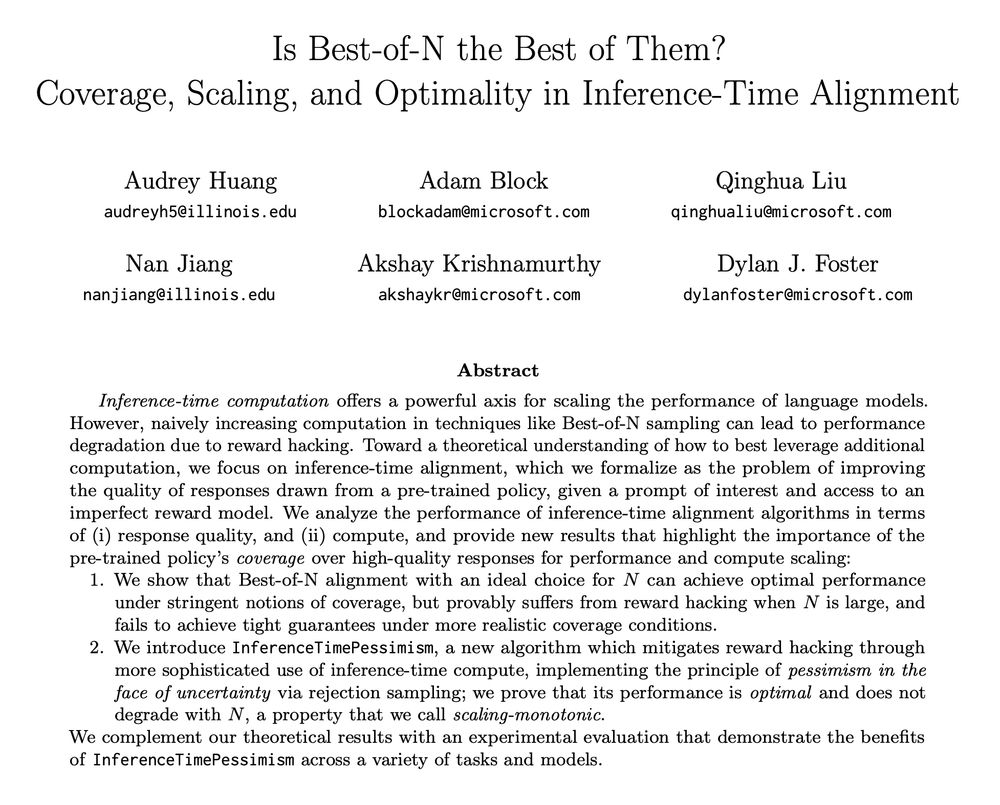

New paper (appearing at ICML) led by the amazing Audrey Huang (ahahaudrey.bsky.social) with Adam Block, Qinghua Liu, Nan Jiang, and Akshay Krishnamurthy (akshaykr.bsky.social).

1/11

at #AISTATS2025

A spectral method for causal effect estimation with hidden confounders, for instrumental variable and proxy causal learning

arxiv.org/abs/2407.10448

Haotian Sun, @antoine-mln.bsky.social, Tongzheng Ren, Bo Dai

04/29: Max Simchowitz (CMU)

05/06: Jeongyeol Kwon (Univ. of Wisconsin-Madison)

05/20: Sikata Sengupta & Marcel Hussing (Univ. of Pennsylvania)

05/27: Dhruv Rohatgi (MIT)

06/03: David Janz (Univ. of Oxford)

06/10: Nneka Okolo (MIT)

"CONFIDENCE SEQUENCES FOR GENERALIZED LINEAR MODELS VIA REGRET ANALYSIS"

TL;DR: we reduce the problem of designing tight confidence sets for statistical models to proving the existence of small regret bounds in an online prediction game

read on for a quick thread 👀👀👀

1/
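As a rough illustration of the TL;DR, here is a sketch under simplifying assumptions, not the paper's GLM construction. For a fixed parameter θ, let L_t(θ) be the cumulative log loss after t observations. The ratio of any online forecaster's predictive likelihood to the true likelihood is a nonnegative martingale with mean 1. Ville's inequality then gives, with probability at least 1 − δ simultaneously for all t, L_t(θ*) ≤ L_t(forecaster) + log(1/δ) ≤ min_θ L_t(θ) + R_t + log(1/δ), where R_t is any regret bound for the forecaster. Only the regret bound enters the set, so the forecaster never has to be run. The Bernoulli model, the Laplace (uniform-prior mixture) forecaster with regret at most log(t + 1), the grid over θ, and the function names are all assumptions for illustration.

```python
import math

# Regret-to-confidence-set sketch for a Bernoulli model
# (assumption: illustrative only, not the paper's GLM method).

def xlogy(x, y):
    """x * log(y), with the convention 0 * log(0) = 0."""
    return 0.0 if x == 0 else x * math.log(y)

def log_loss(theta, ones, zeros):
    """Cumulative Bernoulli log loss L_t(theta) for fixed theta in (0, 1)."""
    return -(ones * math.log(theta) + zeros * math.log(1.0 - theta))

def confidence_set(ys, delta, grid):
    """Grid points theta with L_t(theta) - min L_t <= R_t + log(1/delta).

    R_t = log(t + 1) is the regret bound of the Laplace mixture forecaster
    against any fixed theta; it is the only property of the learner used.
    """
    t = len(ys)
    if t == 0:
        return list(grid)  # no data yet: every theta is plausible
    ones = sum(ys)
    zeros = t - ones
    # Minimum cumulative loss, attained at the maximum-likelihood estimate ones / t.
    min_loss = -(xlogy(ones, ones / t) + xlogy(zeros, zeros / t))
    radius = math.log(t + 1) + math.log(1.0 / delta)
    return [th for th in grid if log_loss(th, ones, zeros) - min_loss <= radius]

if __name__ == "__main__":
    grid = [i / 100 for i in range(1, 100)]  # hypothetical grid over (0, 1)
    cs = confidence_set([1, 0] * 50, 0.05, grid)
    print(min(cs), max(cs))  # an interval around the empirical mean 0.5
```

Because the bound holds uniformly over t, the set can be recomputed after every observation, and it shrinks as data accumulates.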