The paper is the product. Algorithms be damned.

rl-conference.cc/call_for_soc...

Feel free to reach out if you're attending RLDM!

Also, feel free to reach out to chat about continual, meta and/or reinforcement learning.

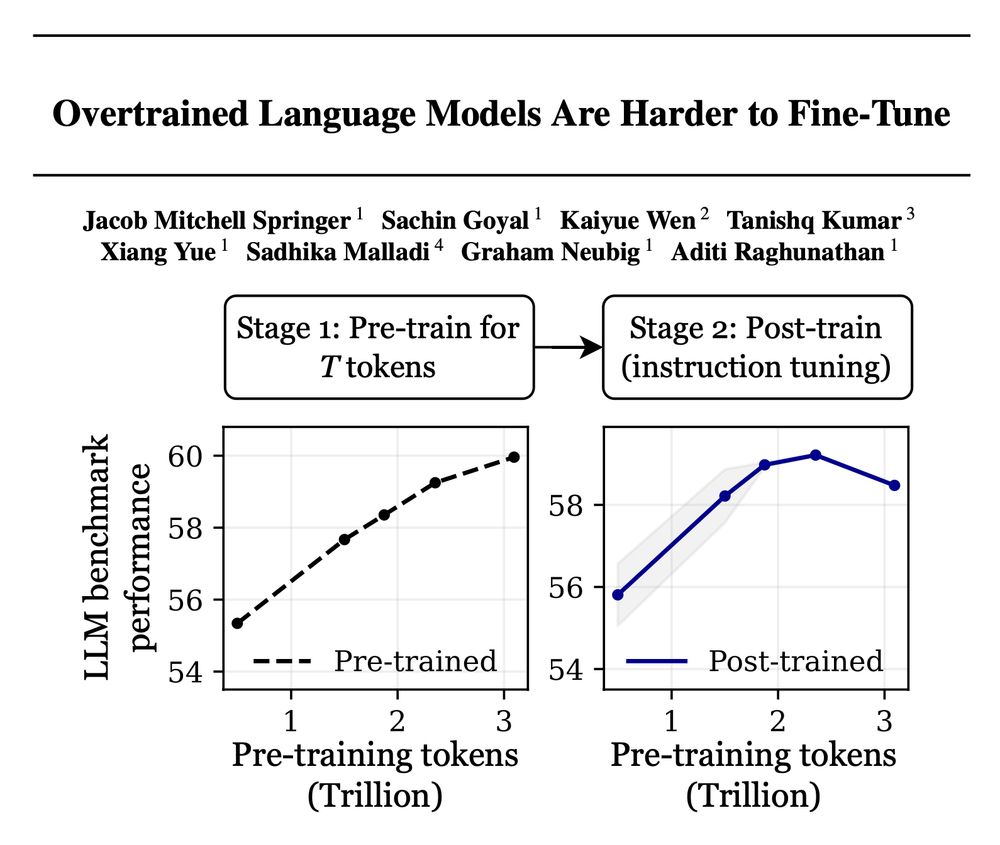

False! Scaling language models by adding more pre-training data can decrease your performance after post-training!

Introducing "catastrophic overtraining." 🥁🧵👇

arxiv.org/abs/2503.19206

1/10

To ensure we are open about it, we made those instructions public:

rl-conference.cc/reviewinstru...

Abstract deadline January 15: rldm.org/submit
