📍 Edmonton, Canada 🇨🇦

🔗 https://webdocs.cs.ualberta.ca/~machado/

🗓️ Joined November, 2024

www.canada.ca/en/innovatio...

@ualberta.bsky.social + Amii.ca Fellow! 🥳 Recruiting students to develop theories of cognition in natural & artificial systems 🤖💭🧠. Find me at #NeurIPS2025 workshops (speaking coginterp.github.io/neurips2025 & organising @dataonbrainmind.bsky.social)

- CS Theory: tinyurl.com/zrh9mk69

- Network/Cyber Security: tinyurl.com/renxazzy

- Robotics/CV/Graphics: tinyurl.com/ypcsfbff

I can attest to how awesome our department and @amiithinks.bsky.social are!

(Official job posting coming soon.)

Huge congratulations, Hon Tik (Rick) Tse and Siddarth Chandrasekar.

My PhD student, Hon Tik Tse, led this work, and my MSc student, Siddarth Chandrasekar, assisted us.

arxiv.org/abs/2505.16217

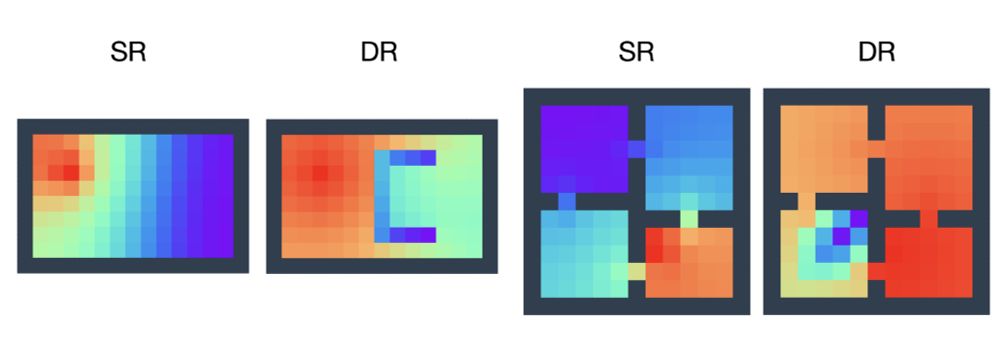

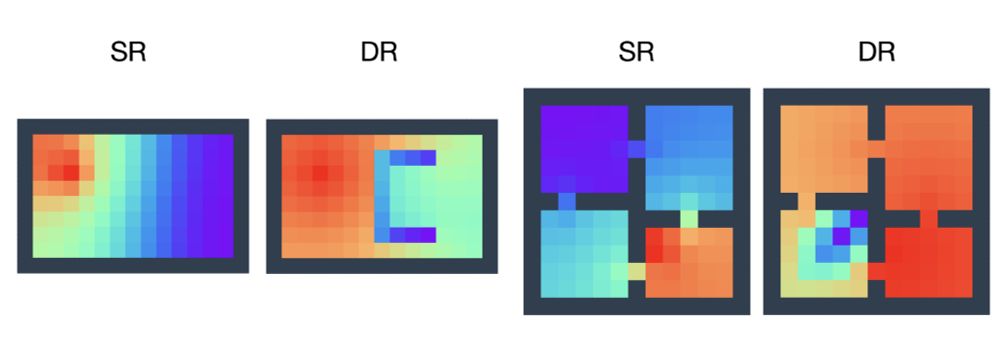

Basically, it's the SR with rewards. See below 👇

rl-conference.cc/RLC2025Award...

*Invited Talks at Workshops:*

Tue 10:00: The Causal RL Workshop sites.google.com/uci.edu/crlw...

Tue 14:30: Inductive Biases in RL (IBRL) Workshop

sites.google.com/view/ibrl-wo...

Tue 15:00: Panel Discussion at IBRL Workshop

@rl-conference.bsky.social is my favourite conference, and no, it is not because I am one of its organizers this year.

If anything, I think this is a very useful resource for anyone interested in this field!

But how do we discover such temporal structure?

Hierarchical RL provides a natural formalism, yet many questions remain open.

Here's our overview of the field🧵

Repo: github.com/machado-rese...

Website: agarcl.github.io

Preprint: arxiv.org/abs/2505.18347

Details below 👇

I thoroughly enjoyed the RLC workshops last year, and this year, they seem to be as good as expected! That's very different from what I have been seeing in other conferences, just saying ...

rl-conference.cc/accepted_wor...

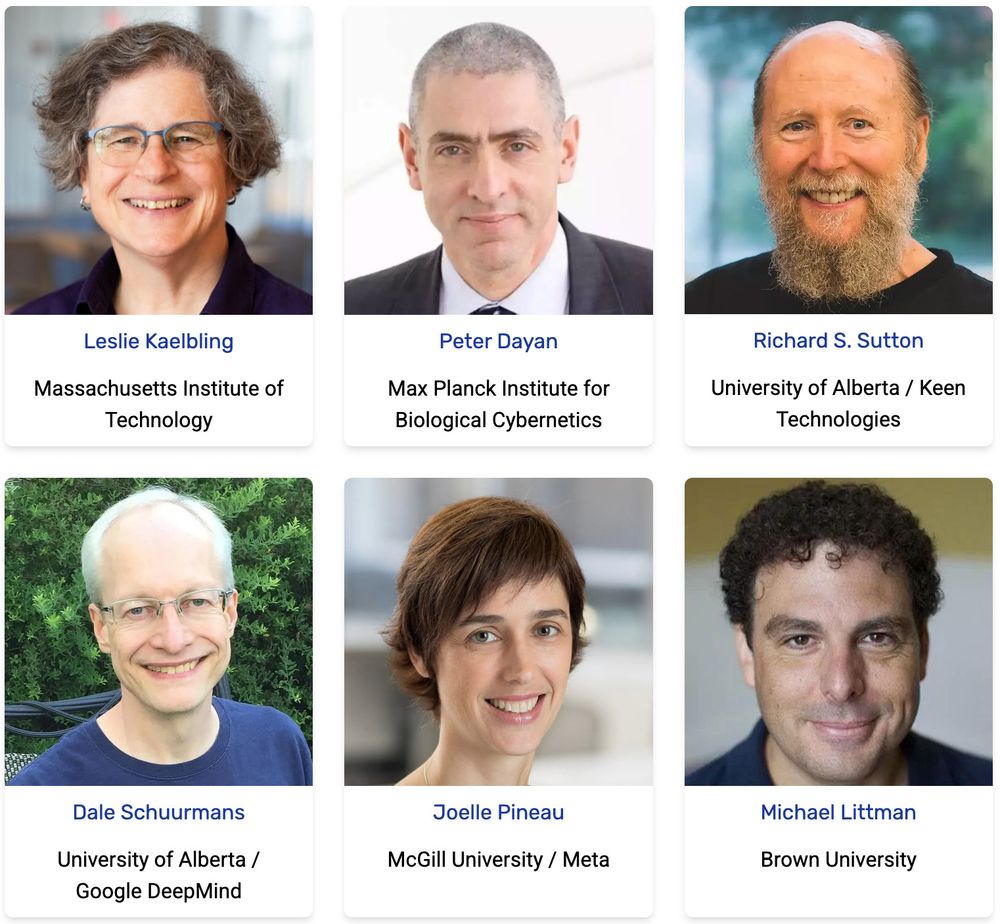

Like last year, we have a fantastic speaker lineup, including a Turing Award Winner 😉

rl-conference.cc

1. Sunday 11:15 for the New Faculty Highlights program

aaai.org/conference/a...

2. Tuesday 09:00 for the Bridging the Gap Between AI Planning and RL Workshop

prl-theworkshop.github.io/prl2025-aaai/

Let me know if you want to meet!

@rl-conference.bsky.social

And within reason, abstracts, author order/list and titles can be changed after the abstract deadline.

We present MaestroMotif, a method for skill design that produces highly capable and steerable hierarchical agents.

Paper: arxiv.org/abs/2412.08542

Code: github.com/mklissa/maestromotif

We need to fill in all the roles, and the higher the role, the fewer people we need, so if you are not upgraded, don't hate us 😅

And please help us spread the word!