https://schaul.site44.com/ 🇱🇺

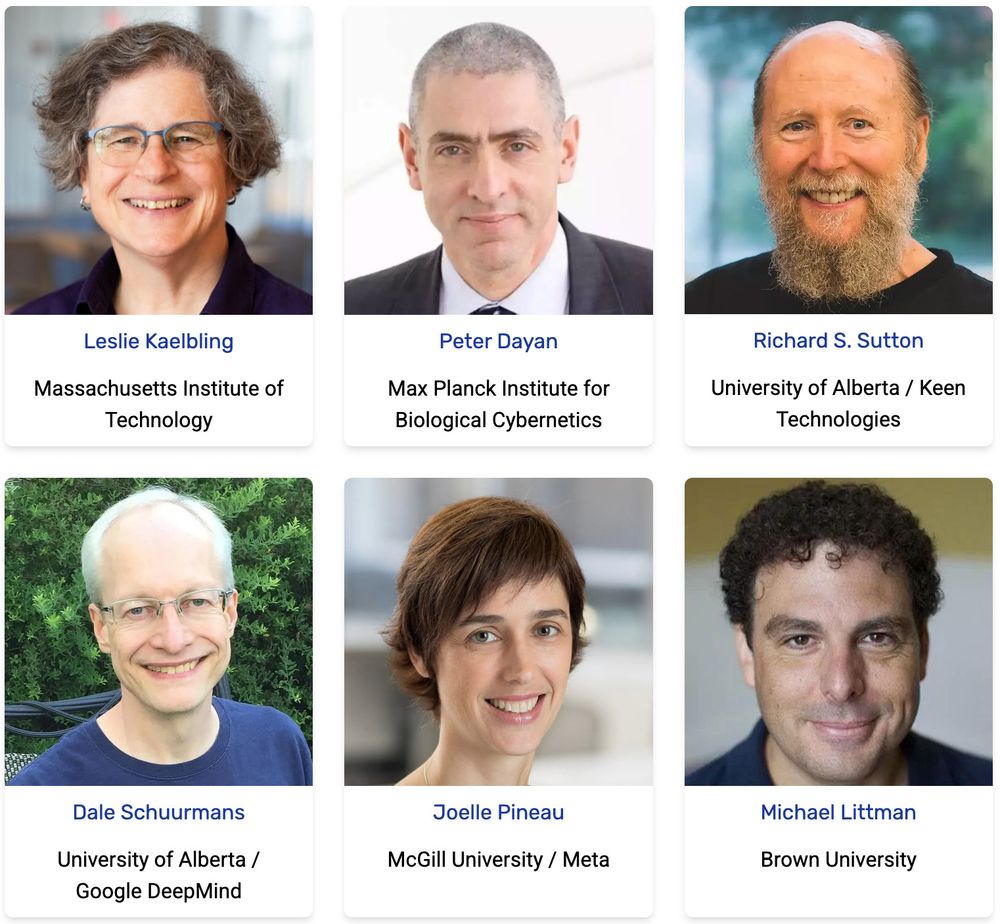

RLC is coming to Montreal, Quebec, in the summer: Aug 16–19, 2026!

Call for Papers is up now:

Abstract: Mar 1 (AOE)

Submission: Mar 5 (AOE)

Excited to see what you’ve been up to - Submit your best work!

rl-conference.cc/callforpaper...

Please share widely!

Does this make LLM training more efficient? Yes!

Would you like to know exactly how? arxiv.org/pdf/2505.17895

(come see us at NeurIPS too!)

Find out! Keynotes of the RL Conference are online:

www.youtube.com/playlist?lis...

Wanting vs liking, Agent factories, Theoretical limit of LLMs, Pluralist value, RL teachers, Knowledge flywheels

(guess who talked about which!)

Reach out if you’d like to chat more!

job-boards.greenhouse.io/deepmind/job...

Please spread the word!

The method we introduce in this paper is efficient because examples are chosen for their complementarity, leading to much steeper inference-time scaling! 🧪

arxiv.org/abs/2502.18487

🧵

@rl-conference.bsky.social

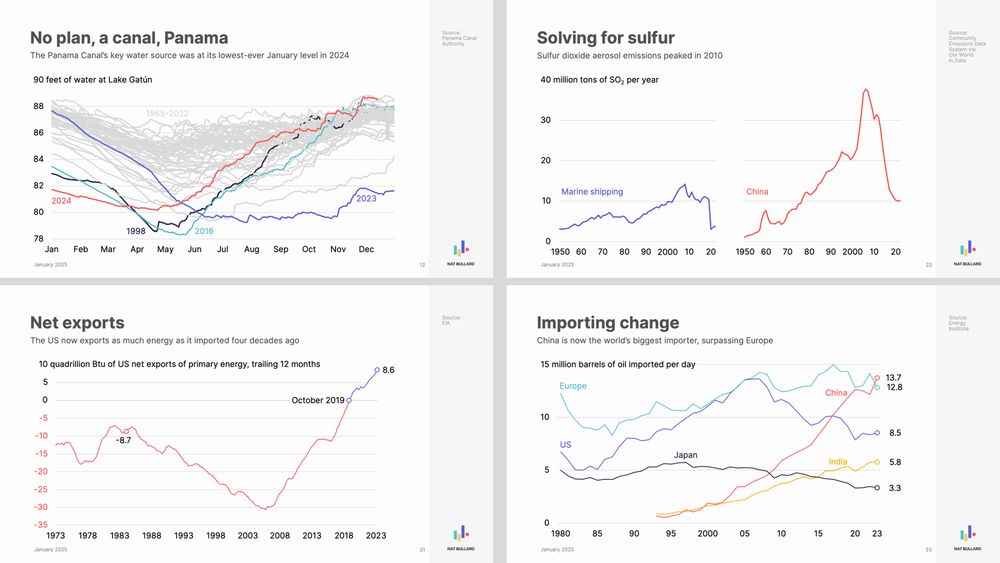

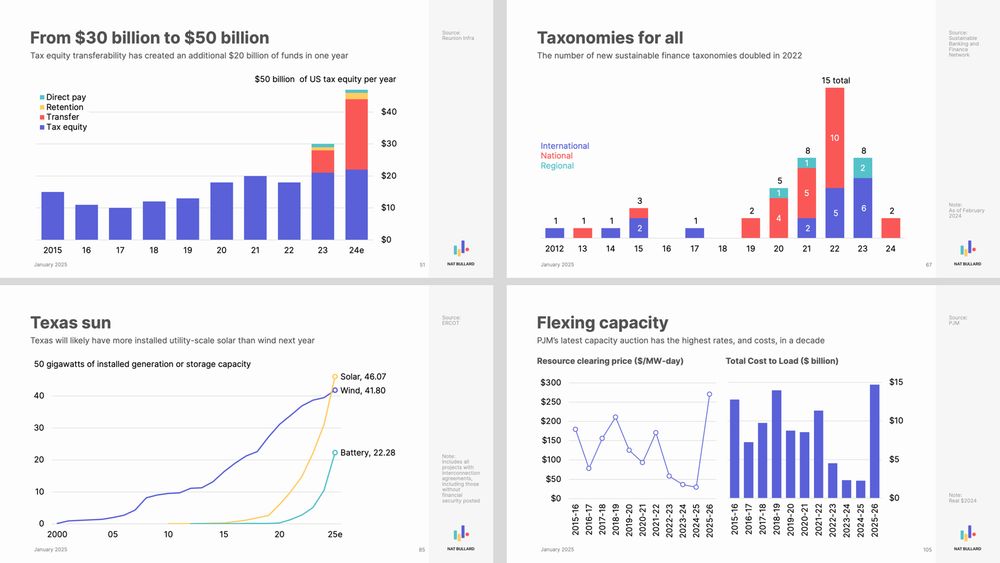

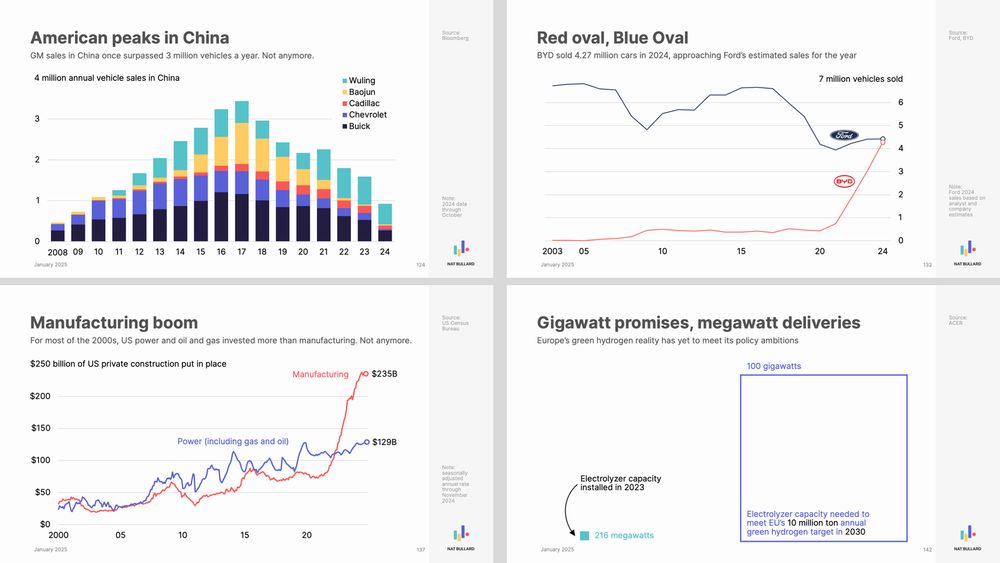

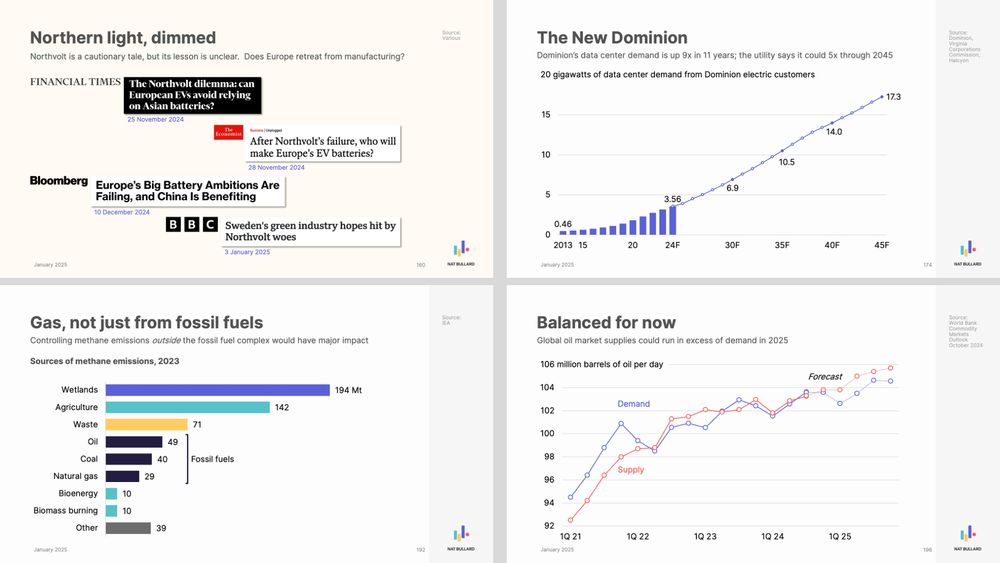

200 slides, covering everything from water levels in Lake Gatún to sulfur dioxide emissions to ESG fund flows to Chinese auto exports to artificial intelligence. www.nathanielbullard.com/presentations

www.youtube.com/watch?v=pkpJ...

My talk is today at 11:40am, West Meeting Room 220-222, #NeurIPS2024

language-gamification.github.io/schedule/

Thoughts about this and more here:

arxiv.org/abs/2411.16905

Please help us spread the word.

rl-conference.cc/callforpaper...

Looking forward to seeing you all there!

@rl-conference.bsky.social

#reinforcementlearning

I just wrote the NeurIPS board requesting them to consider joining Bluesky.

It took about 2 minutes. I invite you to do the same. neurips.cc/Help/Contact

If they changed the name of the conference for the greater good, there's a chance!

Please repost!