📍 Edmonton, Canada 🇨🇦

🔗 https://webdocs.cs.ualberta.ca/~machado/

🗓️ Joined November 2024

- CS Theory: tinyurl.com/zrh9mk69

- Network/Cyber Security: tinyurl.com/renxazzy

- Robotics/CV/Graphics: tinyurl.com/ypcsfbff

@rl-conference.bsky.social is my favourite conference, and no, it is not because I am one of its organizers this year.

Repo: github.com/machado-rese...

Website: agarcl.github.io

Preprint: arxiv.org/abs/2505.18347

It is perhaps no surprise that the classic algorithms we considered couldn't really make much progress in the full game.

arxiv.org/abs/2303.07507

Repo: github.com/machado-rese...

Website: agarcl.github.io

Preprint: arxiv.org/abs/2505.18347

Details below 👇

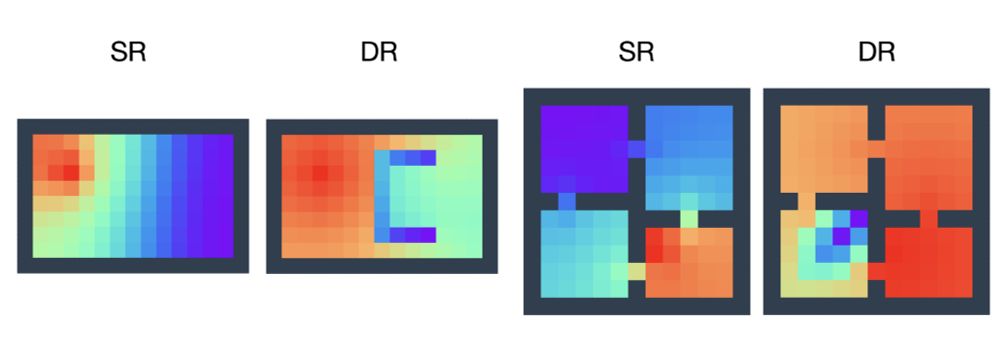

It captures the underlying dynamics of the environment, but it ignores the reward. What if it didn't ignore the reward function?

My PhD student, Hon Tik Tse, led this work, and my MSc student, Siddarth Chandrasekar, assisted us.

arxiv.org/abs/2505.16217

Basically, it's the SR with rewards. See below 👇

AI/ML/DL Theory: apps.ualberta.ca/careers/post...

AI + SWE: apps.ualberta.ca/careers/post...

Systems: apps.ualberta.ca/careers/post...
