https://akshitab.github.io/

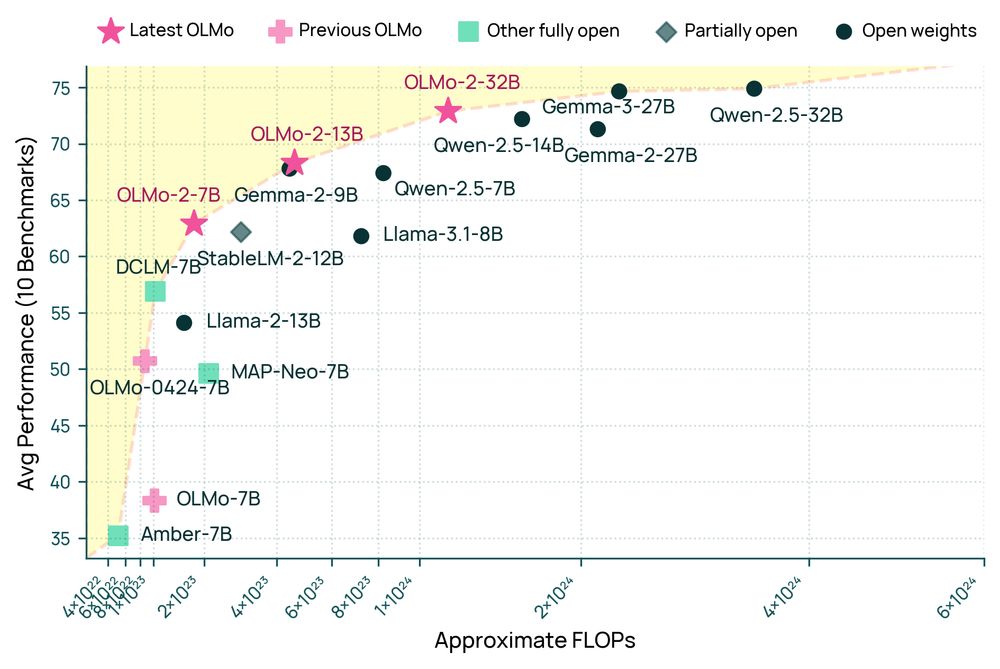

Best fully open 32B reasoning model & best 32B base model. 🧵

Read more about CAIA here: buff.ly/ACpxLNT

Comparable to the best open-weight models, but at a fraction of the training compute. When you have a good recipe, ✨ magical things happen when you scale it up!

We are launching an iOS app that runs OLMoE locally 📱 We're going to see more on-device AI in 2025, and we wanted to offer a simple way to prototype with it

App: apps.apple.com/us/app/ai2-o...

Code: github.com/allenai/OLMo...

Blog: allenai.org/blog/olmoe-app

As phones get faster, more AI will happen on device. With OLMoE, researchers, developers, and users can get a feel for this future: fully private LLMs, available anytime.

Learn more from @soldaini.net👇 youtu.be/rEK_FZE5rqQ

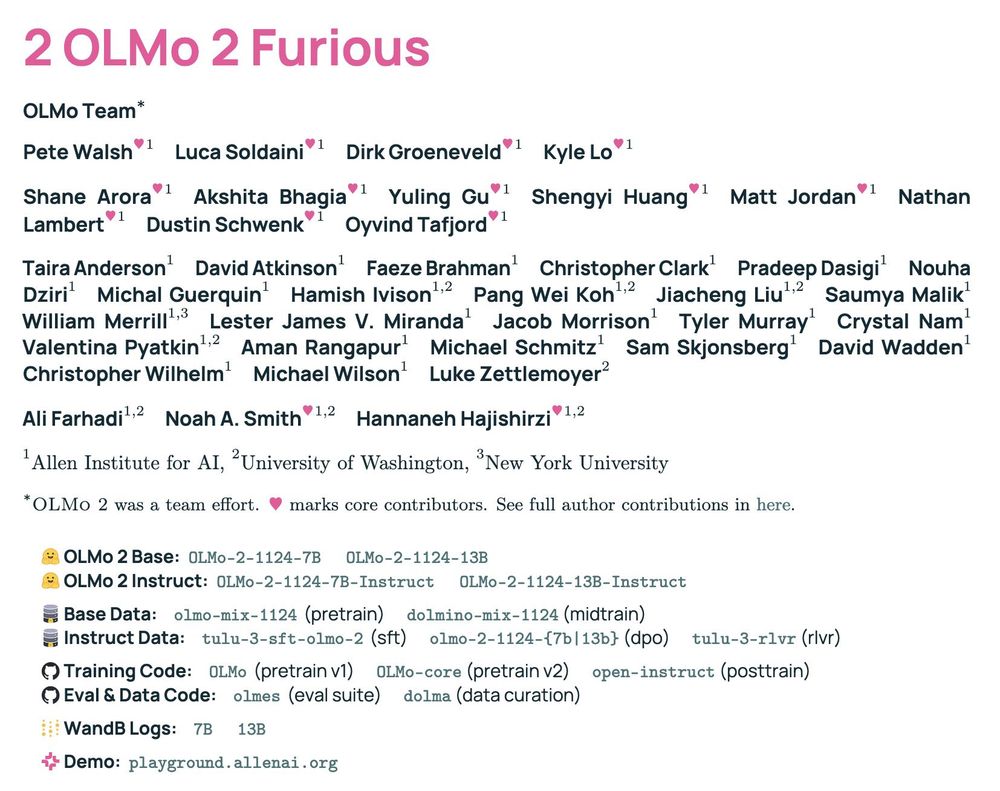

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵
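
For readers new to two of the terms in that list, here is a minimal sketch of a linear learning-rate anneal and a checkpoint "soup" (elementwise weight averaging). It is an illustration only, not the OLMo 2 training code: the function names, the toy checkpoints, and the choice of a linear schedule are all assumptions made for this example.

```python
def linear_anneal(step, total_steps, peak_lr):
    """Learning rate decayed linearly from peak_lr at step 0 to 0 at total_steps."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return peak_lr * (1.0 - frac)


def soup(checkpoints):
    """Average parameters elementwise across checkpoints that share keys and shapes."""
    n = len(checkpoints)
    return {
        key: [sum(vals) / n for vals in zip(*(ck[key] for ck in checkpoints))]
        for key in checkpoints[0]
    }


# Toy checkpoints (hypothetical): two anneal runs that ended at different weights.
run_a = {"w": [1.0, 3.0]}
run_b = {"w": [3.0, 5.0]}
averaged = soup([run_a, run_b])
mid_lr = linear_anneal(step=50, total_steps=100, peak_lr=0.5)
```

Real checkpoints would hold tensors rather than Python lists, but the averaging step has the same shape.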

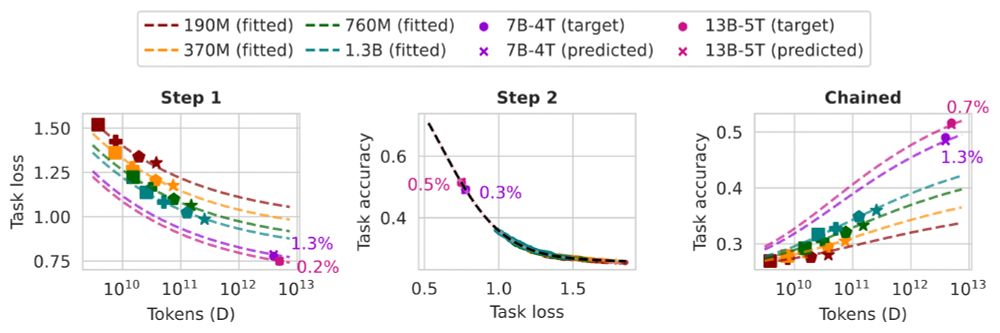

We develop task scaling laws and model ladders, which predict the accuracy on individual tasks by OLMo 2 7B & 13B models within 2 points of absolute error. The cost is 1% of the compute used to pretrain them.
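
To make the idea concrete, here is a rough sketch of one common shape for this kind of prediction: fit a power law to task loss measured on small models, extrapolate to a larger compute budget, then map loss to accuracy with a sigmoid. This is a simplified illustration and not the method from the paper. The functional forms, the function names, and every number below are assumptions, and the "measurements" are synthetic.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of y = a * x**(-b), done as a straight line in log-log space."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(xs)
    mx, my = sum(lx) / n, sum(ly) / n
    slope = sum((u - mx) * (v - my) for u, v in zip(lx, ly)) / sum(
        (u - mx) ** 2 for u in lx
    )
    intercept = my - slope * mx
    return math.exp(intercept), -slope


def predict_loss(a, b, x):
    """Evaluate the fitted power law at compute budget x."""
    return a * x ** (-b)


def loss_to_accuracy(loss, floor, ceiling, midpoint, steepness):
    """Sigmoid map: accuracy rises from floor toward ceiling as loss falls."""
    return floor + (ceiling - floor) / (1.0 + math.exp(steepness * (loss - midpoint)))


# Synthetic ladder of small runs (hypothetical compute budgets in FLOPs).
small_compute = [1e18, 1e19, 1e20, 1e21]
small_losses = [predict_loss(10.0, 0.1, c) for c in small_compute]

a, b = fit_power_law(small_compute, small_losses)
target_loss = predict_loss(a, b, 1e23)  # extrapolate to a much larger run
target_acc = loss_to_accuracy(
    target_loss, floor=0.25, ceiling=1.0, midpoint=0.1, steepness=30.0
)
```

The appeal of a ladder is that the small runs are cheap, so the fit costs a small fraction of the compute of the run being predicted.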

OLMo accelerates the study of LMs. We release *everything*, from the toolkit for creating data (Dolma) to the training and inference code

blog blog.allenai.org/olmo-open-la...

olmo paper allenai.org/olmo/olmo-pa...

dolma paper allenai.org/olmo/dolma-p...

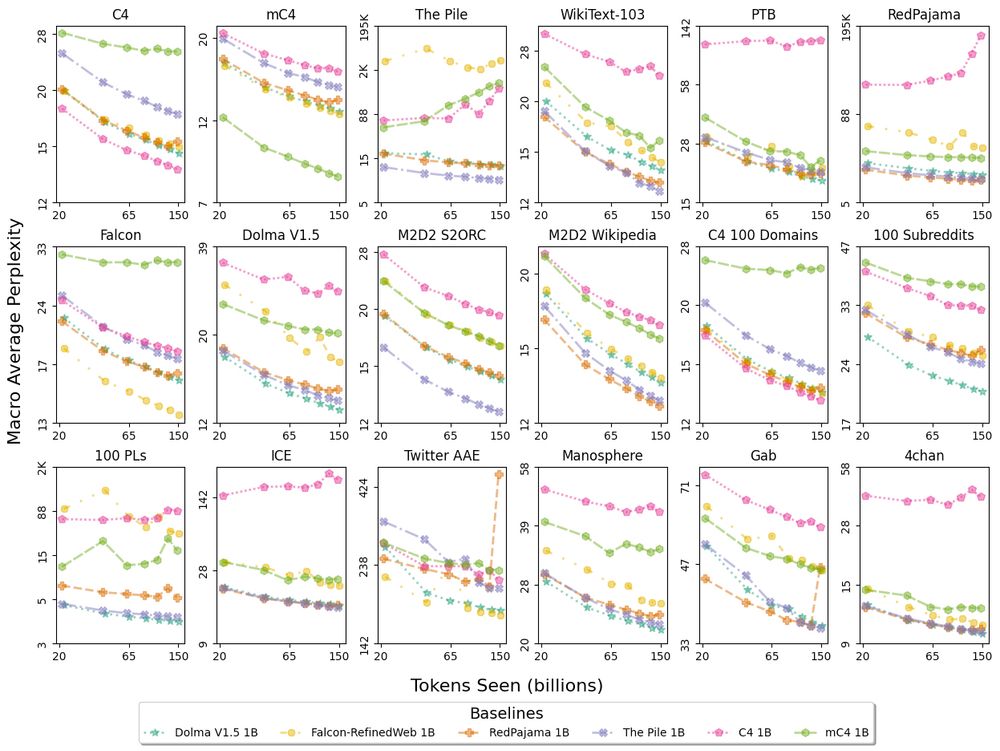

📈 Evaluating perplexity on just one corpus like C4 doesn't tell the whole story 📉

✨📃✨

We introduce Paloma, a benchmark of 585 domains from NY Times to r/depression on Reddit.
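
A small sketch of why a single pooled number can hide differences between domains. It assumes you already have per-token natural-log probabilities from a model, grouped by domain. The domain names and values are made up for illustration, and this is not Paloma's evaluation code.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp of the negative mean per-token natural-log probability."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def per_domain_perplexity(logprobs_by_domain):
    """Compute perplexity separately for each domain."""
    return {name: perplexity(lps) for name, lps in logprobs_by_domain.items()}


# Hypothetical per-token log-probs for two domains of different sizes.
logprobs_by_domain = {
    "news": [-2.0, -2.0, -2.0, -2.0],
    "forum": [-4.0, -4.0],
}

by_domain = per_domain_perplexity(logprobs_by_domain)
pooled = perplexity([lp for lps in logprobs_by_domain.values() for lp in lps])
```

Here the pooled value sits between the two domains and is pulled toward the larger one, so a model that does poorly on the smaller domain can still look fine in aggregate.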
