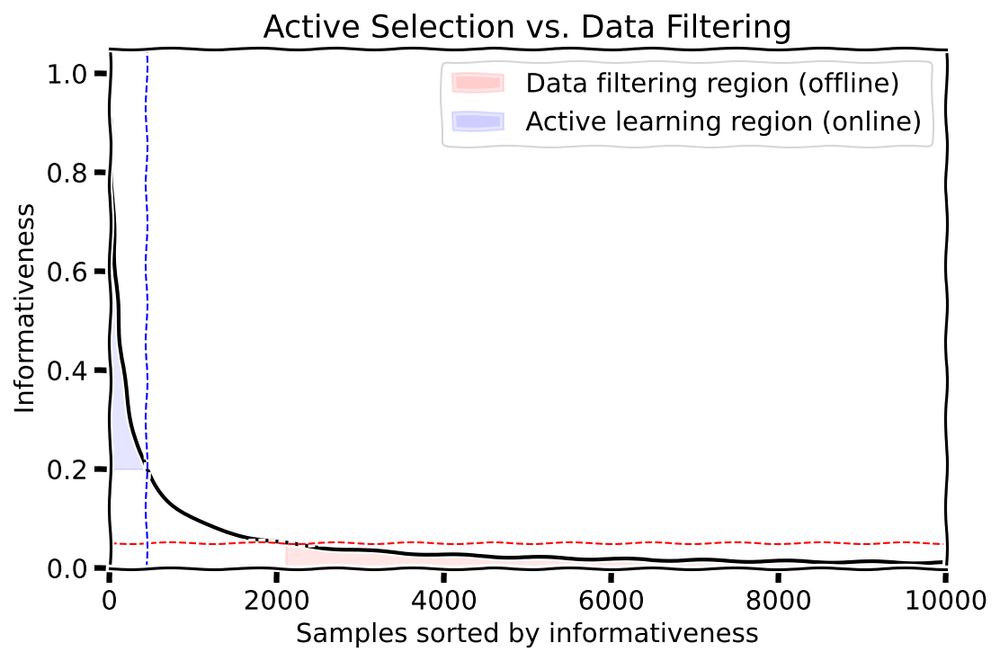

What is the fundamental difference between active learning and data filtering?

Well, obviously, the difference is that:

1/11

Two years ago, we started a series on Diffusion Models that covered everything related to these models in-depth. We decided to write those tutorials covering intuition and the fundamentals because we could not find any high-quality diffusion tutorials then.

x.com/A_K_Nain/sta...

x.com/A_K_Nain/sta...

"Veo has achieved state of the art results in head-to-head comparisons of outputs by human raters over top video generation models"

x.com/A_K_Nain/sta...

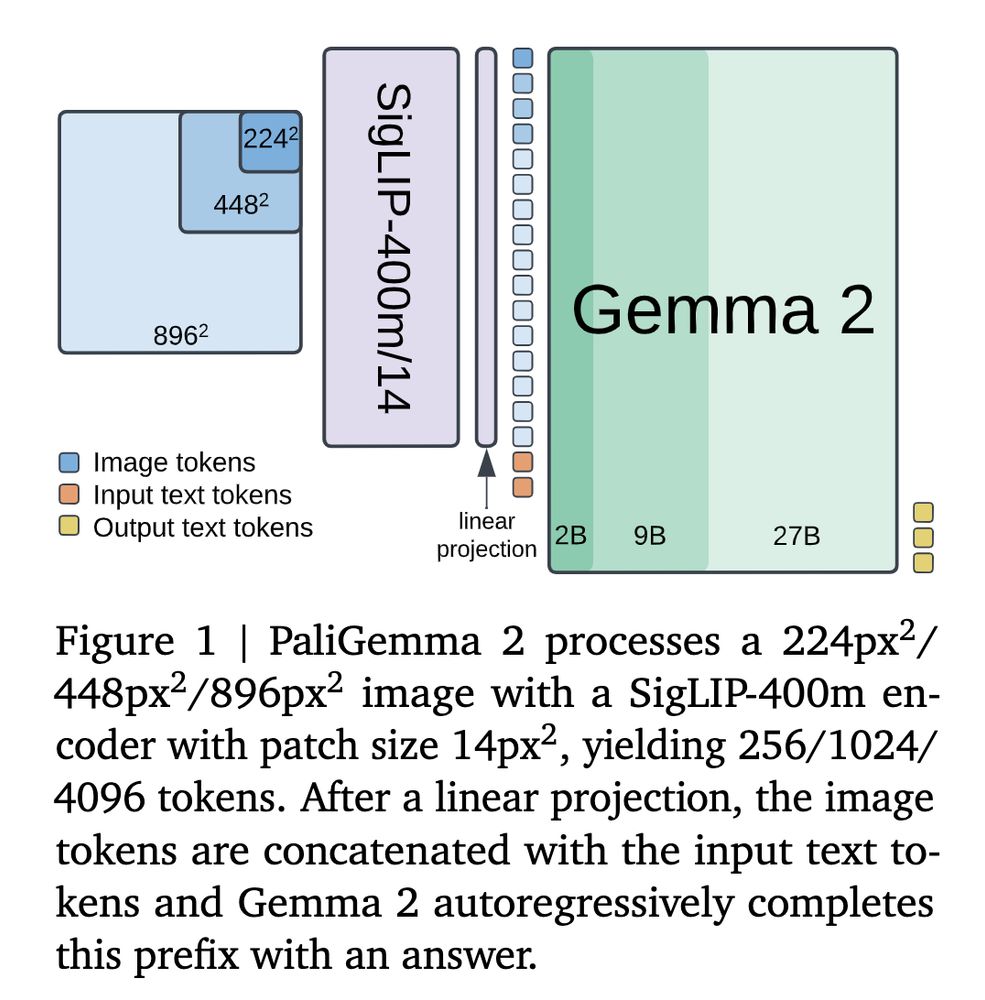

Google DeepMind announced PaliGemma 2 last week. It is an upgrade of the PaliGemma open Vision-Language Model (VLM) based on the Gemma 2 family of language models. What does this generation of PaliGemma bring to the table? I finished reading the technical report, and here is a summary:

venturebeat.com/ai/emergence...

x.com/A_K_Nain/sta...

1. Laggy

2. Half the time the tabs don't work

3. Feed is still broken

4. No bookmarks yet

5. Hyperlinks work randomly

magic-with-latents.github.io/latent/posts...

arxiv.org/abs/2411.11844

arxiv.org/abs/2411.13676

arxiv.org/abs/2411.14762
