Come say hi 🦧 | Exploring LLM agents rn

huyenchip.com/2025/01/16/a...

Would love to hear from your experience about the pitfalls you've seen!

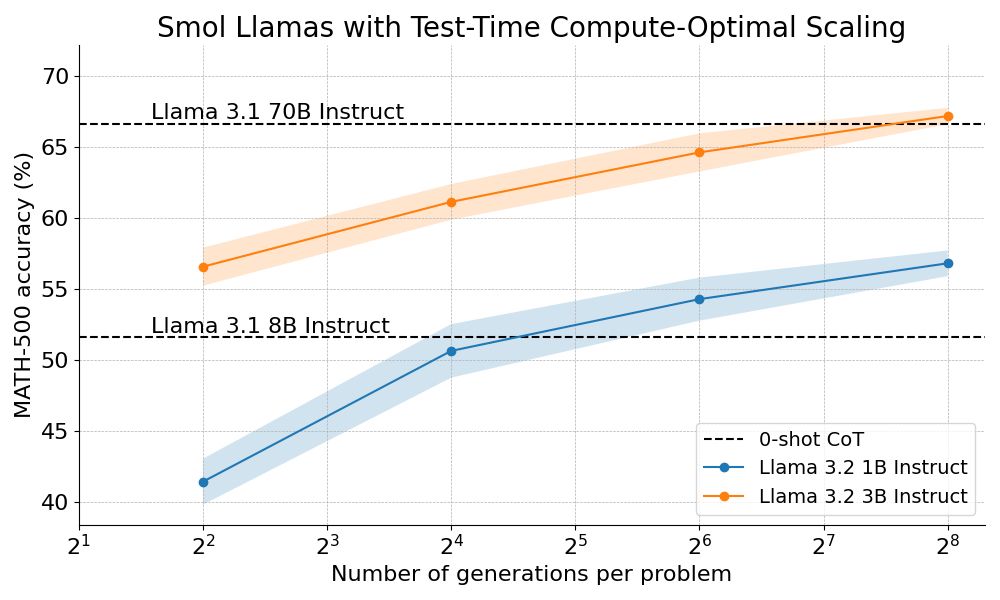

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇

But for LLMs it's all about the ctx_len. Some that are 5k+ tokens make models freak out and start outputting gibberish or multilingual text
