💬 AMA: Tue, Oct 28 — 8:00 PT / 16:00 CEST

💡 Bring your questions!

🔗 discord.gg/ai2

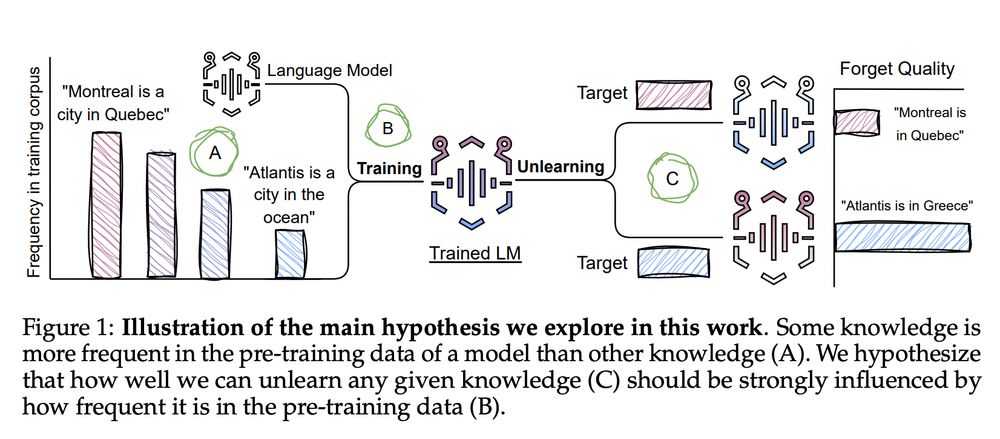

We show that data properties shape how LLMs forget — stop by to chat more!

🗓 Wednesday, Oct 8

🕓 4:30–6:30 pm

📍 poster #710 (session 4)

paper: arxiv.org/abs/2504.05058

Work with @mariusmosbach.bsky.social @sivareddyg.bsky.social

Love the detailed Montreal spots mentioned!

Consider including such a section in your next appendix!

(paper by @a-krishnan.bsky.social arxiv.org/pdf/2504.050...)

w/ Michelle Yang, @sivareddyg.bsky.social, @msonderegger.bsky.social and @dallascard.bsky.social 👇 (1/12)

We are thrilled to announce that Prof. Karen Livescu will keynote our Special Session on Interpretable Audio and Speech Models at #Interspeech2025:

"What can interpretability do for us (and what can it not)?"

🗓️ Aug 18, 11:00

@interspeech.bsky.social

Co-authors: @hmohebbi.bsky.social, Arianna Bisazza, Afra Alishahi, @grzegorz.chrupala.me

Find the preprint here: arxiv.org/abs/2505.16406

www.quantamagazine.org/when-chatgpt...

"On Linear Representations and Pretraining Data Frequency in Language Models":

We provide an explanation for when & why linear representations form in large (or small) language models.

Led by @jackmerullo.bsky.social, w/ @nlpnoah.bsky.social & @sarah-nlp.bsky.social

142-page report diving into the reasoning chains of R1. It spans 9 unique axes: safety, world modeling, faithfulness, long context, etc.

Now on arxiv: arxiv.org/abs/2504.07128

We are releasing the first benchmark for measuring how well automatic evaluators, such as LLM judges, assess web agent trajectories.

Also, we are organizing a workshop at #ICML2025 inspired by some of the questions discussed in the paper: actionable-interpretability.github.io

🔗: arxiv.org/abs/2504.05058

🌐 sites.google.com/view/vlms4all

To find out, we introduce SafeArena (safearena.github.io), a benchmark to assess the capabilities of web agents to complete harmful web tasks. A thread 👇

⏳ Reminder: The #Interspeech2025 deadline is approaching! 🚀 If your work focuses on interpretability in speech & audio, submit through our Special Session and showcase your research! 🎤

#Interpretability @interspeech.bsky.social
