Yong Zheng-Xin (Yong)

@yongzx.bsky.social

ml research at Brown University // collab at Meta AI and Cohere For AI

🔗 yongzx.github.io

Reposted by Yong Zheng-Xin (Yong)

It’s been two years since cross-lingual jailbreaks were first discovered. How far has the multilingual LLM safety research field advanced? 🤔

📏 Our comprehensive survey reveals that there is still a long way to go.

June 3, 2025 at 1:59 PM

Reposted by Yong Zheng-Xin (Yong)

🚨LLM safety research needs to be at least as multilingual as our models.

What's the current stage and how to progress from here?

This work led by @yongzx.bsky.social has answers! 👇

It’s been two years since cross-lingual jailbreaks were first discovered. How far has the multilingual LLM safety research field advanced? 🤔

📏 Our comprehensive survey reveals that there is still a long way to go.

June 4, 2025 at 11:44 AM

Reposted by Yong Zheng-Xin (Yong)

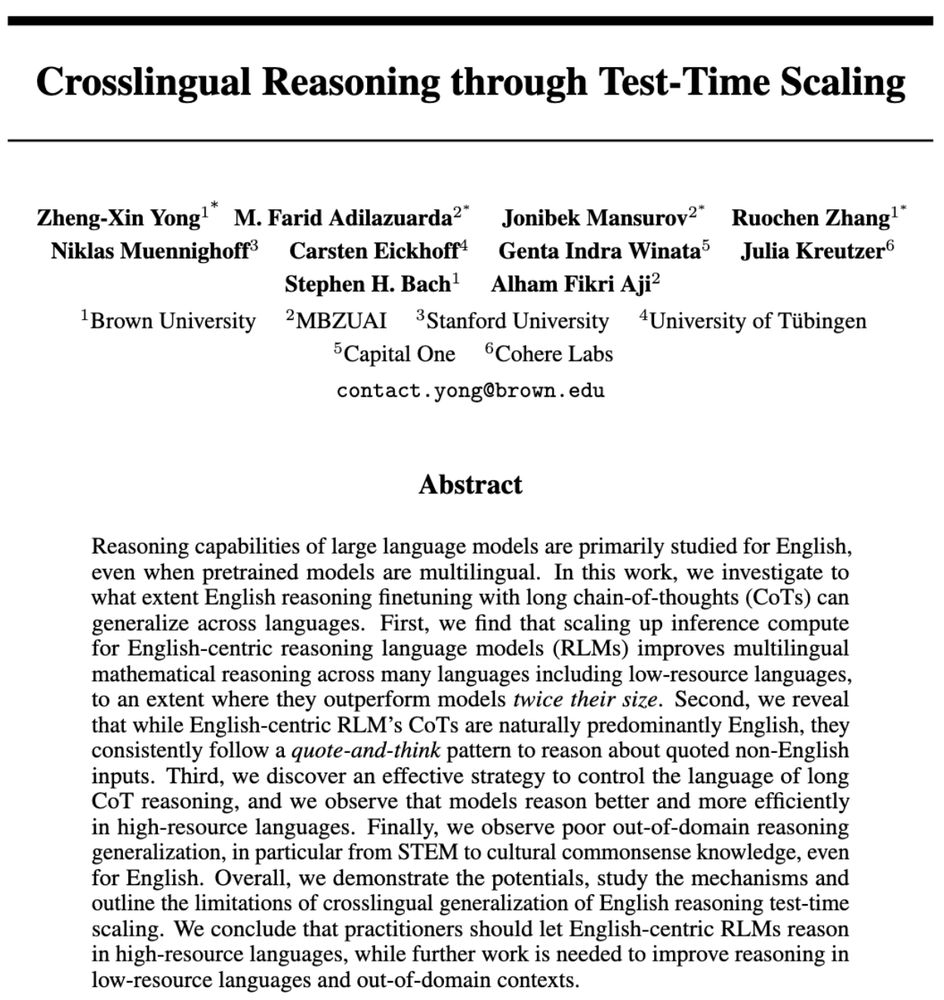

Can English-finetuned LLMs reason in other languages?

Short Answer: Yes, thanks to “quote-and-think” + test-time scaling. You can even force them to reason in a target language!

But:

🌐 Low-resource langs & non-STEM topics still tough.

New paper: arxiv.org/abs/2505.05408

May 10, 2025 at 3:12 PM

Reposted by Yong Zheng-Xin (Yong)

Multilingual 🤝reasoning 🤝 test-time scaling 🔥🔥🔥

New preprint!

@yongzx.bsky.social has all the details 👇

📣 New paper!

We observe that reasoning language models finetuned only on English data are capable of zero-shot cross-lingual reasoning through a "quote-and-think" pattern.

However, this does not mean they reason the same way across all languages or in new domains.

[1/N]

May 9, 2025 at 8:00 PM

📣 New paper!

We observe that reasoning language models finetuned only on English data are capable of zero-shot cross-lingual reasoning through a "quote-and-think" pattern.

However, this does not mean they reason the same way across all languages or in new domains.

[1/N]

May 9, 2025 at 7:53 PM
