🔗 yongzx.github.io

This suggests future work is also needed for cross-domain generalization of test-time scaling.

[11/N]

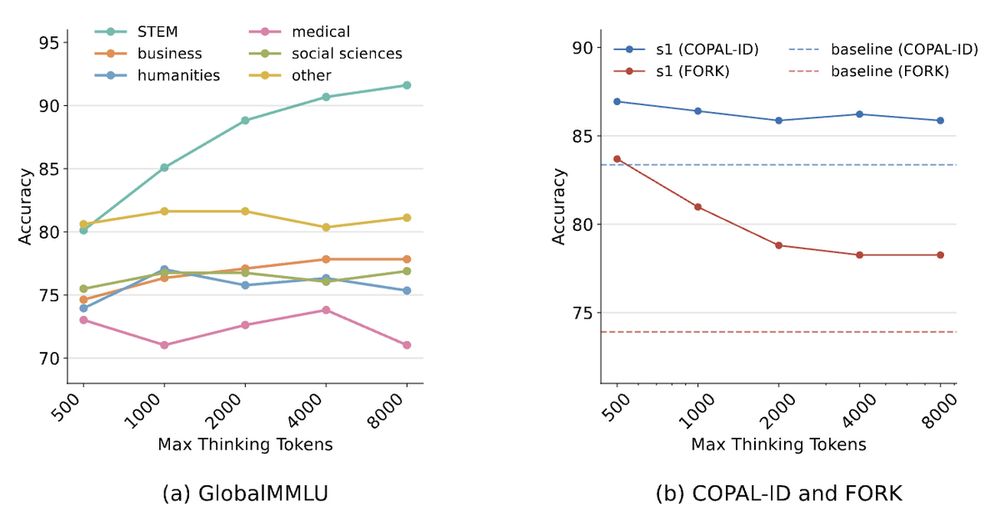

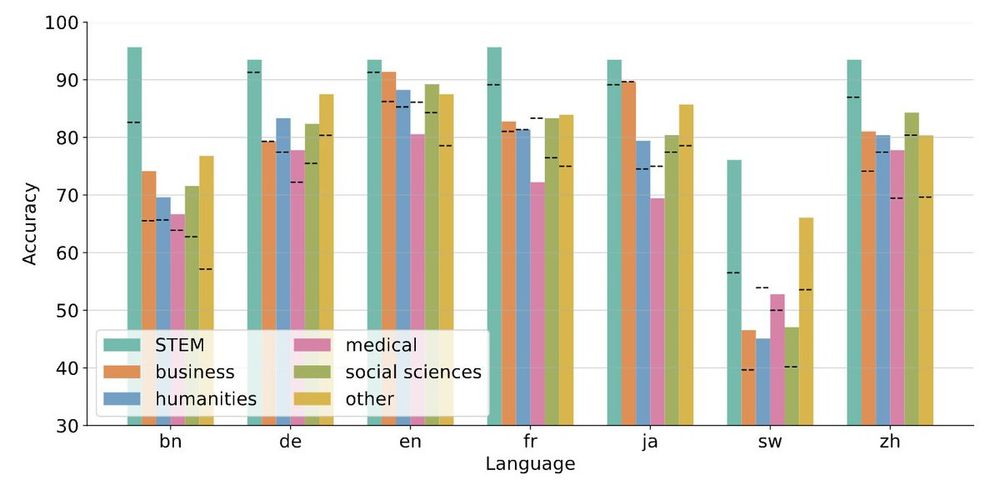

We evaluate multilingual cross-domain generalization on three benchmarks.

We observe poor transfer of math reasoning finetuning to other subjects, such as humanities and medicine, on the GlobalMMLU benchmark.

[10/N]

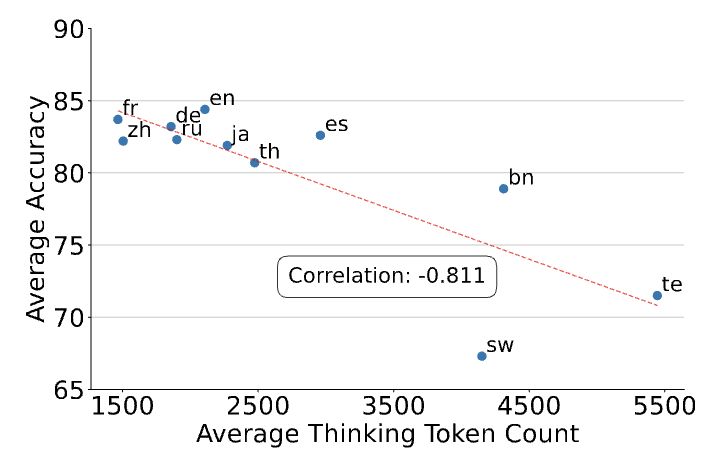

This double disadvantage suggests future work is needed for efficient reasoning in low-resource languages.

[9/N]

[8/N]

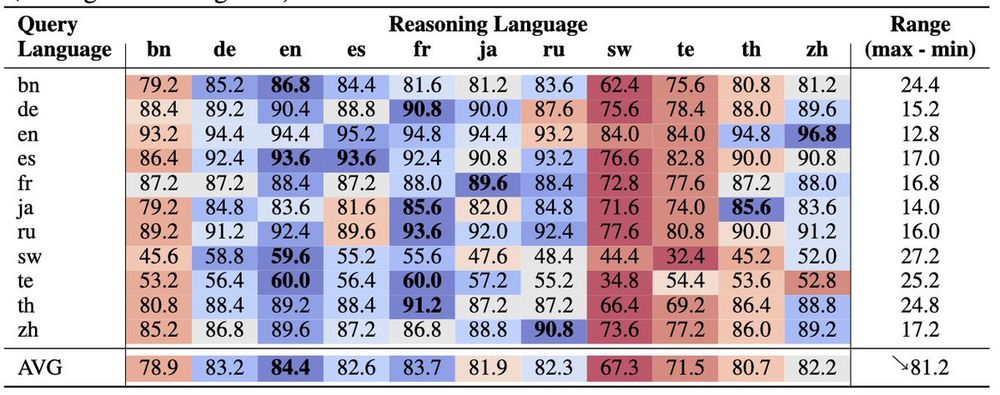

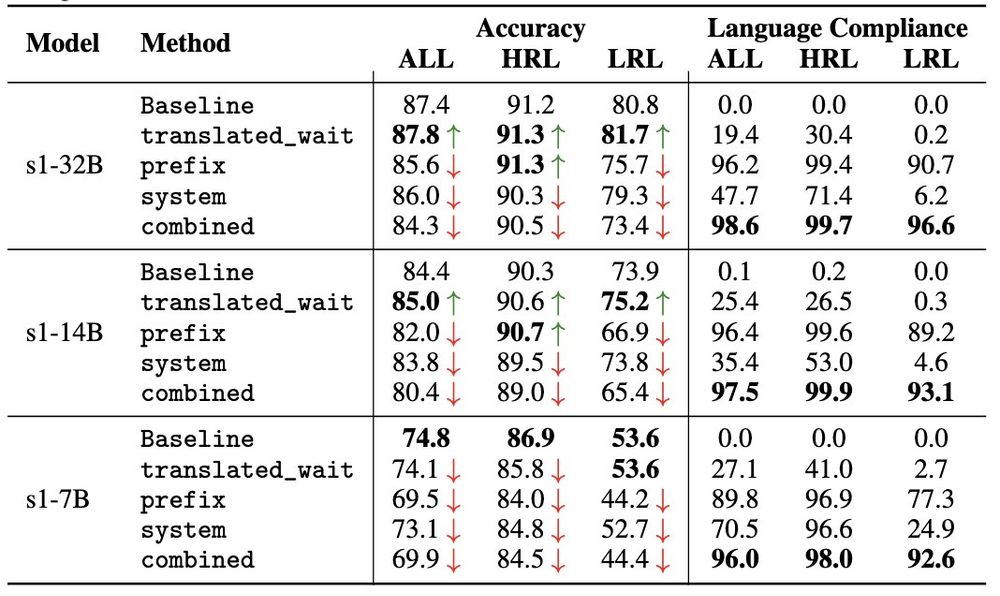

We show that we can force English-centric reasoning models to think in non-English languages through a combination of translated wait tokens, prefix, and system prompt.

Together, they yield a nearly 100% success rate (measured by language compliance)!

[7/N]
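
A minimal sketch of how the three ingredients in this post (system prompt, prefix, translated wait token) could be wired together, plus one way to score language compliance. Everything named here is an assumption for illustration and not taken from the paper: the `FORCING` table, the French and Spanish strings, the helper names, and the `detect` callable, which stands in for whatever language-identification tool you prefer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass(frozen=True)
class LanguageForcing:
    """Hypothetical bundle of the three forcing ingredients for one language."""
    system_prompt: str  # instruction to think in the target language
    prefix: str         # text pre-filled at the start of the model's reasoning
    wait_token: str     # translated "Wait", appended to extend the reasoning


# Illustrative strings only; the wording actually used in the paper may differ.
FORCING: Dict[str, LanguageForcing] = {
    "fr": LanguageForcing(
        system_prompt="Réfléchis et réponds toujours en français.",
        prefix="D'accord, réfléchissons étape par étape.",
        wait_token="Attends",
    ),
    "es": LanguageForcing(
        system_prompt="Piensa y responde siempre en español.",
        prefix="De acuerdo, pensemos paso a paso.",
        wait_token="Espera",
    ),
}


def build_messages(question: str, lang: str) -> List[Dict[str, str]]:
    """Return chat messages with the system prompt and a pre-filled prefix."""
    cfg = FORCING[lang]
    return [
        {"role": "system", "content": cfg.system_prompt},
        {"role": "user", "content": question},
        # The assistant turn is left open so the model continues from the prefix.
        {"role": "assistant", "content": cfg.prefix},
    ]


def extend_thinking(partial_reasoning: str, lang: str) -> str:
    """Append the translated wait token so the model keeps reasoning in `lang`."""
    return partial_reasoning.rstrip() + "\n" + FORCING[lang].wait_token


def compliance_rate(
    outputs: Sequence[str],
    target_lang: str,
    detect: Callable[[str], str],
) -> float:
    """Fraction of outputs whose detected language equals `target_lang`."""
    if not outputs:
        return 0.0
    hits = sum(1 for text in outputs if detect(text) == target_lang)
    return hits / len(outputs)
```

Passing `detect` in as a parameter keeps the sketch independent of any particular language-identification library; swap in a real detector when scoring actual model outputs.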

[6/N]

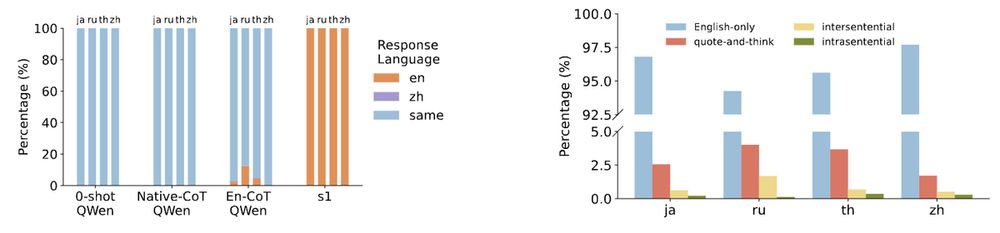

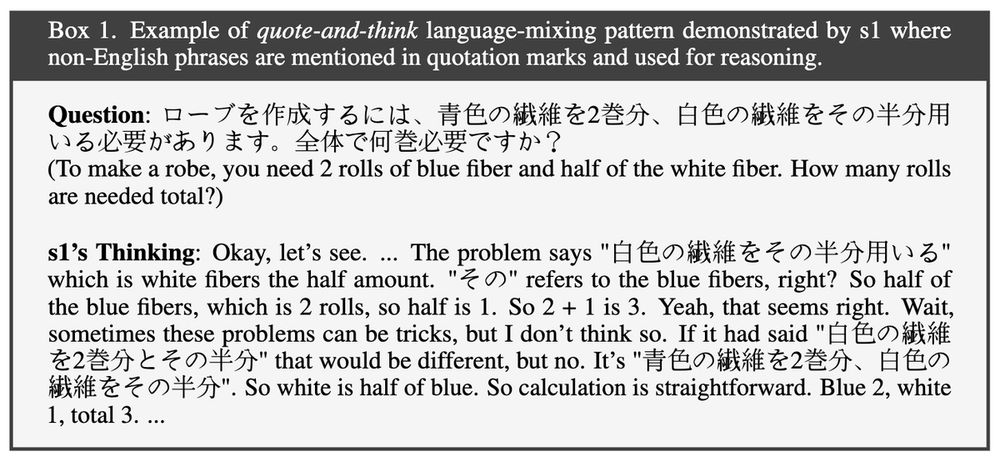

We analyze how English-centric reasoning models think when given non-English questions, and we observe that they mix languages through a sophisticated quote-and-think pattern.

[4/N]

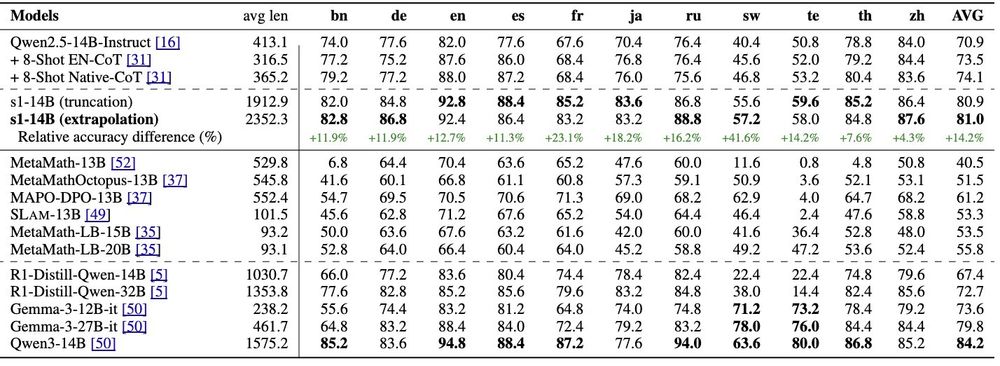

(1) models finetuned on multilingual math reasoning data and

(2) SOTA reasoning models (e.g., Gemma3 and r1-distilled models) twice its size.

[3/N]

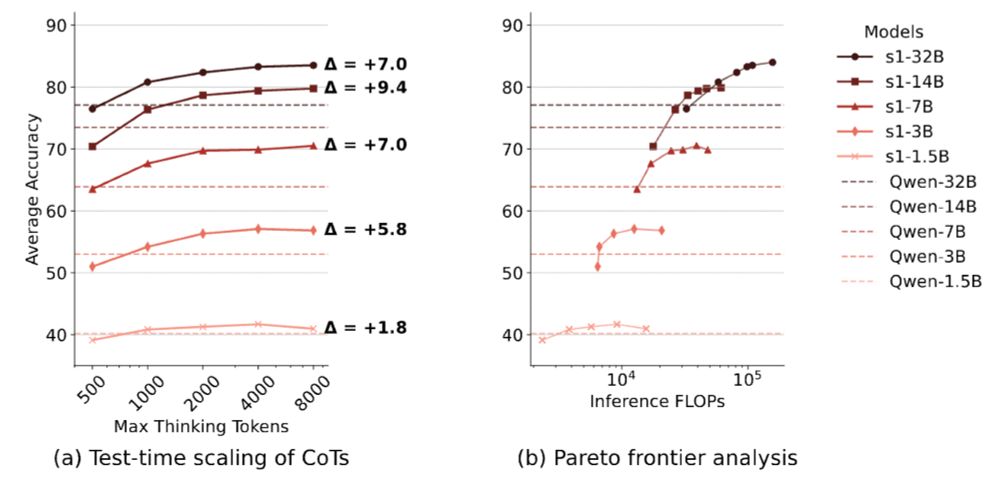

We observe cross-lingual benefits of test-time scaling for English-centric reasoning models with ≥3B parameters.

Furthermore, with sufficient test-time compute, 14B models can even outperform 32B models on the MGSM benchmark!

[2/N]

We observe that reasoning language models finetuned only on English data are capable of zero-shot cross-lingual reasoning through a "quote-and-think" pattern.

However, this does not mean they reason the same way across all languages or in new domains.

[1/N]
