Dr. Émile P. Torres

@xriskology.bsky.social

Philosopher and historian of human extinction (sadly, an increasingly relevant topic). Coined the term “TESCREAL” w/ Timnit Gebru. Salon, Truthdig, WaPo, Aeon, etc.

Pinned

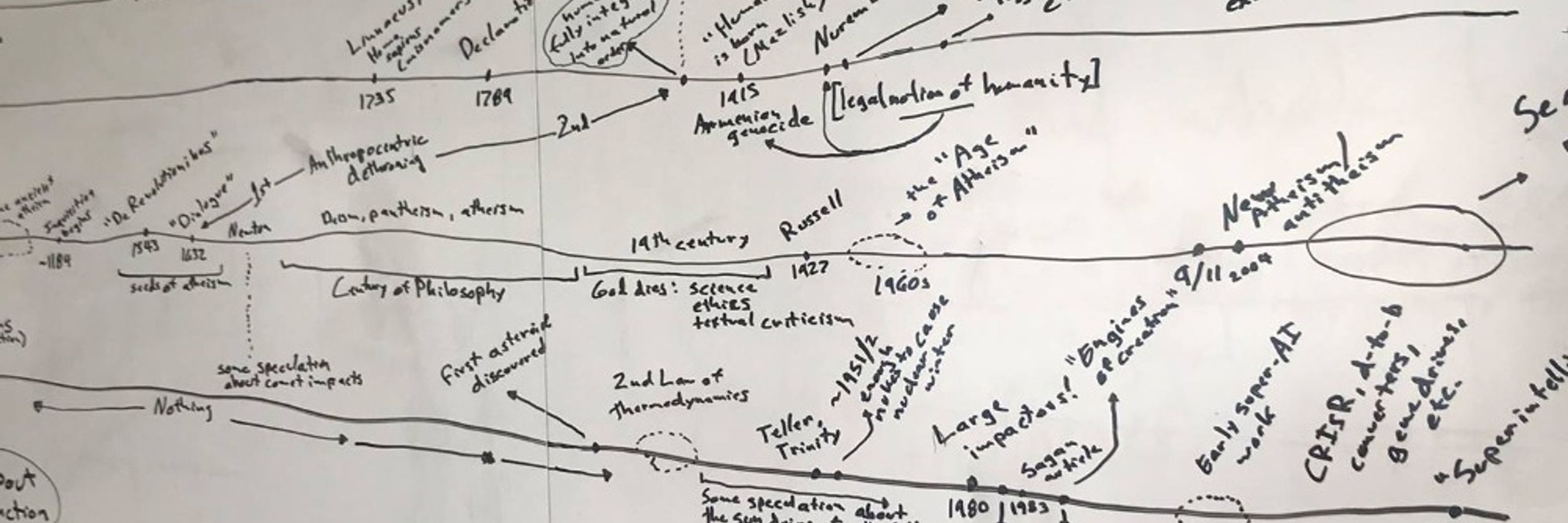

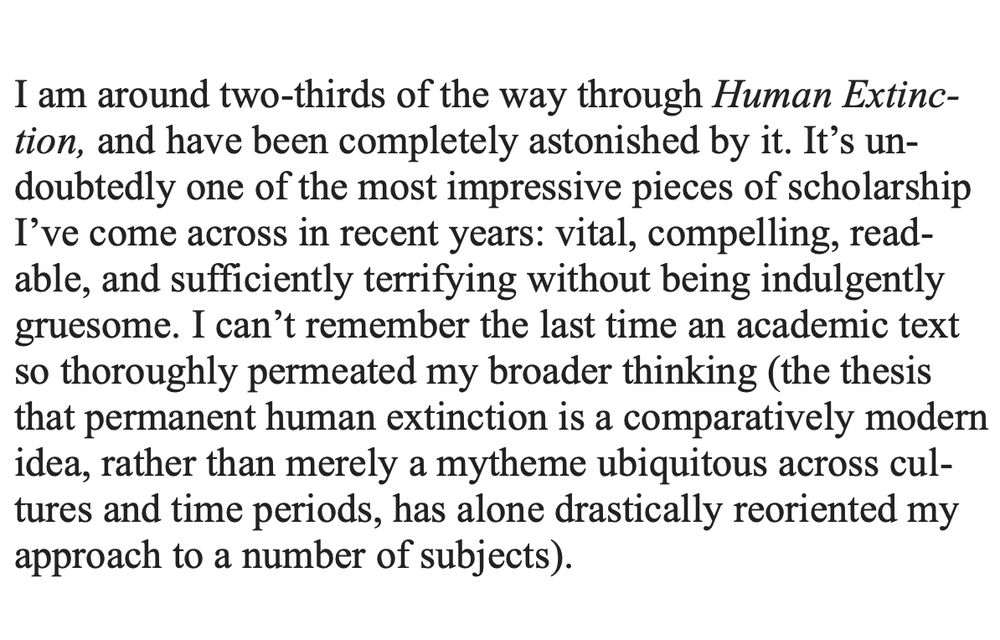

A nice message about my book "Human Extinction: A History of the Science and Ethics of Annihilation" from someone at the London Review of Books. Sharing it with permission! :-)

Ever come across Etymology Nerd's videos on YouTube or TikTok? Well, he joined Kate Willett and me to discuss his fascinating and brilliant new book, Algospeak. It was an incredibly fun conversation, which you can listen to here: www.patreon.com/posts/algosp...

Algospeak, Vibes, and Post-Rats with Adam Aleksic (Etymology Nerd) | Dystopia Now

Get more from Dystopia Now on Patreon

www.patreon.com

November 10, 2025 at 5:22 PM

New article on Sam Altman's long history of abusive, toxic, manipulative, duplicitous behavior (those aren't my words, but the words of people who have directly interacted with him). The guy is a complete conman, worthy of the derogatory moniker "Scam Altman." Read more:

Is Sam Altman a Sociopath?

On his long history of mendacious, manipulative, and abusive behavior. (1,500 words.)

www.realtimetechpocalypse.com

November 7, 2025 at 9:29 PM

New article on some surprising words from the TESCREAL extremist Nick Bostrom. He suggests that Yudkowsky wants to *never* build ASI, which is unequivocally false. He also says that we should risk the total annihilation of everyone on Earth for a chance of becoming immortal, lol.

Nick Bostrom Splits With Eliezer Yudkowsky: "If Nobody Builds It, Everyone Dies"

Bostrom misleads in suggesting that Yudkowsky doesn't want ASI asap. Here's what he's saying ... (930 words)

www.realtimetechpocalypse.com

November 3, 2025 at 6:18 PM

Reposted by Dr. Émile P. Torres

“Who gets to be seen as fully human in this world? Our job is to expand that and to push and make that as wide as it can possibly be.”

Artist Mimi Ọnụọha's work questions the contradictions of technological progress. Anika Collier Navaroli spoke to her for a special podcast series:

Through to Thriving: Connecting Art and Policy with Mimi Ọnụọha | TechPolicy.Press

Mimi Ọnụọha's work "questions and exposes the contradictory logics of technological progress."

www.techpolicy.press

November 2, 2025 at 2:37 PM

At 7:02 in this NHL recap, the commentator declares that the Blue Jays just won the World Series, as a crowd of Canadians (in Edmonton) erupt with rambunctious applause. What's going on here?? youtu.be/ho3DjTOepG0?...

November 2, 2025 at 2:42 PM

Reposted by Dr. Émile P. Torres

New article just published! I address Bill Gates' recent pivot away from climate change, and explain why his message is both dangerous (emboldening climate deniers) and incoherent (climate change mitigation is inextricably bound up with alleviating global poverty). GRRRR!!!

Why Bill Gates’ Pivot Away from Climate Change Is So Dangerous (and Totally Incoherent)

My apologies for not having published an article earlier this week — I’ve been moving, and so have had much less time than usual to write!

www.realtimetechpocalypse.com

October 31, 2025 at 11:11 PM

New article just published! I address Bill Gates' recent pivot away from climate change, and explain why his message is both dangerous (emboldening climate deniers) and incoherent (climate change mitigation is inextricably bound up with alleviating global poverty). GRRRR!!!

Fantastic article by @garethwatkins.bsky.social. Highly recommended reading:

www.newstatesman.com/ideas/2025/1...

The guru of the AI apocalypse

Eliezer Yudkowsky is confusing a very human obsession with death and a very modern fear of a techno-deity

www.newstatesman.com

November 1, 2025 at 12:48 AM

Reposted by Dr. Émile P. Torres

The most extreme form of eugenics ideology.

It’s like Nazis said:

“No, actually we don’t care about any creature with blood in it. We just want to pave the earth with data centres and upload our Aryan brains into the cloud.”

October 27, 2025 at 5:33 AM

Reposted by Dr. Émile P. Torres

It seems that the new book by Eliezer Yudkowsky and Nate Soares is getting traction and @theguardian.com even wrote about it. So here is a different viewpoint by @xriskology.bsky.social

www.truthdig.com/articles/und...

Under a Mask of AI Doomerism, the Familiar Face of Eugenics - Truthdig

In a new book, Eliezer Yudkowsky and Nate Soares hide their radical transhumanist agenda under the cover of concern about “AI safety.”

www.truthdig.com

October 28, 2025 at 6:26 PM

Reposted by Dr. Émile P. Torres

It was an absolute pleasure to get to sit down with @xriskology.bsky.social and chat about the eugenic cult ideologies driving the "AI" race in Silicon Valley.

Listen in to find out how these ideas evolved directly out of Western Christianity /1

unwell-to-begin-with.podbean.com/e/episode-3-...

Episode 3: Silicon Valley's TESCREAL eugenics cult with Dr. Émile Torres | Unwell to Begin With

“Many people find these ideologies to be so risible and silly that they wonder why anyone would spend time talking about them. And… the best response I have is that the reason we should talk about the...

unwell-to-begin-with.podbean.com

October 26, 2025 at 4:29 PM

New article on CogPGT, "the world’s most powerful genetic predictor of IQ," plus other examples of how influential eugenics has become in recent years. I also explain why I think "IQ" is an utterly meaningless measure of "intelligence." (Ever heard of the "BITCH" test? Lol.) What do you think?

From Trump to TESCREAL, Eugenics Is Everywhere

Most people think eugenics largely vanished after the Nazis' atrocities. But that's not true: eugenics is alive and well, and probably more influential today than ever. (2,200 words.)

www.realtimetechpocalypse.com

October 26, 2025 at 4:24 PM

Reposted by Dr. Émile P. Torres

He’s designing the ballroom with AI isn’t he www.nytimes.com/interactive/...

October 25, 2025 at 3:45 PM

Reposted by Dr. Émile P. Torres

This was a great read, and raises an important question.

New article about the Future of Life Institute's (@futureoflife.org) statement on the race to build "superintelligent" machines, which was signed by people on all sides of the political spectrum. I also signed this statement, and explain why here: www.realtimetechpocalypse.com/p/when-is-it...

When Is It Morally Acceptable To Align With Steve Bannon?

I need your help!!

www.realtimetechpocalypse.com

October 24, 2025 at 4:22 AM

Reposted by Dr. Émile P. Torres

«When they [Yudkowsky and Soares] look up at the stars, they don’t see beauty in the pristine firmament but a vast reservoir of untapped resources to be exploited for the purpose of maximizing profit and “value.”»

Excellent from @xriskology.bsky.social !

www.truthdig.com/articles/und...

Under a Mask of AI Doomerism, the Familiar Face of Eugenics - Truthdig

In a new book, Eliezer Yudkowsky and Nate Soares hide their radical transhumanist agenda under the cover of concern about “AI safety.”

www.truthdig.com

October 24, 2025 at 9:40 AM

New article about the Future of Life Institute's (@futureoflife.org) statement on the race to build "superintelligent" machines, which was signed by people on all sides of the political spectrum. I also signed this statement, and explain why here: www.realtimetechpocalypse.com/p/when-is-it...

When Is It Morally Acceptable To Align With Steve Bannon?

I need your help!!

www.realtimetechpocalypse.com

October 23, 2025 at 4:59 PM

Friends: I just put together a website dedicated to "Silicon Valley pro-extinctionism." It includes a list of articles that I've recently published on the topic. In my view, this is one of the most important issues that almost no one is talking about. See the link below!

RESOURCES | Émile P. Torres

www.xriskology.com

October 21, 2025 at 5:29 PM

New podcast episode!! This one was extremely interesting, imo--Kate Willett (@katewillett.bsky.social) explores the evolution of comedy over the past 10-15 years, from the days of Broad City up to contemporary bro culture, and how tech has influenced this. Really fascinating stuff! Listen below:

October 21, 2025 at 4:48 PM

In case you missed it, here's my most recent newsletter article -- on Eliezer Yudkowsky's very long history of defending incredibly dumb ideas. Why anyone takes this guy seriously will forever be mysterious to me. www.realtimetechpocalypse.com/p/eliezer-yu...

Eliezer Yudkowsky's Long History of Bad Ideas

Two days ago, Truthdig published my review of Eliezer Yudkowsky and Nate Soares’ book If Anyone Builds It, Everyone Dies. Please read and share it, if you have the time.

www.realtimetechpocalypse.com

October 20, 2025 at 6:23 PM

Reposted by Dr. Émile P. Torres

Here I worked for years to discredit a bonkers idea:

That *if* a fully autonomous AI system would *ever* develop through capitalist exploitation and replacement of workers, that such a system could even be *theoretically* controlled to stay safe.

1/

![Where I strongly disagree with the authors is on the question of whether ASI can ever be controlled. Yudkowsky and Soares believe controllability is feasible if AI safety research leads the way over AI capabilities research. "The ASI alignment problem," they write, "is possible to solve in principle." But I see no reason for accepting this claim (and they provide no convincing reasons for believing it). How could we possibly ensure that a dynamic, constantly evolving cluster of self-improving algorithms remains under our control for more than a flash? As the computer scientist Roman Yampolskiy compellingly makes the point:

We don't have static software. We have a system which is dynamically learning, changing, rewriting code indefinitely. It's a perpetual motion problem we're trying to solve. We know in physics you cannot create [a] perpetual motion device. But in AI, in computer science, we're saying we can create [a] perpetual safety device, which will always guarantee that the new iteration is just as safe.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:wwoynpmtuwhhfus356bnkz7w/bafkreids3p7ygnpixpeu2yciwtzfyym6eq7s2altkgntrlsjwl4iejyqea@jpeg)

October 18, 2025 at 1:41 AM

Reposted by Dr. Émile P. Torres

This is why I included Yudkowsky, along with his supposed foes Musk, Thiel, Altman et al, in this linked thread btw. @xriskology.bsky.social delineates just how eugenic it is

bsky.app/profile/mrvi...

My review of Yudkowsky and Soares' new book: If Anyone Builds It, Everyone Dies. I consider Yudkowsky to be an intellectual fraud, and his book is nothing more than TESCREAL propaganda--in truth, he's pro-ASI and pro-extinctionist. Read more below:

www.truthdig.com/articles/und...

Under a Mask of AI Doomerism, the Familiar Face of Eugenics - Truthdig

In a new book, Eliezer Yudkowsky and Nate Soares hide their radical transhumanist agenda under the cover of concern about “AI Safety.”

www.truthdig.com

October 18, 2025 at 3:35 AM

Article on Eliezer Yudkowsky's long history of terrible ideas. The guy has an ego the size of Jupiter, sees himself as a messianic figure on a mission to save posthumanity, & pontificates about topics he doesn't understand--including computer science--despite being frequently wrong about everything.

Eliezer Yudkowsky's Long History of Bad Ideas

Two days ago, Truthdig published my review of Eliezer Yudkowsky and Nate Soares’ book If Anyone Builds It, Everyone Dies. Please read and share it, if you have the time.

www.realtimetechpocalypse.com

October 19, 2025 at 4:51 PM

Reposted by Dr. Émile P. Torres

The tech billionaires charting our future right now are kooks. And we should really start acknowledging and discussing them as such.

New article on three lies that longtermists spread about their ideology. These are: (1) That they care about avoiding human extinction. (2) That they care about the long-term future. And (3) that "future people matter." I explain why these are lies below!! Like, share, and subscribe for more. :-)

Three Lies Longtermists Like To Tell About Their Bizarre Beliefs

Longtermists don't actually care about avoiding human extinction. They don't care about the long-term future of humanity. And they don't really care about future people.

www.realtimetechpocalypse.com

October 17, 2025 at 2:54 AM

Reposted by Dr. Émile P. Torres

This is why, on the surface, for a bit there, I'd say the @sentientism.bsky.social & Effective Altruism folks appeared aligned.

But, EA fails the way many ideologies fail, in that it does not give proper consideration to autonomy & consent of sentient beings to expand our circles of compassion.

New article on three lies that longtermists spread about their ideology. These are: (1) That they care about avoiding human extinction. (2) That they care about the long-term future. And (3) that "future people matter." I explain why these are lies below!! Like, share, and subscribe for more. :-)

Three Lies Longtermists Like To Tell About Their Bizarre Beliefs

Longtermists don't actually care about avoiding human extinction. They don't care about the long-term future of humanity. And they don't really care about future people.

www.realtimetechpocalypse.com

October 17, 2025 at 3:35 AM

Reposted by Dr. Émile P. Torres

“It’s infuriating that longtermists keep telling these lies to trick people into thinking their view is more reasonable than it is. In truth, longtermism is an extremely radical view most morally sane people will vociferously reject if they understand what it’s really about.”

New article on three lies that longtermists spread about their ideology. These are: (1) That they care about avoiding human extinction. (2) That they care about the long-term future. And (3) that "future people matter." I explain why these are lies below!! Like, share, and subscribe for more. :-)

Three Lies Longtermists Like To Tell About Their Bizarre Beliefs

Longtermists don't actually care about avoiding human extinction. They don't care about the long-term future of humanity. And they don't really care about future people.

www.realtimetechpocalypse.com

October 17, 2025 at 7:35 AM
