📍York, UK

🔗 https://www-users.york.ac.uk/~waps101/

tl;dr: it is good, it even feels human, but it is not perfect.

ducha-aiki.github.io/wide-baselin...

(E.g., where does that “log” come from? Are there other possible formulas?)

Yet there's an intuitive & almost inevitable way to arrive at this expression.

x.com/chrisoffner3...

X. Nicole Han, T. Zickler and K. Nishino (Harvard+Kyoto)

Diffusion-based SFS lets you sample multistable shape perception!

Nicole at poster on Th 12/12 11am East A-C 1308

vision.ist.i.kyoto-u.ac.jp/research/mss...

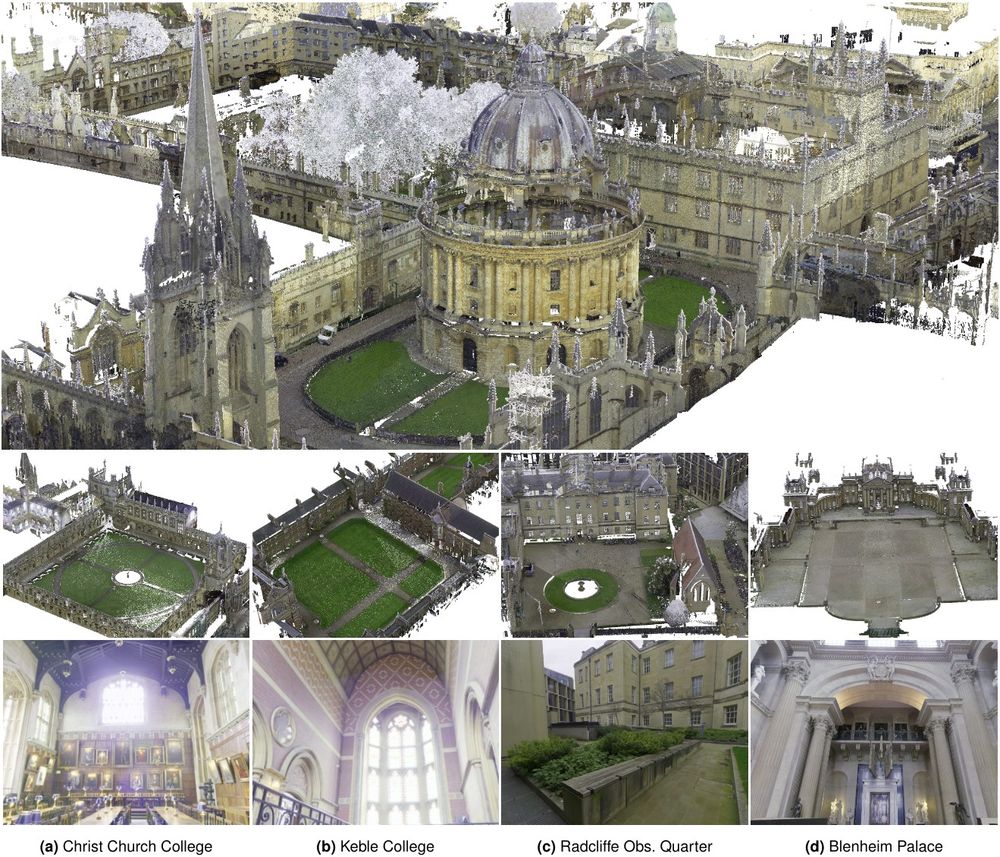

dynamic.robots.ox.ac.uk/datasets/oxf...

Accurate, fast, & robust structure + camera estimation from casual monocular videos of dynamic scenes!

MegaSaM outputs camera parameters and consistent video depth, scaling to long videos with unconstrained camera paths and complex scene dynamics!

The renamed:

Bluesky-sized history of neuroscience (biased by my interests)

deepmind.google/discover/blo...

“I tested the idea we discussed last time. Here are some results. It does not work. (… awkward silence)”

Such conversations happen so often in meetings with students. How do we move forward?

You need …

youtu.be/NLnPG95vNhQ?...

Noah Snavely @snavely.bsky.social from Cornell & Google DeepMind! 🌟

🕒 You now have 24 HOURS to ask him anything. Drop your questions in the comments below!

Keep it engaging but respectful!

A method for decomposing a video into complete layers, including objects and their associated effects (e.g., shadows, reflections).

It enables a wide range of cool applications, such as video stylization, compositions, moment retiming, and object removal.

HF: huggingface.co/Lightricks/L...

Gradio: huggingface.co/spaces/Light...

Github: github.com/Lightricks/L...

Look at that prompt example, though. You need to be a proper writer to get that quality.

-NeurIPS2024 Communication Chairs

page: genechou.com/kfcw