@quanquangu.bsky.social. I really like how they explore new techniques for RLHF

go.bsky.app/2qnppia

Simply the best fully open models yet.

Really proud of the work & the amazing team at @ai2.bsky.social

MARS is an exciting new variance reduction technique from @quanquangu.bsky.social's group that can help stabilize and accelerate your deep learning pipeline. All it needs is one extra gradient buffer. Here, MARS speeds up the convergence of PSGD, ultimately leading to a better solution.
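To make "all it needs is a gradient buffer" concrete, here is a minimal sketch, assuming the approximate MARS correction as we understand it: the corrected gradient is c_t = g_t + γ·β1/(1−β1)·(g_t − g_{t−1}), clipped to unit norm, then fed into the base optimizer's momentum. For simplicity the base optimizer here is plain momentum SGD, not PSGD or the AdamW-style update; the class name and default hyperparameters are hypothetical and only illustrative.

```python
import math


class MarsMomentum:
    """Hypothetical sketch of MARS-style variance-reduced momentum SGD.

    The only extra state compared with momentum SGD is `prev_grad`,
    the gradient from the previous step. Defaults are illustrative.
    """

    def __init__(self, dim, lr=0.01, beta1=0.9, gamma=0.025):
        self.lr = lr
        self.beta1 = beta1
        self.gamma = gamma
        self.m = [0.0] * dim   # momentum buffer
        self.prev_grad = None  # the extra gradient buffer

    def corrected_gradient(self, grad):
        # First step: no previous gradient yet, so no correction term.
        if self.prev_grad is None:
            c = list(grad)
        else:
            scale = self.gamma * self.beta1 / (1.0 - self.beta1)
            c = [g + scale * (g - p) for g, p in zip(grad, self.prev_grad)]
        # Clip the corrected gradient to unit L2 norm if it is larger.
        norm = math.sqrt(sum(x * x for x in c))
        if norm > 1.0:
            c = [x / norm for x in c]
        return c

    def step(self, params, grad):
        c = self.corrected_gradient(grad)
        # Exponential moving average of the corrected gradients.
        self.m = [self.beta1 * m + (1.0 - self.beta1) * ci
                  for m, ci in zip(self.m, c)]
        self.prev_grad = list(grad)  # store for the next correction
        return [p - self.lr * m for p, m in zip(params, self.m)]
```

For example, on f(x) = ½‖x‖², whose gradient is x itself, repeatedly calling `x = opt.step(x, list(x))` drives x toward zero. Swapping the final update line for a preconditioned step is where an optimizer like PSGD would plug in.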

go.bsky.app/2qnppia

Did I miss anyone? Tag them or let me know what to add!

go.bsky.app/VjpyyRw

AI for Science summit.

In-person places are full, but you can still join us online (sign up via the link below).

science.ai.cam.ac.uk/events/ai-fo...

go.bsky.app/LWyGAAu

go.bsky.app/2qnppia

Looking forward to seeing you all there!

@rl-conference.bsky.social

#reinforcementlearning

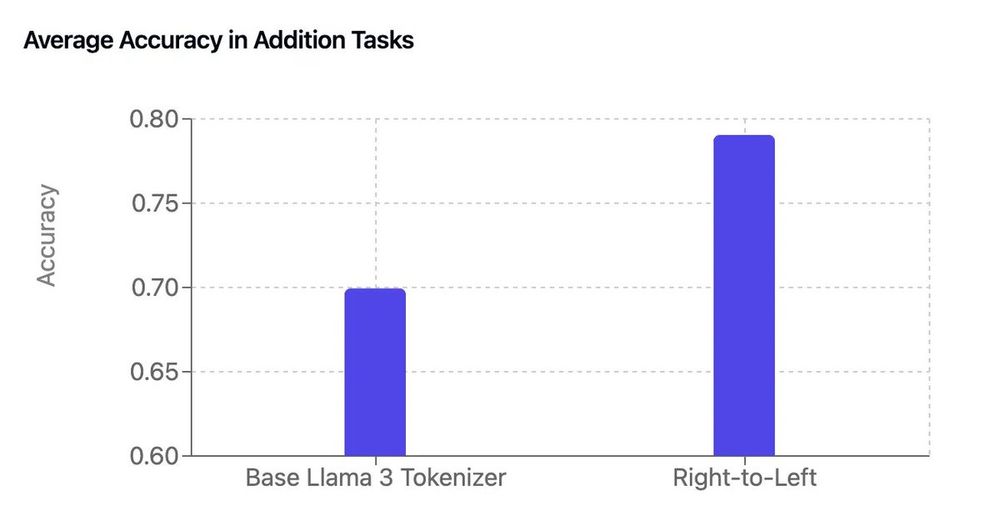

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]

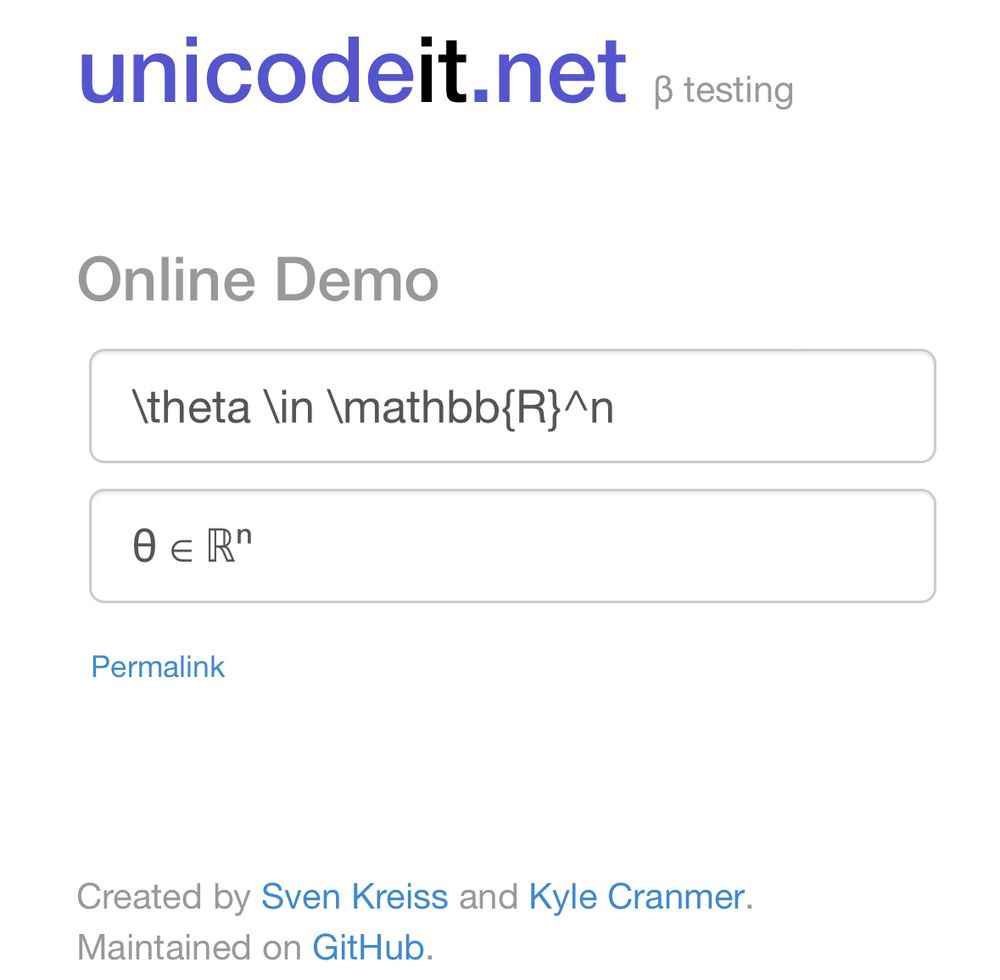

For example: θ ∈ ℝⁿ or pp̅ → μ⁺μ⁻

Use the website, or install it system-wide on Linux, macOS, or Windows.

www.unicodeit.net

(Created several years ago with @svenkreiss.bsky.social)
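To illustrate the idea behind the tool, here is a toy LaTeX-to-Unicode converter. It is a hypothetical sketch with a hand-picked table covering only the two examples above, not unicodeit's actual code or API; the real tool ships far larger symbol tables.

```python
import re

# Hypothetical, tiny subset of a LaTeX-to-Unicode table (not unicodeit's data).
SYMBOLS = {
    r"\mathbb{R}": "ℝ",
    r"\bar{p}": "p\u0305",  # p followed by a combining overline
    r"\theta": "θ",
    r"\in": "∈",
    r"\to": "→",
    r"\mu": "μ",
}

# Single-character superscripts that have dedicated Unicode code points.
SUPERSCRIPTS = {"n": "ⁿ", "+": "⁺", "-": "⁻", "2": "²"}


def to_unicode(latex: str) -> str:
    # Replace longer commands first so a command that is a prefix of
    # another one cannot clobber it.
    for cmd in sorted(SYMBOLS, key=len, reverse=True):
        latex = latex.replace(cmd, SYMBOLS[cmd])
    # Turn "^x" into a Unicode superscript when one exists; otherwise
    # leave the "^x" text unchanged.
    return re.sub(
        r"\^(.)",
        lambda m: SUPERSCRIPTS.get(m.group(1), "^" + m.group(1)),
        latex,
    )
```

With this table, `to_unicode(r"\theta \in \mathbb{R}^n")` yields `θ ∈ ℝⁿ`, and `to_unicode(r"p\bar{p} \to \mu^+\mu^-")` yields `pp̅ → μ⁺μ⁻`.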