TRL maintainer

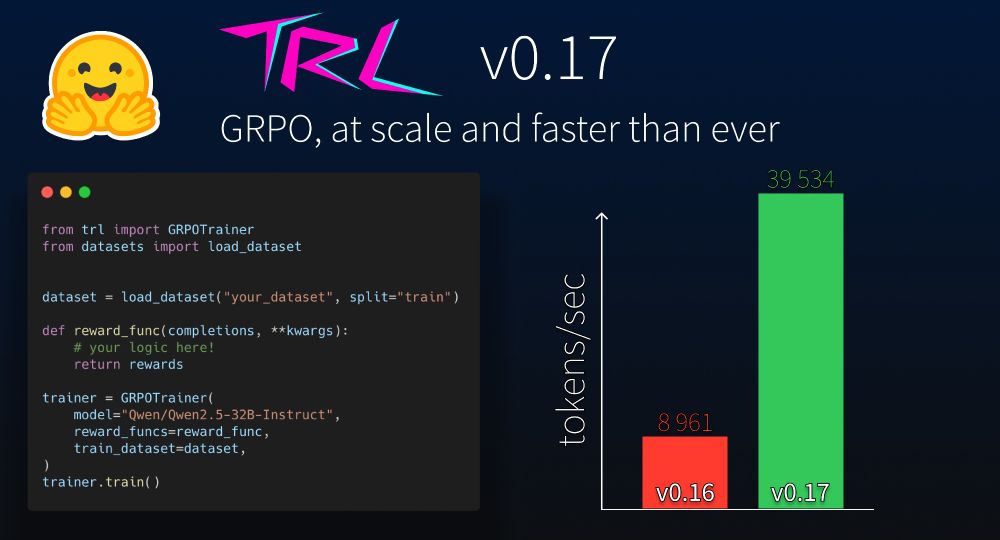

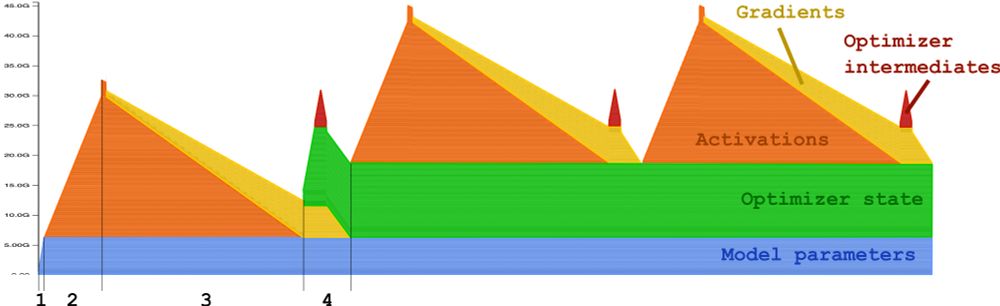

TRL 0.14 brings *GRPO*, the RL algorithm behind 🐳 DeepSeek-R1.

⚡ Blazing fast generation with vLLM integration.

📉 Optimized training with DeepSpeed ZeRO 1/2/3.
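GRPO (Group Relative Policy Optimization) drops the learned value model. Instead, it samples a group of completions for the same prompt and scores each one relative to the rest of its group. Here is a minimal Python sketch of that group-relative advantage under stated assumptions: the helper name, the population standard deviation, and the `eps` value are illustrative choices, not TRL's code.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-4) -> List[float]:
    """Score each completion relative to the other completions sampled for the same prompt."""
    mean = statistics.fmean(rewards)
    # Population std (assumption); implementations may use the sample std instead.
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when every completion gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Four completions for one prompt, rewarded 1.0 if the final answer is correct (toy rewards).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
# A group where all completions tie carries no learning signal.
print(group_relative_advantages([1.0, 1.0, 1.0]))  # [0.0, 0.0, 0.0]
```

In the full algorithm, these advantages feed a PPO-style clipped objective, typically with a KL penalty toward a reference model. That part is omitted here.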

=> huggingface.co/blog/open-r1

Hugging Face is openly reproducing the pipeline of 🐳 DeepSeek-R1. Open data, open training, open models, open collaboration.

🫵 Let's go!

github.com/huggingface/...

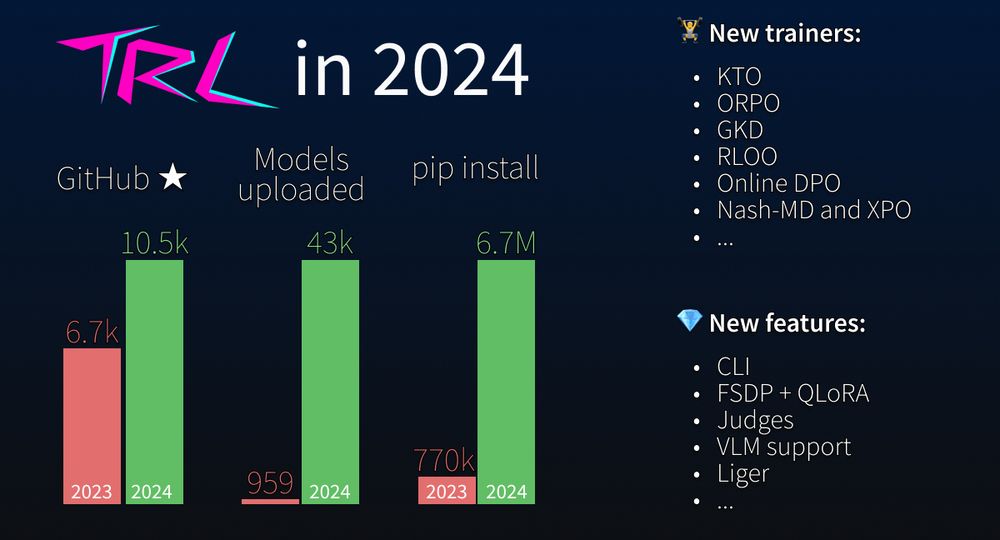

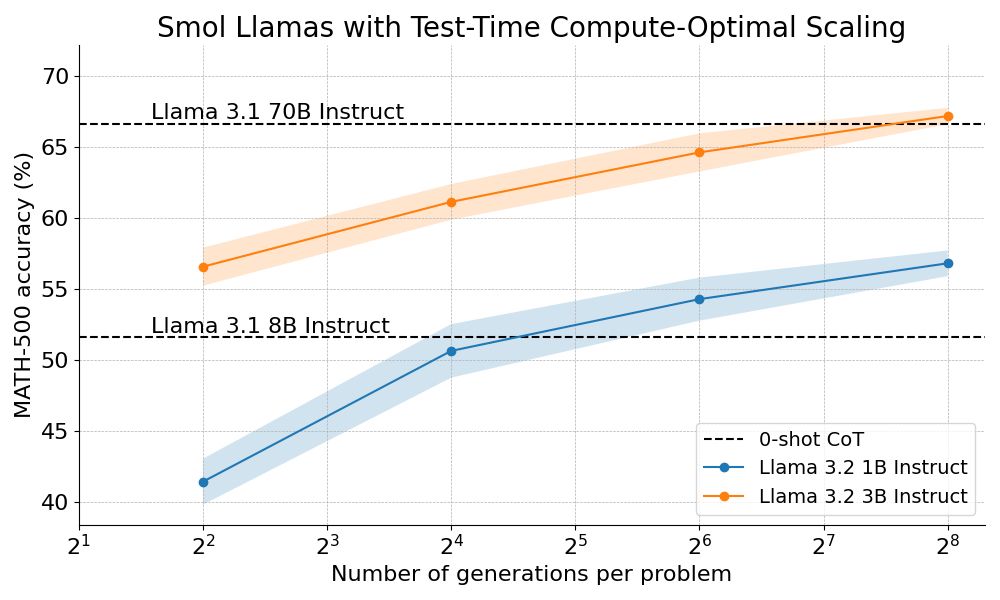

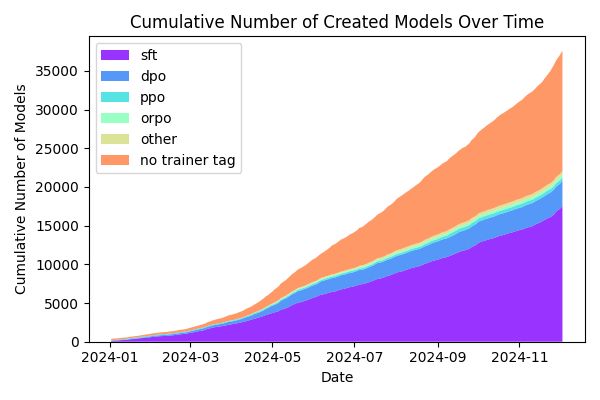

It has seen impressive growth this year: lots of new features and an improved codebase have translated into increased usage. You can count on us to do even more in 2025.

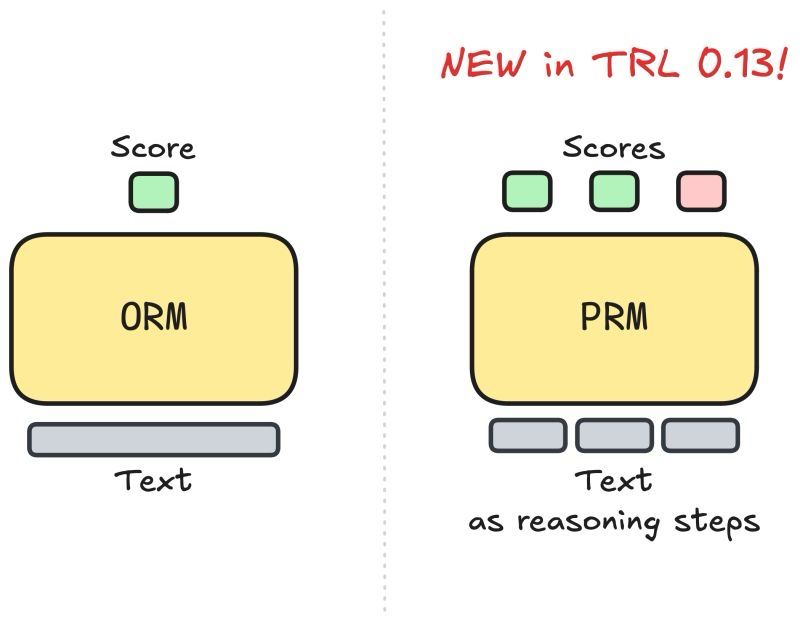

Featuring a Process-supervised Reward Model (PRM) Trainer 🏋️

PRMs empower LLMs to "think before answering", a key feature behind OpenAI's o1 launch just two weeks ago. 🚀

How? By combining step-wise reward models with tree search algorithms :)

We're open-sourcing the full recipe and sharing a detailed blog post 👇
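The post only names the idea, so here is a minimal, hypothetical Python illustration of PRM-guided beam search. A generator proposes candidate next reasoning steps, a process reward model scores every step of each partial solution, and only the best-scoring partial solutions are expanded. `toy_propose` and `toy_prm` are stand-ins for an LLM and a trained PRM. Aggregating step scores with `min()`, and the beam settings, are assumptions; taking the last step's score or the product are common alternatives.

```python
from typing import Callable, List, Tuple

# Hypothetical PRM: returns one score per reasoning step of a partial solution.
StepScorer = Callable[[str, List[str]], List[float]]
# Hypothetical generator: proposes candidate next steps for a partial solution.
StepProposer = Callable[[str, List[str]], List[str]]


def prm_beam_search(prompt: str, propose: StepProposer, prm: StepScorer,
                    beam_width: int = 2, max_steps: int = 3) -> List[str]:
    """Keep the `beam_width` partial solutions whose weakest step scores highest."""
    beams: List[List[str]] = [[]]
    for _ in range(max_steps):
        candidates: List[Tuple[float, List[str]]] = []
        for steps in beams:
            for step in propose(prompt, steps):
                new_steps = steps + [step]
                # Aggregate per-step rewards with min(); "last" or product are alternatives.
                candidates.append((min(prm(prompt, new_steps)), new_steps))
        if not candidates:
            break
        candidates.sort(key=lambda c: c[0], reverse=True)  # stable: ties keep proposal order
        beams = [steps for _, steps in candidates[:beam_width]]
    return beams[0]


# Toy stand-ins: the "correct" solution is the step sequence a, b, c.
TARGET = ["a", "b", "c"]


def toy_propose(prompt: str, steps: List[str]) -> List[str]:
    return ["a", "b", "c"]


def toy_prm(prompt: str, steps: List[str]) -> List[float]:
    # 1.0 for a step that matches the target at its position, else 0.0.
    return [1.0 if i < len(TARGET) and s == TARGET[i] else 0.0 for i, s in enumerate(steps)]


print(prm_beam_search("spell abc", toy_propose, toy_prm))  # ['a', 'b', 'c']
```

Scoring each step, rather than only the final answer, lets the search prune a branch as soon as one of its steps looks wrong.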

How about doing the same next year?

bsky.app/profile/benb...

>> huggingface.co/docs/trl/mai...

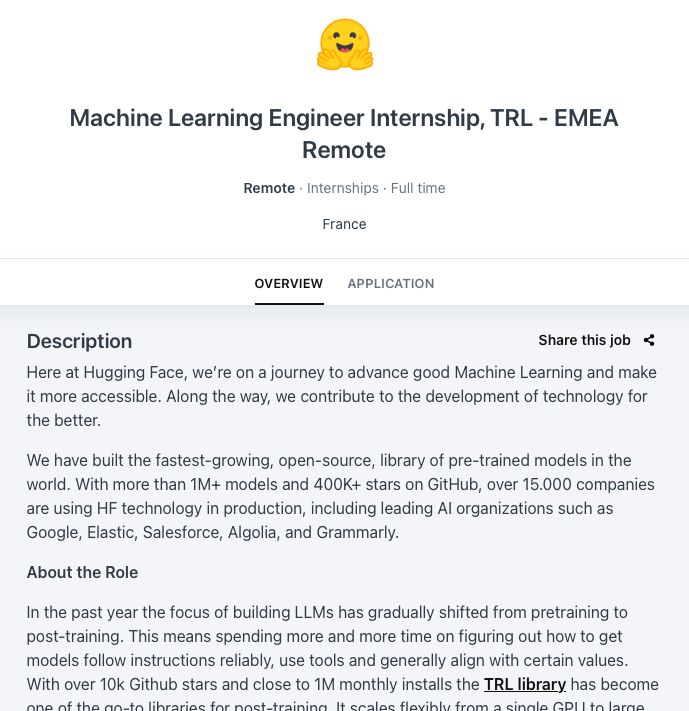

🧑‍💻 Fully remote

🤯 Exciting subjects

🌍 Anywhere in the world

🤸🏻 Flexible working hours

Link to apply in comment 👇

US: apply.workable.com/huggingface/...

EMEA: apply.workable.com/huggingface/...

🤔 Let me know if you would like a dedicated course on TRL basics.

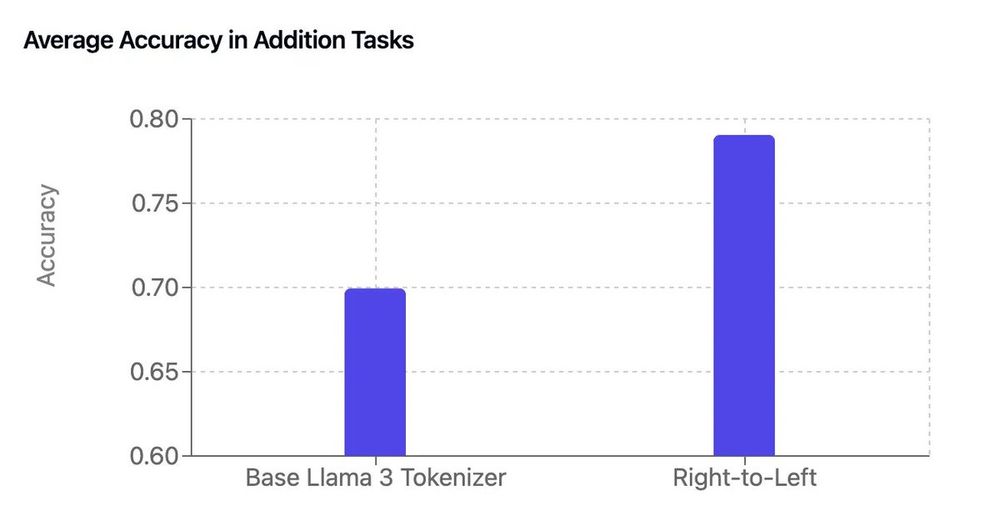

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]

This blog post from @muellerzr.bsky.social saved my day.

muellerzr.github.io/til/argparse...

thanks @philschmid.bsky.social for the finetuning code

thanks @huggingface.bsky.social for the smol model

thanks @qgallouedec.bsky.social and friends for TRL

The magic? Versioning chunks, not files, giving rise to:

🧠 Smarter storage

⏩ Faster uploads

🚀 Efficient downloads

Curious? Read the blog and let us know how it could help your workflows!
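To make "versioning chunks, not files" concrete, here is a toy content-defined chunking and deduplication sketch in Python. Boundaries are chosen from the content itself, so a small insertion only changes the chunks around it and the rest are reused. The gear-style rolling hash, the chunk-size limits, and the `ChunkStore` class are illustrative assumptions, not the storage backend the blog describes.

```python
import hashlib
import random
from typing import Dict, List

# Gear table: one pseudo-random 64-bit value per byte value (fixed seed for reproducibility).
_rng = random.Random(0)
GEAR = [_rng.getrandbits(64) for _ in range(256)]
MASK64 = (1 << 64) - 1


def cdc_chunks(data: bytes, mask: int = 63, min_size: int = 16, max_size: int = 256) -> List[bytes]:
    """Split data where a rolling hash of recent bytes hits a pattern (content-defined chunking)."""
    chunks: List[bytes] = []
    start, h = 0, 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & MASK64  # gear-style rolling hash
        size = i - start + 1
        if (size >= min_size and (h & mask) == 0) or size >= max_size:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])  # trailing partial chunk
    return chunks


class ChunkStore:
    """Hypothetical store that keeps each distinct chunk once, keyed by its SHA-256."""

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}

    def put(self, data: bytes) -> List[str]:
        ids = []
        for chunk in cdc_chunks(data):
            cid = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(cid, chunk)  # only unseen chunks add storage
            ids.append(cid)
        return ids  # a file version is just a list of chunk ids

    def get(self, ids: List[str]) -> bytes:
        return b"".join(self.chunks[cid] for cid in ids)


rng = random.Random(1)
v1 = bytes(rng.getrandbits(8) for _ in range(4096))
v2 = v1[:1000] + b"EDIT" + v1[1000:]  # small insertion in the middle

store = ChunkStore()
store.put(v1)
before = len(store.chunks)
ids_v2 = store.put(v2)
print(f"v2 has {len(ids_v2)} chunks, only {len(store.chunks) - before} are new")
```

Fixed-size chunks would not work as well here. Inserting four bytes would shift every later chunk boundary, so nearly every chunk after the edit would look new.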
