Scientist | Statistician | Bayesian | Author of brms | Member of the Stan and BayesFlow development teams

Website: https://paulbuerkner.com

Opinions are my own

Huge thanks to:

• My supervisors @paulbuerkner.com @stefanradev.bsky.social @avehtari.bsky.social 👥

• The committee @ststaab.bsky.social @mniepert.bsky.social 📝

• The institutions @ellis.eu @unistuttgart.bsky.social @aalto.fi 🏫

• My wonderful collaborators 🧡

#PhDone 🎓

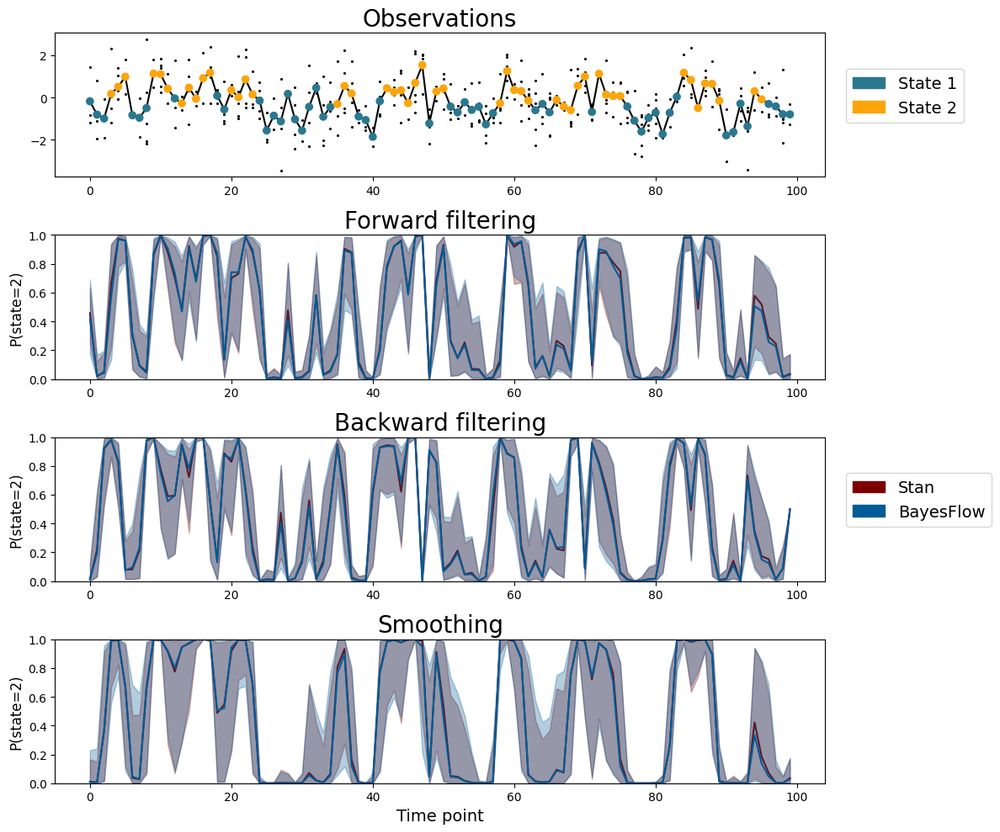

The amortized approximator from BayesFlow closely matches the results of expensive-but-trustworthy HMC with Stan.

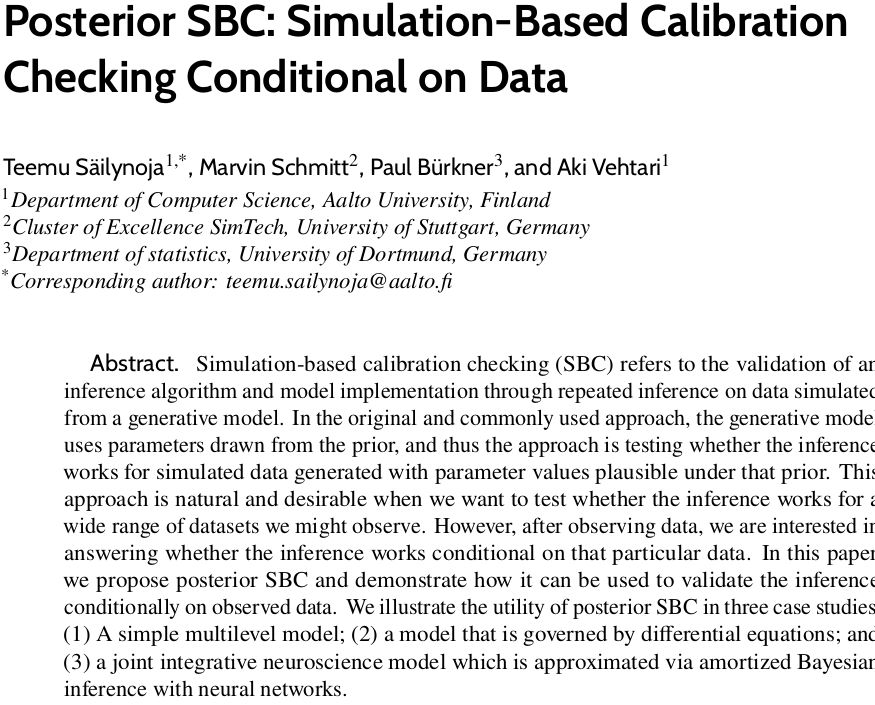

Check out the preprint and code by @kucharssim.bsky.social and @paulbuerkner.com👇

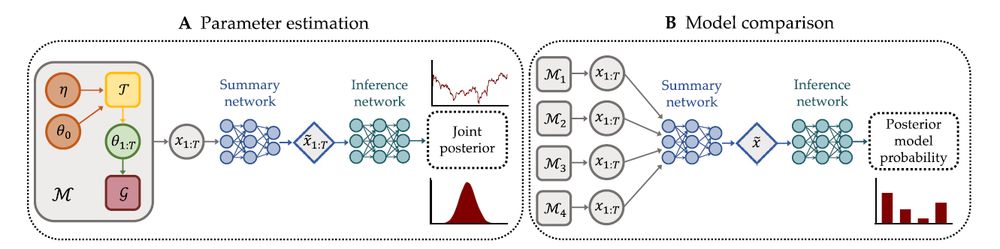

BayesFlow allows:

• Approximating the joint posterior of model parameters and mixture indicators

• Inferences for independent and dependent mixtures

• Amortization for fast and accurate estimation

📄 Preprint

💻 Code

arxiv.org/abs/2502.03279 1/7

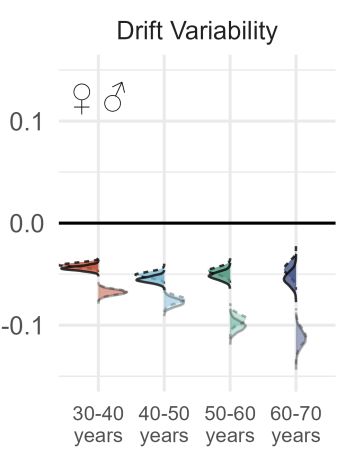

BayesFlow facilitated efficient inference for complex decision-making models, scaling Bayesian workflows to big data.

🔗Paper

Sign up to the seminar’s mailing list below to get the meeting link 👇

Including my alma mater, the University of Münster.

HT @thereallorenzmeyer.bsky.social nachrichten.idw-online.de/2025/01/10/h...

- checking the long tails (few long RTs make the tail estimation unwieldy)

- low initial values for ndt

- careful prior checks

- pathfinder estimation of initial values

Still, with increasing data, the chains get stuck.

It’s not a part of the process that can be skipped; it’s the entire point.

2️⃣ BayesFlow handles amortized parameter estimation in the SBI setting.

📣 Shoutout to @masonyoungblood.bsky.social & @sampassmore.bsky.social

📄 Preprint: osf.io/preprints/ps...

💻 Code: github.com/masonyoungbl...

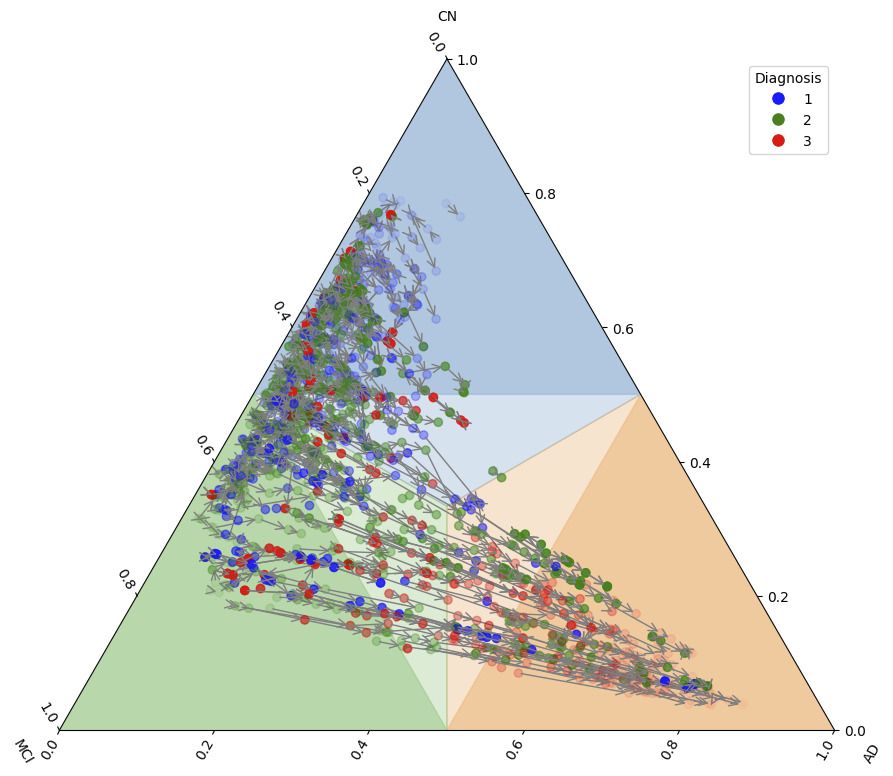

⋅ Joint estimation of stationary and time-varying parameters

⋅ Amortized parameter inference and model comparison

⋅ Multi-horizon predictions and leave-future-out CV

📄 Paper 1

📄 Paper 2

💻 BayesFlow Code

www.statnews.com/sponsor/2024...

I definitely like where it's heading!

Personal highlights: data explorer, command palette, help on hover + extensions 👇🏻📚

- adding lower/upper bounds to ordered vectors (removing positive ordered since it's achieved by lb=0)
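The ordered-vector item above can be illustrated with a minimal Python sketch. It assumes the usual exp-increment construction (first element lb + exp(y₁), each later element adds exp(y_k) to the previous one), which with lb = 0 is the construction used for positive-ordered vectors, so a separate positive-ordered type becomes redundant. The helper names `lower_bounded_ordered` and `log_abs_det_jacobian` are hypothetical; this is not Stan's actual source.

```python
import numpy as np


def lower_bounded_ordered(y, lb=0.0):
    """Hypothetical helper: map an unconstrained vector y to a strictly
    increasing vector whose entries all lie above lb."""
    y = np.asarray(y, dtype=float)
    # x[0] = lb + exp(y[0]); x[k] = x[k-1] + exp(y[k]) for k >= 1.
    # Every increment exp(y[k]) is positive, so x is ordered and above lb.
    return lb + np.cumsum(np.exp(y))


def log_abs_det_jacobian(y):
    """Hypothetical helper: log |det J| of the map above. J is lower
    triangular with diagonal exp(y[k]), so the log-determinant is sum(y).
    The shift by lb does not affect the Jacobian."""
    return float(np.sum(np.asarray(y, dtype=float)))
```

For example, `lower_bounded_ordered([0.0, 0.0, 0.0], lb=1.0)` gives `[2, 3, 4]`, and setting `lb=0.0` recovers the positive-ordered case.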

Highlights:

New constraints for stochastic matrices and zero-sum vectors

Easier user-defined constraints

Improved diagnostics

New distribution, beta_negative_binomial

In our new paper, we (Florence Bockting, @stefanradev.bsky.social and I) develop a method for expert prior elicitation using generative neural networks and simulation-based learning.

arxiv.org/abs/2411.15826

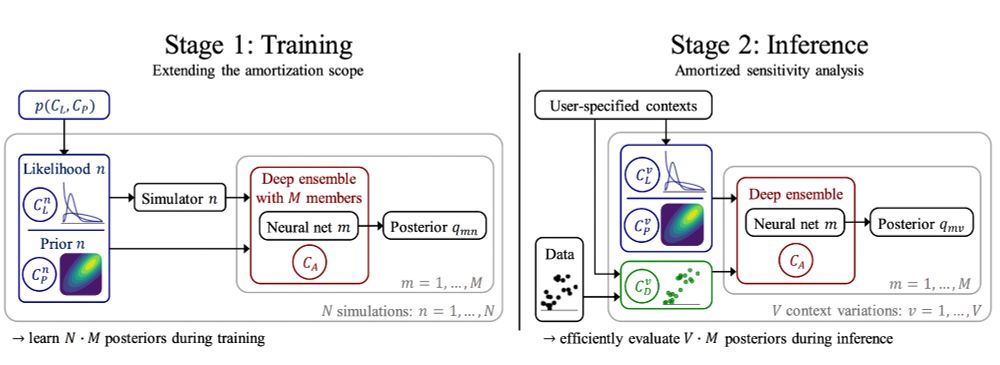

Sensitivity-aware amortized inference explores the iceberg:

⋅ Test alternative priors, likelihoods, and data perturbations

⋅ Deep ensembles flag misspecification issues

⋅ No model refits required during inference

🔗 openreview.net/forum?id=Kxt...

How it works: Write in markdown and use Quarto to convert it to html, pdf, epub, ...

I produce my books with Quarto (web + ebook + print version). But you can also use it for websites, reports, dashboards, ...

quarto.org

Pessimist: The cup is half empty

Frequentist: *takes a deep breath* The probability of observing this volume of water (or a more extreme one) if the cup were half full is larger than 5%, so I cannot reject the null hypothesis that the cup is half full.

Pessimist: The cup is half empty

Bayesian:

Pessimist: the cup is half empty

Comparative cognition researcher: I wonder if this animal will drop some stones into this cup

Pessimist: the cup is half empty

Developmentalist: even when I demonstrate that the taller cup holds the same amount of water as the wider cup, this kid thinks the tall one has more

Pessimist: the cup is half empty

Linguist: quantities are described with regard to a reference state; thus we can efficiently convey whether the cup was more recently full or empty
