http://nicolas-dufour.github.io

- 19x faster convergence ⚡

- 370x fewer FLOPs than FLUX-dev 📉

Video: youtube.com/live/DXQ7FZA...

Big thanks to the jury @dlarlus.bsky.social @ptrkprz.bsky.social @gtolias.bsky.social A. Efros & T. Karras

I was so in awe of the presentation that I even forgot to take pictures 😅

No more post-training alignment!

We integrate human alignment right from the start, during pretraining!

Results:

✨ 19x faster convergence ⚡

✨ 370x less compute 💻

🔗 Explore the project: nicolas-dufour.github.io/miro/

- 19x faster convergence ⚡

- 370x fewer FLOPs than FLUX-dev 📉

Read the thread for all the great details.

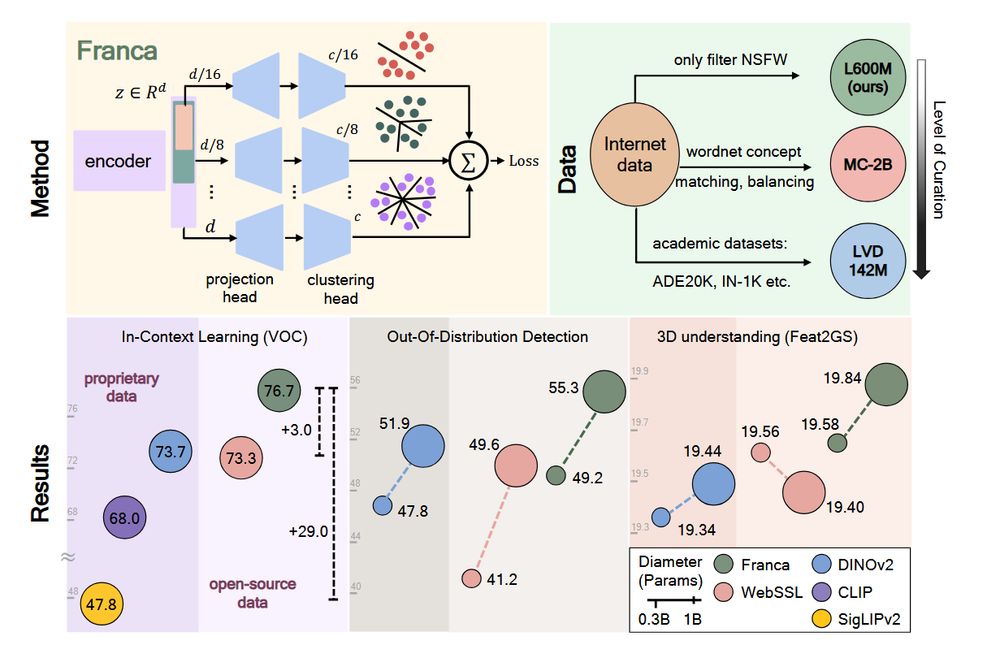

The main conclusion I draw from this work is that better pretraining, in particular by conditioning on better data, allows us to train SOTA models at a fraction of the cost.

Broadcast available at gdr-iasis.cnrs.fr/reunions/mod...

Do you think there would be people in for that? Do you think it would make for a nice competition?

Scale is a religion, and if you go against it, you're a heretic and you should burn, "despite [the reviewers'] final ratings".

But scale is still not necessary!

Side note: this is the first time I've seen a paper swing its reviews up (from 2,2,4,4 to 2,4,4,5) and still not get accepted. Strange days.

And what if I told you that the model was mostly trained on ImageNet, with a bit of artistic fine-tuning at 1024 resolution? Still really bad?

We got lost in latent space. Join us 👇

📜: arxiv.org/abs/2508.03934

Short summary 👇

It outperforms CLIP-like models (SigLIP 2, fine-tuned StreetCLIP)… and that's shocking 🤯

Why? CLIP models have an innate advantage: they literally learn place names together with images. DINOv3 doesn't.

If you want to learn more: nicolas-dufour.github.io/plonk

www.youtube.com/watch?v=s5oH...

👀 But NeurIPS now requires at least one author to attend in San Diego or Mexico (and not just virtually as before). This is detrimental to many. Why not allow presenting at EurIPS or online?

1/4

EurIPS is a community-organized conference, endorsed by @neuripsconf.bsky.social and @nordicair.bsky.social and co-developed by @ellis.eu, where you can present accepted NeurIPS 2025 papers.

eurips.cc

(fun fact: there is no hot-air balloon emoji, but @loicland.bsky.social made a TikZ macro for it! 😅)

Cc @loicland.bsky.social @davidpicard.bsky.social @vickykalogeiton.bsky.social