More info about us and our projects ⬇️

🍼 Falling birth rate: must France start having babies again at all costs?

🚙 From its very beginnings, the electric car's future was doomed

🐙 An octopus that plays the piano?

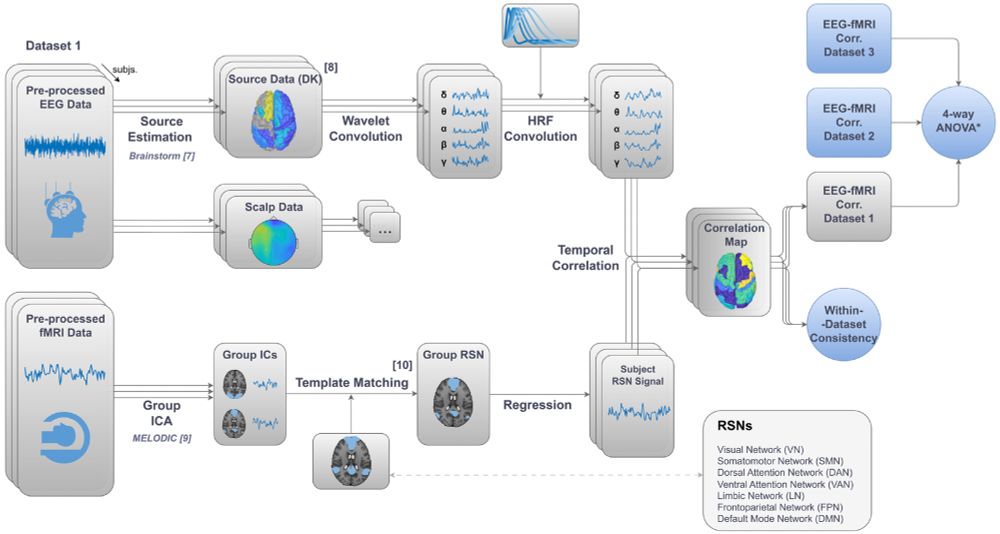

#compneuro #neuroskyence #EEG

Below, an overview by @scienmag.bsky.social

#compneuro #neuroskyence

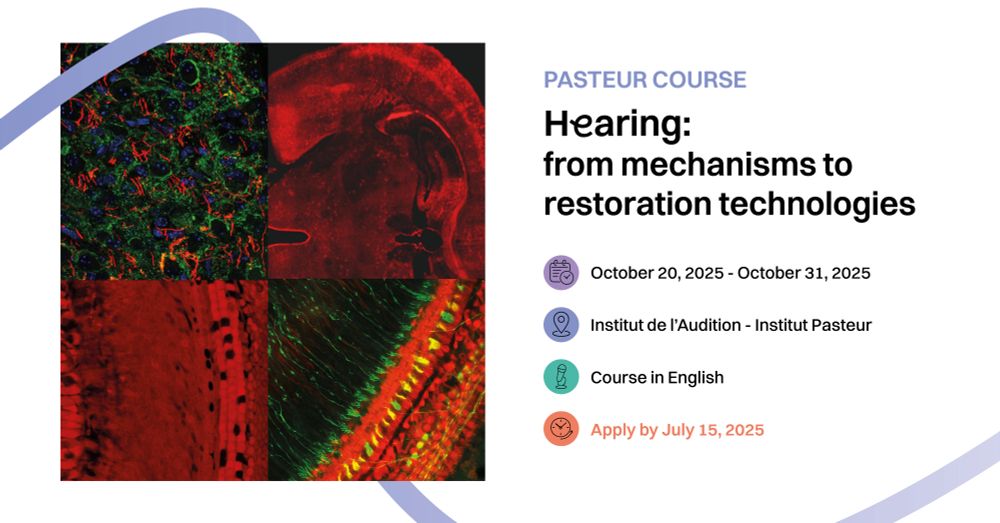

- #MyPhDattheInstitutPasteur

Meet Olesia, a PhD student at the Institut de l’Audition, who is exploring a novel approach to improving reading in dyslexic children: stimulating the brain with sounds.

More info in the comments 💡

Really excited that this collaboration is finally out! It shows that combining oscillatory dynamics with hierarchical predictive frameworks yields speech perception that is robust to temporal distortions, much like human listeners and more so than current ASR models.

🔓 rdcu.be/eImit

✒️S. Jhilal, @sissymarche.bsky.social, B. Thirion, B. Soudrie, A-L Giraud & E. Mandonnet

1) Neural oscillatory dynamics in temporal processing of speech

2) Algorithms for speech intelligibility in cochlear implants

DM me if interested!

www.pasteur.fr/ppu

Consistency of resting-state correlations between fMRI networks and EEG band power

doi.org/10.1162/IMAG...

@bathellierlab.bsky.social

#Neuroscience

Final call for this advanced Pasteur Course on hearing science and auditory restoration.

🎯For Master’s students, PhD candidates, clinicians & hearing professionals.

Apply now 👉 bit.ly/HearingCourse

People with tinnitus often report attention difficulties, so we had them perform several tasks (n=200). After correcting for comorbidities, we observed no deficits in selective attention or executive function, but participants showed poorer control of arousal.

New brand platform, new visual identity, new tagline: "Pour chaque vie, la science agit" ("For every life, science acts") 💙

#InstitutPasteur