IssueBench, our attempt to fix this, is accepted at TACL, and I will be at #EMNLP2025 next week to talk about it!

New results 🧵

We just released IssueBench – the largest, most realistic benchmark of its kind – to answer this question more robustly than ever before.

Long 🧵with spicy results 👇

Please share with your networks. I am the search chair and happy to answer questions!

When people use these tools do they tend to stay on the platform instead of being referred elsewhere? Could this lead to the end of the open web? #pacss2025 #polnet2025

Despite recent results, SAEs aren't dead! They can still be useful to mech interp, and also much more broadly: across FAccT, computational social science, and ML4H. 🧵

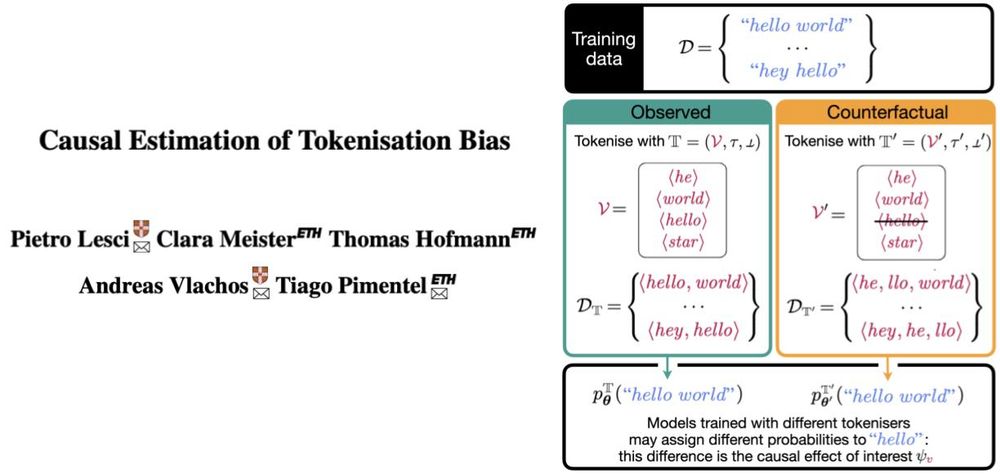

Definitely an approach that more papers talking about effects can incorporate to better clarify what phenomenon they are studying.

We show that linguistic generalization in language models can be due to underlying analogical mechanisms.

Shoutout to my amazing co-authors @weissweiler.bsky.social, @davidrmortensen.bsky.social, Hinrich Schütze, and Janet Pierrehumbert!

What generalization mechanisms shape the language skills of LLMs?

Prior work has claimed that LLMs learn language via rules.

We revisit the question and find that superficially rule-like behavior of LLMs can be traced to underlying analogical processes.

🧵

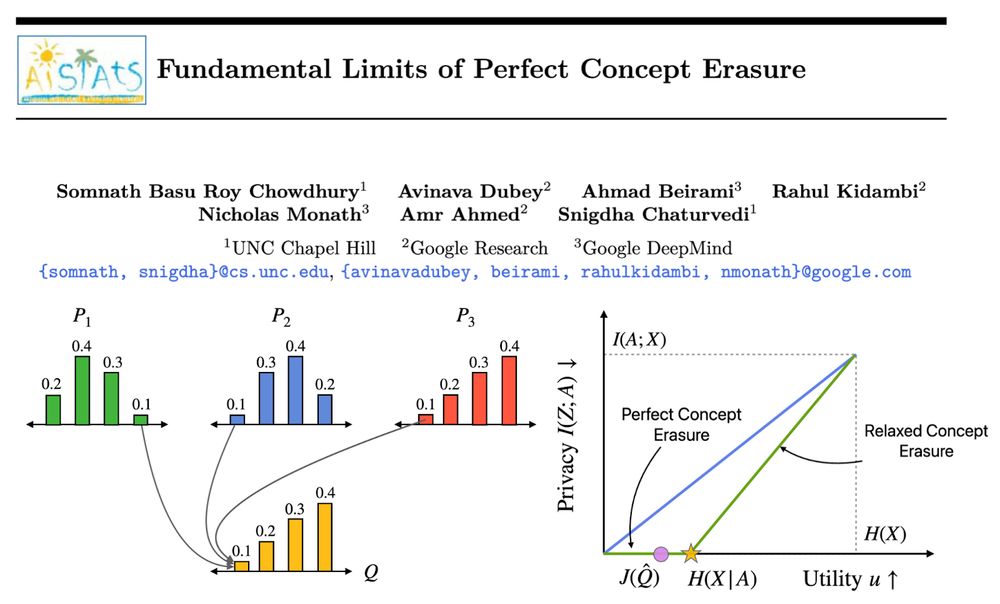

Our method, Perfect Erasure Functions (PEF), erases concepts perfectly from LLM representations. We analytically derive PEF w/o parameter estimation. PEFs achieve a Pareto-optimal erasure-utility tradeoff backed w/ theoretical guarantees. #AISTATS2025 🧵

Join our team @CSMaP_NYU as a Research Engineer and help us build the tools that power cutting-edge research on the digital public sphere.

🚀 Apply now!

apply.interfolio.com/165833

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

🆕 paper: dl.acm.org/doi/10.1145/...

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

From a simple observational measure of overthinking, we introduce Thought Terminator, a black-box, training-free decoding technique where RMs set their own deadlines and follow them

arxiv.org/abs/2504.13367

What's driving performance: architecture or data?

To find out, we pretrained ModernBERT on the same dataset as CamemBERTaV2 (a DeBERTaV3 model) to isolate architecture effects.

Here are our findings:

Some interesting work from co-authors and myself on this problem (short thread):

- arxiv.org/abs/2403.03814

- aclanthology.org/2024.finding...

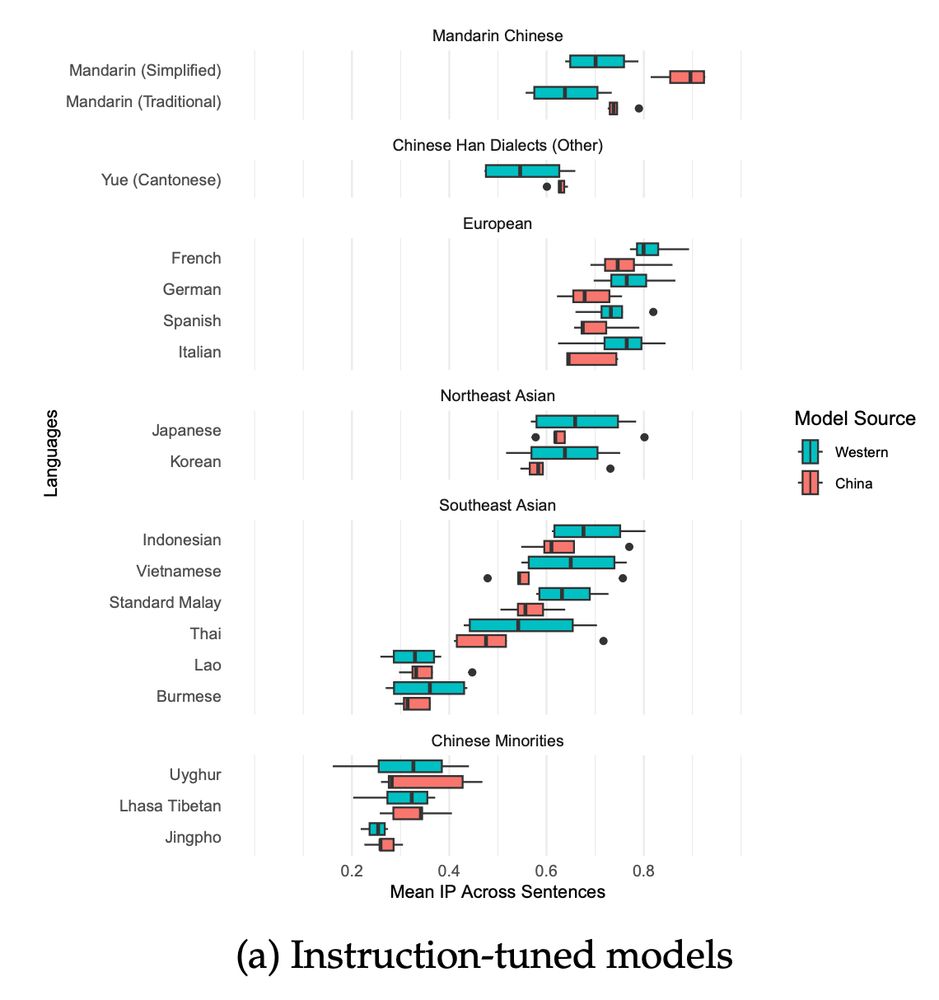

📜Paper: arxiv.org/abs/2504.07072

💿Data: hf.co/datasets/Coh...

🌐Website: cohere.com/research/kal...

Huge thanks to everyone involved! This was a big collaboration 👏

Unso Jo and @dmimno.bsky.social . Link to paper: arxiv.org/pdf/2504.00289

🧵

We create ONERULER 💍, a multilingual long-context benchmark that allows for nonexistent needles. Turns out NIAH isn't so easy after all!

Our analysis across 26 languages 🧵👇

Website: actionable-interpretability.github.io

Deadline: May 9

> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!
