Postdoc at the Technion. PhD from Politecnico di Milano.

https://muttimirco.github.io

Only one paper to review at a time and <= 6 per year; reviewers report greater satisfaction than when reviewing for conferences!

Register now 👇 and join us in Tübingen for 3 days (17th-19th September) full of inspiring talks, posters and many social activities to push the boundaries of the RL community!

Thought I’d share a few ICML papers on this direction. Let’s dive in👇

But first… what is convex RL?

🧵

1/n

In our latest work at @icmlconf.bsky.social, we reimagine bandit algorithms to get *efficient* and *interpretable* exploration.

A 🧵 below

1/n

Kudos to the awesome students Vincenzo and @ricczamboni.bsky.social for their work under the wise supervision of Marcello.

Next week we will present our paper “Enhancing Diversity in Parallel Agents: A Maximum Exploration Story” with V. De Paola, @mircomutti.bsky.social and M. Restelli at @icmlconf.bsky.social!

(1/N)

If we think carefully, we are (implicitly) making three claims.

#FoundationsOfReinforcementLearning #sneakpeek

- researchers write papers no one reads

- reviewers don't have time to review, shamed to coauthors, use LLMs instead of reading

- authors try to fool said LLMs with prompt injection

- evaling researchers based on # of papers (no time to read)

Dystopic.

When? September 17-19, 2025.

More news to come soon, stay tuned!

The recording is available in case you missed it: youtu.be/pNos7AHGMXw

If you're interested but cannot join, here's the arXiv link: arxiv.org/abs/2504.04505

Joint work with Jeongyeol, Shie, and @aviv-tamar.bsky.social

In two weeks, April 8, Mirco Mutti will talk about "A Classification View on Meta Learning Bandits"

- Summary

- Comment

- Evaluation

Curious about alternative arguments, as it looks like conferences are going in a different direction

Paper: www.arxiv.org/abs/2412.18907

Project page: sites.google.com/view/ec-diff...

Code: github.com/carl-qi/EC-D...

If you're interested, feel free to reach out. If you're not personally interested but know someone who might be, please let them know!

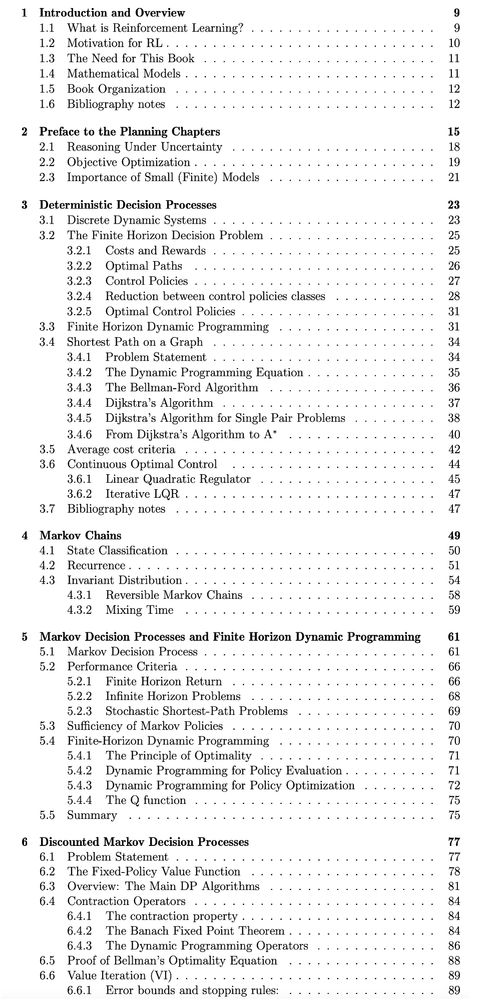

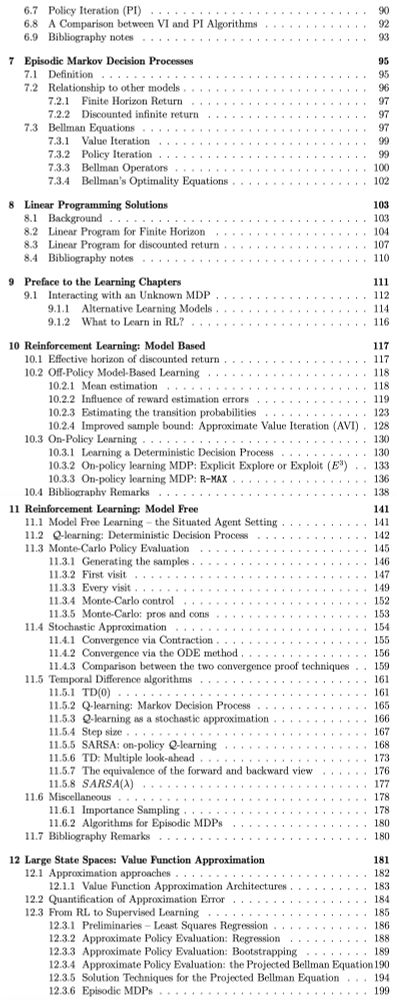

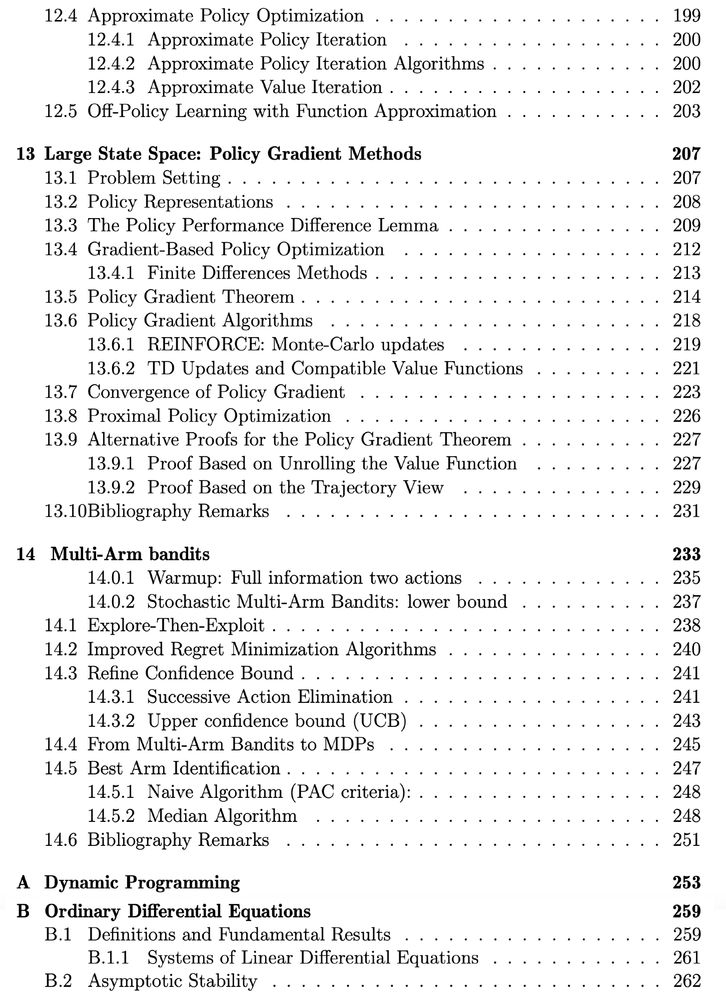

Check out our new book draft:

Reinforcement Learning - Foundations sites.google.com/view/rlfound...

W/ Shie Mannor & Yishay Mansour

This is a rigorous first course in RL, based on our teaching at TAU CS and Technion ECE.

go.bsky.app/3WPHcHg
