cset.georgetown.edu/publication/...

Identifying assumptions can help policymakers make informed, flexible decisions about AI under uncertainty.

In the latest @csetgeorgetown.bsky.social ETO AGORA roundup, four CSET experts dig into the Plan’s policy impact and what’s next for AI governance. eto.tech/blog/agora-a...

One interesting aspect is its statement that the federal government should withhold AI-related funding from states with "burdensome AI regulations."

This could be cause for concern.

eto.tech/blog/ai-laws... 🧵1/3

thehill.com/opinion/tech...

One crucial reason is that states play a critical role in building AI governance infrastructure.

Check out this new op-ed by @jessicaji.bsky.social, me, and @minanrn.bsky.social on this topic!

thehill.com/opinion/tech...

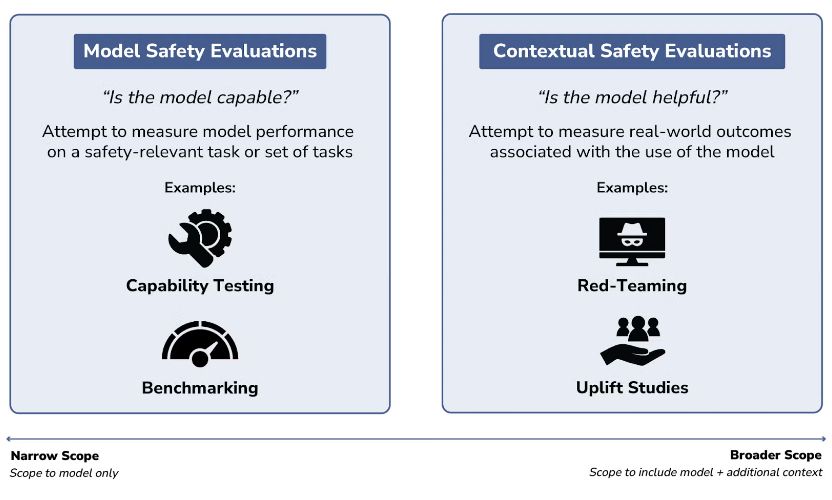

There's no perfect method, but safety evaluations are the best tool we have.

That said, different evals answer different questions about a model!

Effectively evaluating AI models is more crucial than ever. But how do AI evaluations actually work?

In their new explainer, @jessicaji.bsky.social, @vikramvenkatram.bsky.social & @stephbatalis.bsky.social break down the different fundamental types of AI safety evaluations.

cset.georgetown.edu/publication/...

For the National Interest, @jack-corrigan.bsky.social and I discuss a potential change that could benefit public access to medical drugs.

nationalinterest.org/blog/techlan...

CSET's @miahoffmann.bsky.social & @ojdaniels.bsky.social have a new piece out for @techpolicypress.bsky.social.

Read it now 👇

cset.georgetown.edu/publication/...

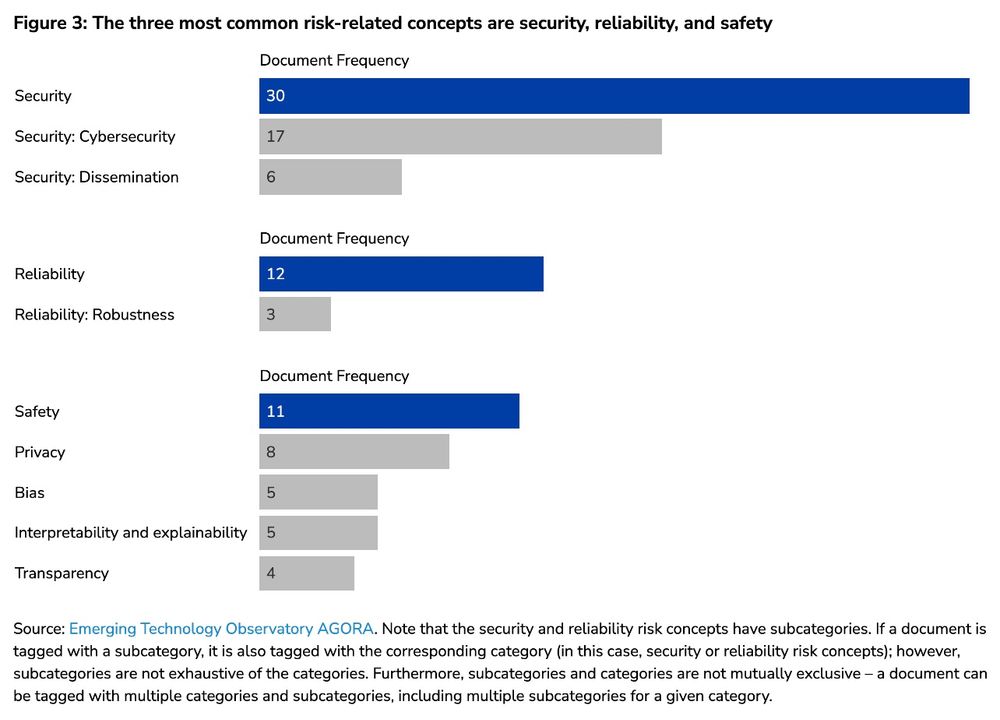

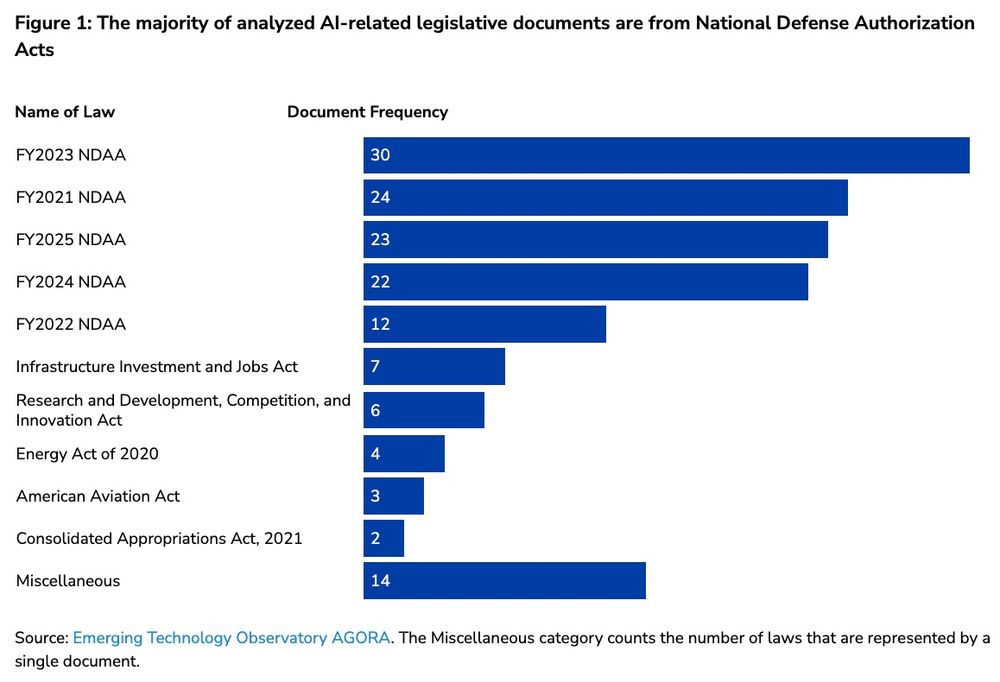

Led by @minanrn.bsky.social and Christian Schoeberl!

@csetgeorgetown.bsky.social

cset.georgetown.edu/publication/...

A new article from @miahoffmann.bsky.social, @minanrn.bsky.social, and @ojdaniels.bsky.social.

CSET is looking for a Research Fellow to analyze topics related to the development, deployment, and operations of AI & ML tools in the national security space.

Interested or know someone who would be? Learn more and apply 👇 cset.georgetown.edu/job/research...

www.gzeromedia.com/gzero-ai/5-a...
