AI agents pose new risks. Monitoring is essential to ensure effective oversight and intervention when needed. Our paper presents a framework for real-time failure detection that takes into account stakes, reversibility and affordances of agent actions.

Can the new EU AI Code of Practice change the global AI safety landscape?

As companies like Anthropic, OpenAI, and Google sign on, CSET’s @miahoffmann.bsky.social explores the code’s Safety and Security chapter. cset.georgetown.edu/article/eu-a...

One interesting aspect is its statement that the federal government should withhold AI-related funding from states with "burdensome AI regulations."

This could be cause for concern.

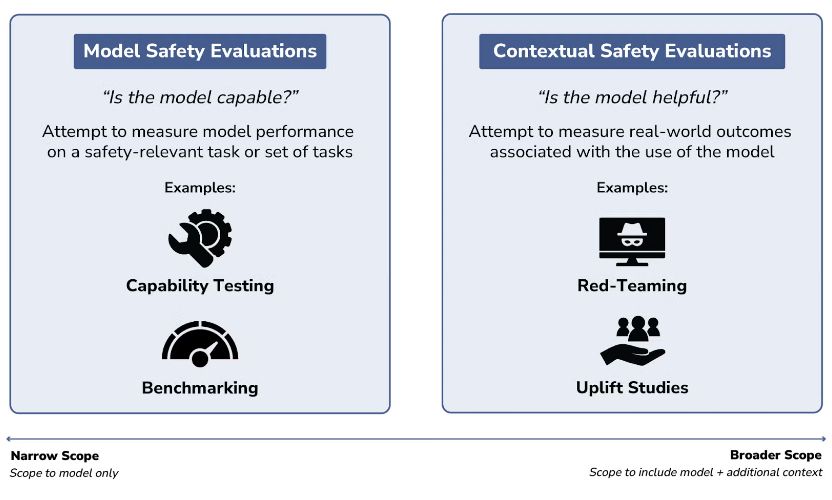

Effectively evaluating AI models is more crucial than ever. But how do AI evaluations actually work?

In their new explainer, @jessicaji.bsky.social, @vikramvenkatram.bsky.social & @stephbatalis.bsky.social break down the different fundamental types of AI safety evaluations.

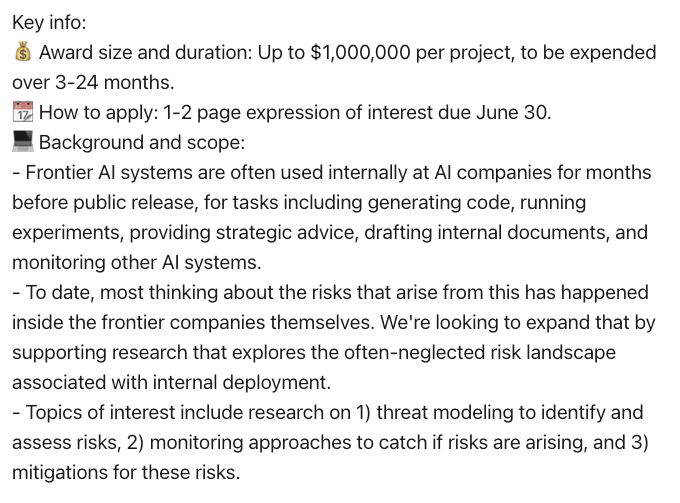

Internal deployments of frontier AI models are an underexplored source of risk. My program at @csetgeorgetown.bsky.social just opened a call for research ideas—EOIs due Jun 30.

Full details ➡️ cset.georgetown.edu/wp-content/u...

Summary ⬇️

CSET is looking for a Media Engagement Specialist to amplify our research. If you're a strategic communicator who can craft press releases, media pitches, & social content, apply by March 17, 2025! cset.georgetown.edu/job/media-en...

When: Tuesday, 3/25 at 12PM ET 📅

What’s next for AI red-teaming? And how do we make it more useful?

Join Tori Westerhoff, Christina Liaghati, Marius Hobbhahn, and CSET's @dr-bly.bsky.social & @jessicaji.bsky.social for a great discussion: cset.georgetown.edu/event/whats-...

CSET's @miahoffmann.bsky.social & @ojdaniels.bsky.social have a new piece out for @techpolicypress.bsky.social.

Read it now 👇

We’re hiring a software engineer to support @emergingtechobs.bsky.social. Help build high-quality public tools and datasets to inform critical decisions on emerging tech issues.

Interested or know someone who would be? Learn more and apply 👇 cset.georgetown.edu/job/software...

A new article from @miahoffmann.bsky.social, @minanrn.bsky.social, and @ojdaniels.bsky.social.

If you answered Yes to any of these, check out our Starter Pack and follow my brilliant colleagues working on these topics! bsky.app/starter-pack...

There are many steps in the pathway to biological harm, including risks posed by AI. CSET Fellow @stephbatalis.bsky.social offers a suite of corresponding policy and governance tools to help mitigate biorisk.

Read more here 👇 cset.georgetown.edu/publication/...
