hannamw.github.io

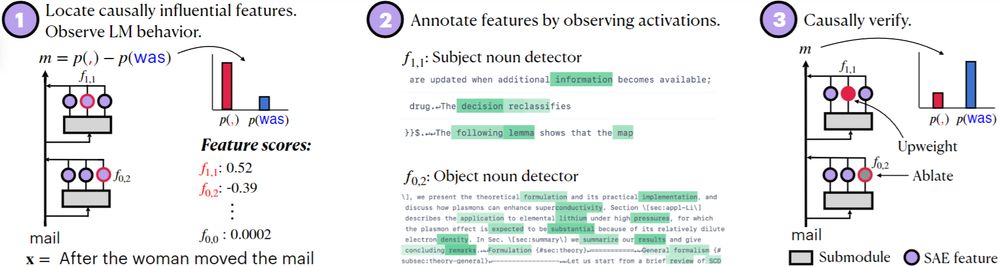

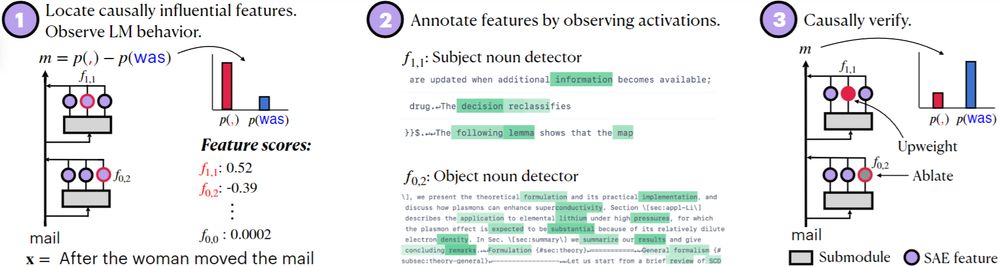

In a new paper, @amuuueller.bsky.social and I use mech interp tools to study how LMs process structurally ambiguous sentences. We show LMs rely on both syntactic & spurious features! 1/10

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

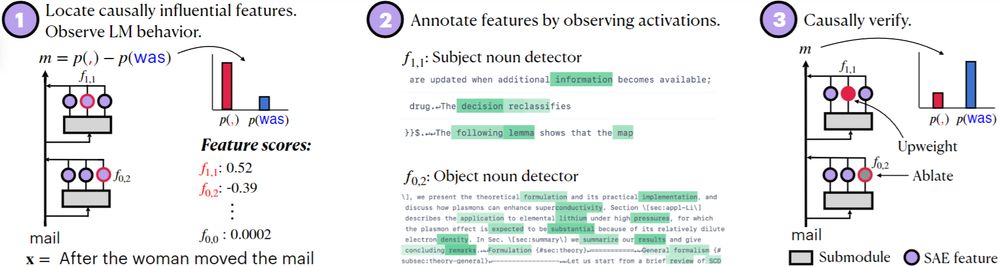

Do LLMs show similar mechanisms? @michaelwhanna.bsky.social and I investigate: arxiv.org/abs/2412.05353

LLMs can hallucinate - but did you know they can do so with high certainty even when they know the correct answer? 🤯

We find those hallucinations in our latest work with @itay-itzhak.bsky.social, @fbarez.bsky.social, @gabistanovsky.bsky.social and Yonatan Belinkov

The Re-Align Workshop is coming back to #ICLR2025

Our CfP is up! Come share your representational alignment work at our interdisciplinary workshop at @iclr-conf.bsky.social

Deadline is 11:59 pm AOE on Feb 3rd

representational-alignment.github.io

I'm hiring a fully funded PhD student to work on mechanistic interpretability at @uva-amsterdam.bsky.social. If you're interested in reverse engineering modern deep learning architectures, please apply: vacatures.uva.nl/UvA/job/PhD-...
