Research in physics-inspired and geometric deep learning.

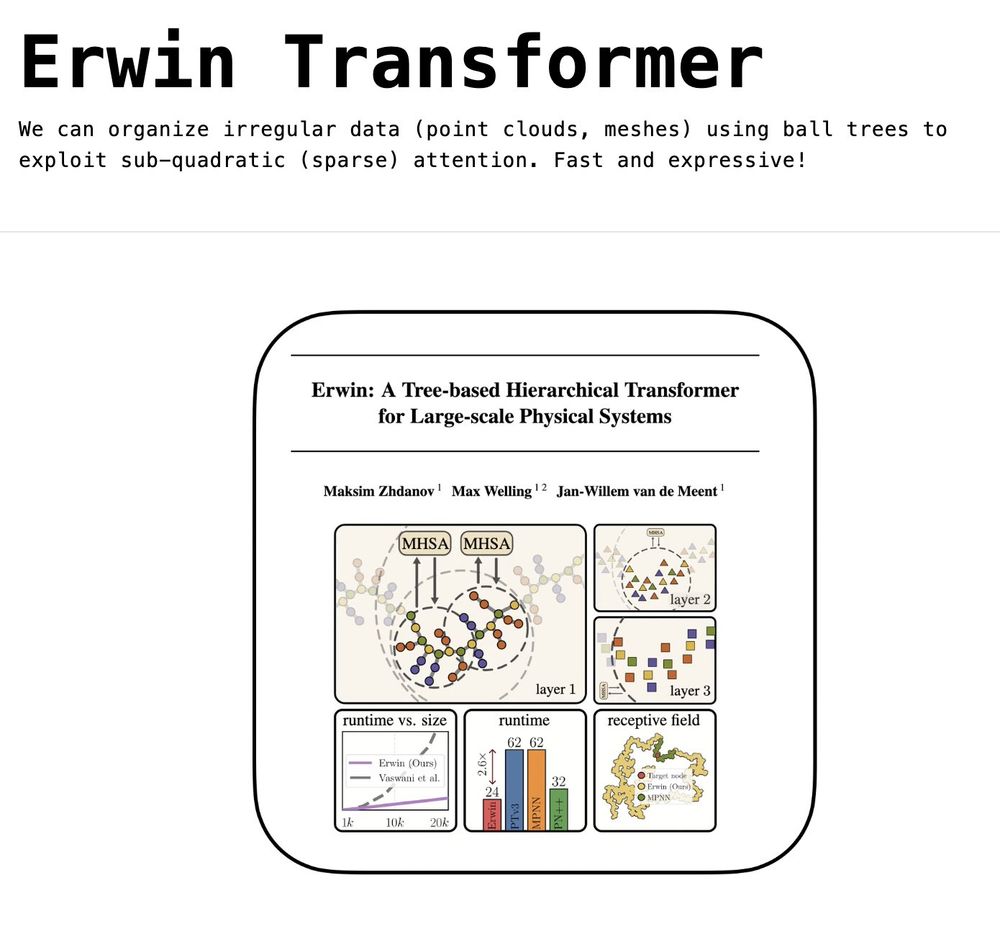

I wrote about our recent work on using hierarchical trees to enable sparse attention over irregular data (point clouds, meshes): Erwin Transformer, accepted to ICML 2025

blog: maxxxzdn.github.io/blog/erwin/

paper: arxiv.org/abs/2502.17019

Compressed version in the thread below:

joint work with @wellingmax.bsky.social and @jwvdm.bsky.social

preprint: arxiv.org/abs/2502.17019

code: github.com/maxxxzdn/erwin

1/N 🧵
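To make "hierarchical trees enable sparse attention" concrete, here is a minimal sketch. It is not the Erwin implementation. It assumes a simple ball tree built by recursive median splits along the widest axis, plus plain single-head dot-product attention (features reused as queries, keys and values) restricted to each leaf ball. `build_ball_tree` and `ball_attention` are hypothetical names. Every ball holds at most `leaf_size` points, so the number of attention scores drops from n² to at most n · leaf_size.

```python
import math
import random
from typing import List, Sequence

Point = Sequence[float]


def build_ball_tree(points: List[Point], leaf_size: int) -> List[List[int]]:
    """Hypothetical helper: split point indices at the median of the widest axis
    until every leaf ("ball") holds at most leaf_size points."""
    if leaf_size < 1:
        raise ValueError("leaf_size must be >= 1")

    def split(idx: List[int]) -> List[List[int]]:
        if len(idx) <= leaf_size:
            return [idx]
        dims = len(points[idx[0]])
        # Axis with the largest spread among the points in this node.
        spreads = [
            max(points[i][d] for i in idx) - min(points[i][d] for i in idx)
            for d in range(dims)
        ]
        axis = spreads.index(max(spreads))
        # Median split along that axis; the two halves become child nodes.
        ordered = sorted(idx, key=lambda i: points[i][axis])
        mid = len(ordered) // 2
        return split(ordered[:mid]) + split(ordered[mid:])

    return split(list(range(len(points))))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def ball_attention(features: List[List[float]], balls: List[List[int]]) -> List[List[float]]:
    """Single-head dot-product attention where each point only attends to points
    in its own ball (features double as queries, keys and values)."""
    dim = len(features[0])
    scale = 1.0 / math.sqrt(dim)
    out: List[List[float]] = [[] for _ in features]
    for ball in balls:
        for i in ball:
            scores = [scale * sum(a * b for a, b in zip(features[i], features[j])) for j in ball]
            weights = softmax(scores)
            out[i] = [sum(w * features[j][k] for w, j in zip(weights, ball)) for k in range(dim)]
    return out


# Usage: 64 random 3D points and balls of 8 give 8 balls,
# i.e. 64 * 8 score computations instead of 64 * 64.
random.seed(0)
cloud = [[random.random() for _ in range(3)] for _ in range(64)]
balls = build_ball_tree(cloud, leaf_size=8)
updated = ball_attention(cloud, balls)
```

Nearby points land in the same ball, so attention stays local and cheap. This sketch has no interaction between balls at all; how the actual model handles that is described in the paper.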

Core components of NSA:

• Dynamic hierarchical sparse strategy

• Coarse-grained token compression

• Fine-grained token selection

1/N 🧵
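The compression and selection components listed above can be illustrated with a toy single-query sketch under loud assumptions. Block means stand in for whatever learned compression NSA actually uses. The compressed blocks are scored against the query, and only tokens in the `top_k` highest-scoring blocks get full attention. Anything else in the real NSA design (for example how its branches are combined) is left out. `compress_blocks` and `sparse_attention` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def compress_blocks(keys: List[List[float]], block_size: int) -> List[List[float]]:
    """Coarse-grained compression (assumed): one mean key per contiguous block."""
    compressed = []
    for start in range(0, len(keys), block_size):
        block = keys[start:start + block_size]
        compressed.append([sum(col) / len(block) for col in zip(*block)])
    return compressed


def sparse_attention(
    query: List[float],
    keys: List[List[float]],
    values: List[List[float]],
    block_size: int,
    top_k: int,
) -> Tuple[List[float], List[int]]:
    """Score compressed blocks, keep the top_k blocks, then attend to their raw tokens."""
    scale = 1.0 / math.sqrt(len(query))
    coarse = compress_blocks(keys, block_size)
    block_scores = [scale * dot(query, c) for c in coarse]
    # Fine-grained selection: which blocks survive depends on the query (dynamic sparsity).
    ranked = sorted(range(len(coarse)), key=lambda b: block_scores[b], reverse=True)
    chosen = sorted(ranked[:top_k])
    token_ids = [
        t for b in chosen for t in range(b * block_size, min((b + 1) * block_size, len(keys)))
    ]
    weights = softmax([scale * dot(query, keys[t]) for t in token_ids])
    out = [sum(w * values[t][d] for w, t in zip(weights, token_ids)) for d in range(len(values[0]))]
    return out, chosen


# Usage: 8 tokens in 4 blocks of 2; the query [1, 0] matches block 1 best.
keys = [[0, 1], [0, 1], [5, 0], [5, 0], [0, 1], [0, 1], [-5, 0], [-5, 0]]
values = [[float(t)] for t in range(8)]
out, chosen = sparse_attention([1.0, 0.0], keys, values, block_size=2, top_k=1)
print(chosen, out)  # [1] [2.5]
```

With `top_k` equal to the number of blocks, the sketch reduces to ordinary dense attention. Smaller `top_k` trades exactness for cost.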

Details below 👇

Deadlines:

- Postdoc: Jan 5th (Sunday)

- PhD: Jan 7th (Tuesday)

More info below. Please share with researchers and students who might be interested in joining us!

wired: trees

Yesterday we kicked off the holidays with a festive group dinner and a fun Secret Santa exchange. 🎅

Wishing everyone a restful and joyful winter break and a happy new year! ❄️💫

I'm hiring a fully funded PhD student to work on mechanistic interpretability at @uva-amsterdam.bsky.social. If you're interested in reverse engineering modern deep learning architectures, please apply: vacatures.uva.nl/UvA/job/PhD-...

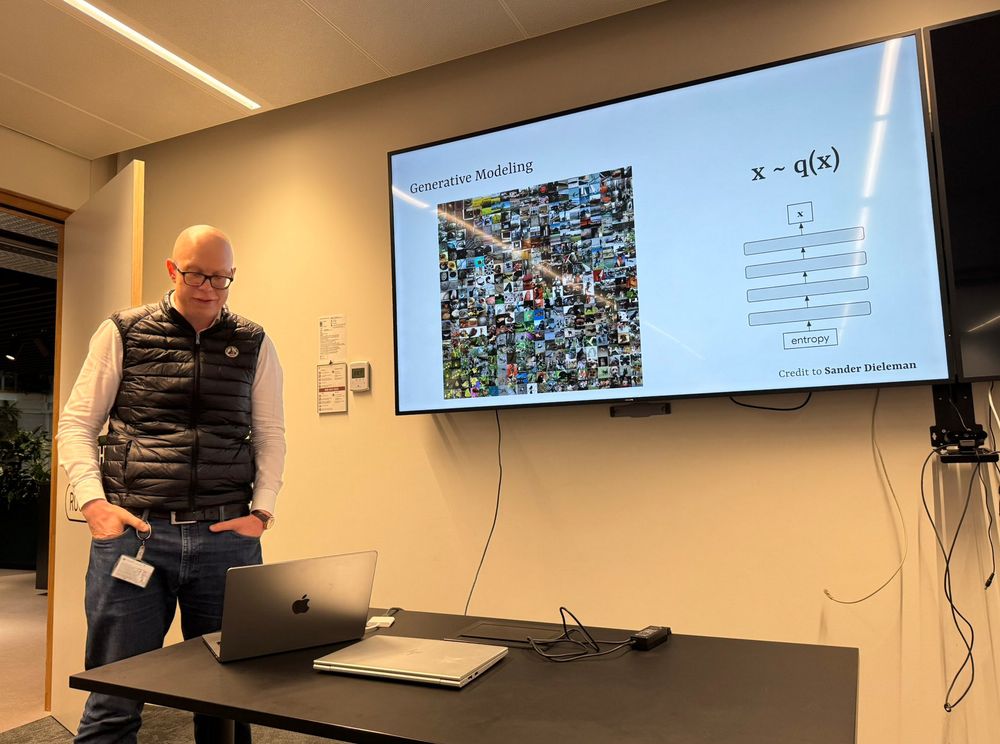

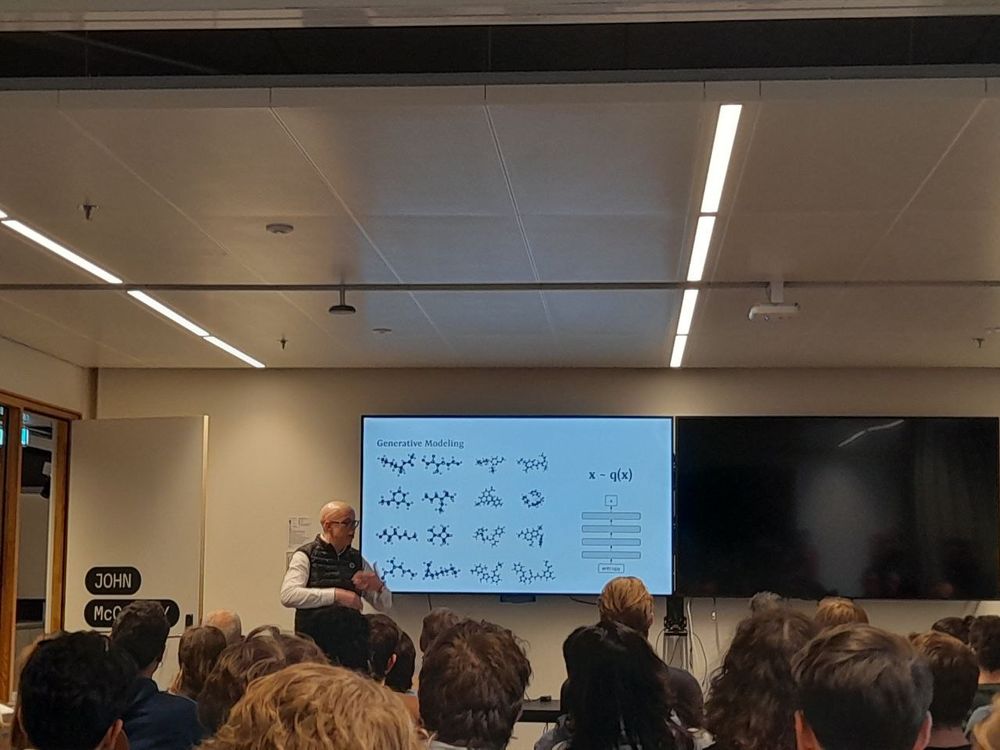

Submissions include generative modelling, AI4Science, geometric deep learning, reinforcement learning and early exiting. See the thread for the full list!

🧵1 / 12

I am also *hiring* for a postdoc position on data-efficient surrogate models for fluid dynamics. Come talk to me if you are on the market!

The keynote was by @canaesseth.bsky.social, who talked about "Diffusion, Flows and other stories", presenting his 5 papers accepted at NeurIPS! 💥

Julia will be presenting the paper at LoG on Thursday as a spotlight oral, and also at the NeurReps Workshop at NeurIPS next month.

📄: arxiv.org/abs/2410.20516

💻: github.com/smsharma/eqn...

With this starter pack you can easily connect with us and keep up to date with all the members' research and news 🦋

go.bsky.app/8EGigUy

Looking forward to sharing our research here on 🦋!