Research in physics-inspired and geometric deep learning.

you can find the code here: github.com/maxxxzdn/erwin

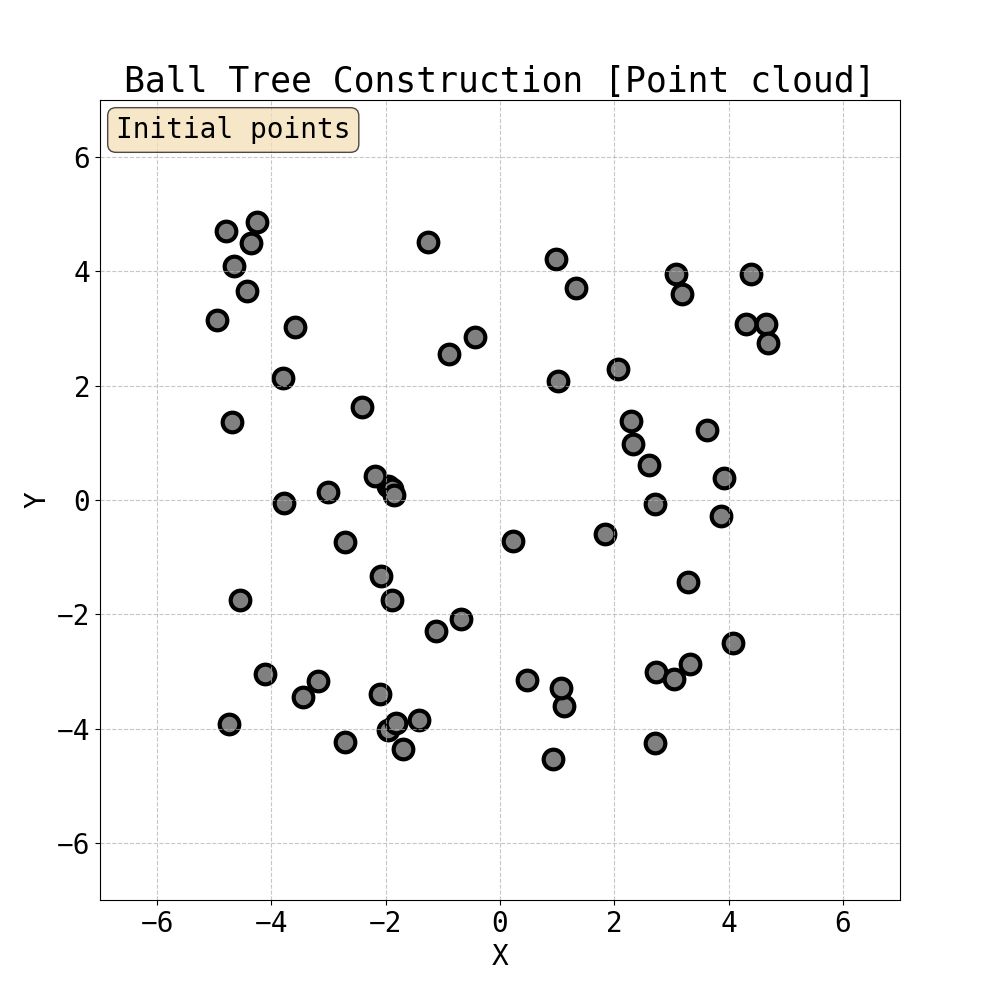

and here is a cute visualisation of Ball Tree building:

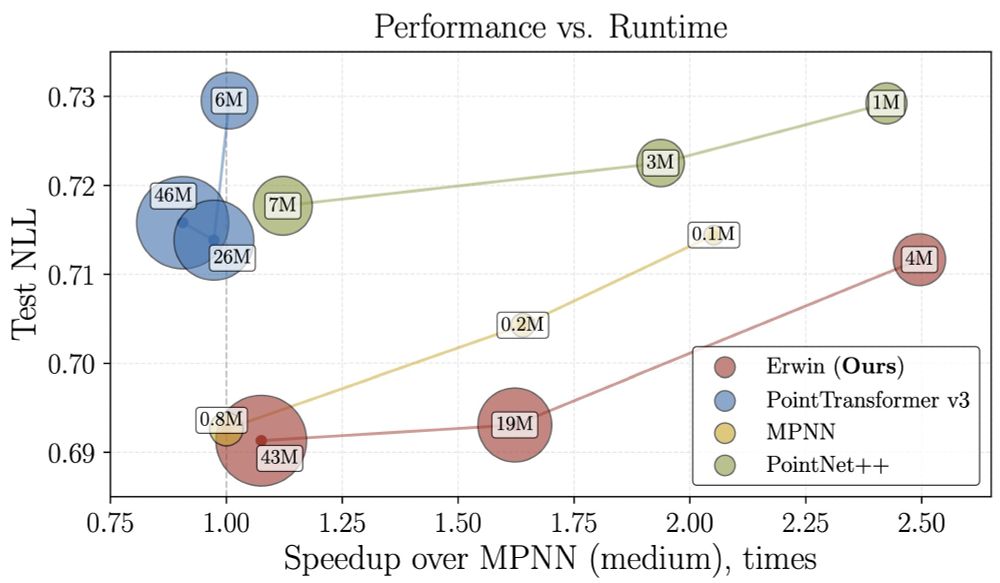

The key insight is that by using Erwin, we can afford larger bottleneck sizes while maintaining efficiency.

Stay tuned for updates!

Accepted to Long-Context Foundation Models at ICML 2025!

paper: arxiv.org/abs/2506.12541

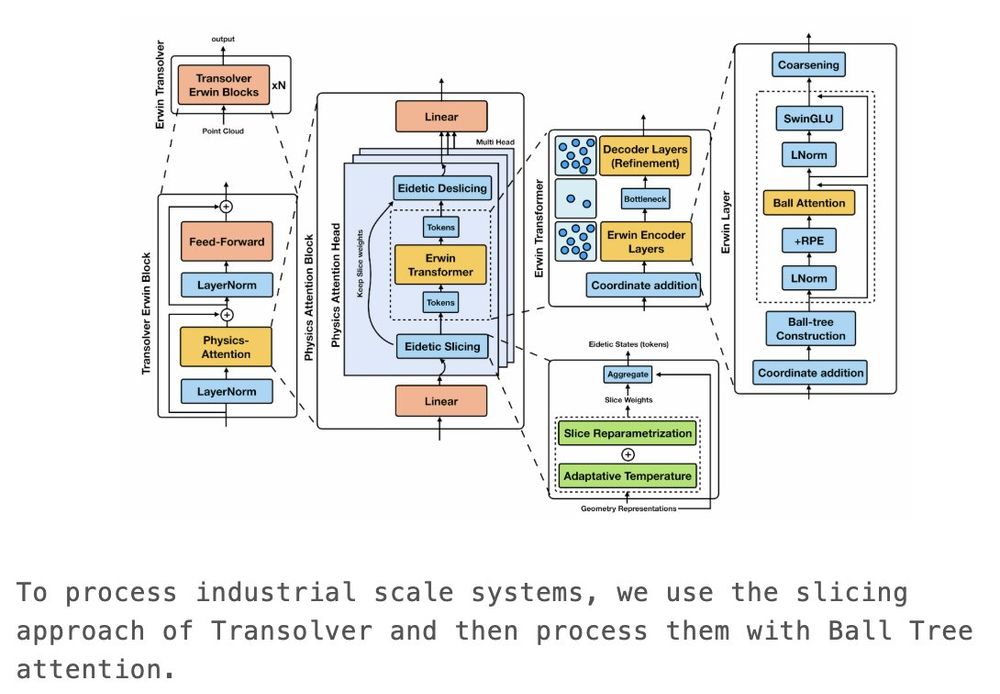

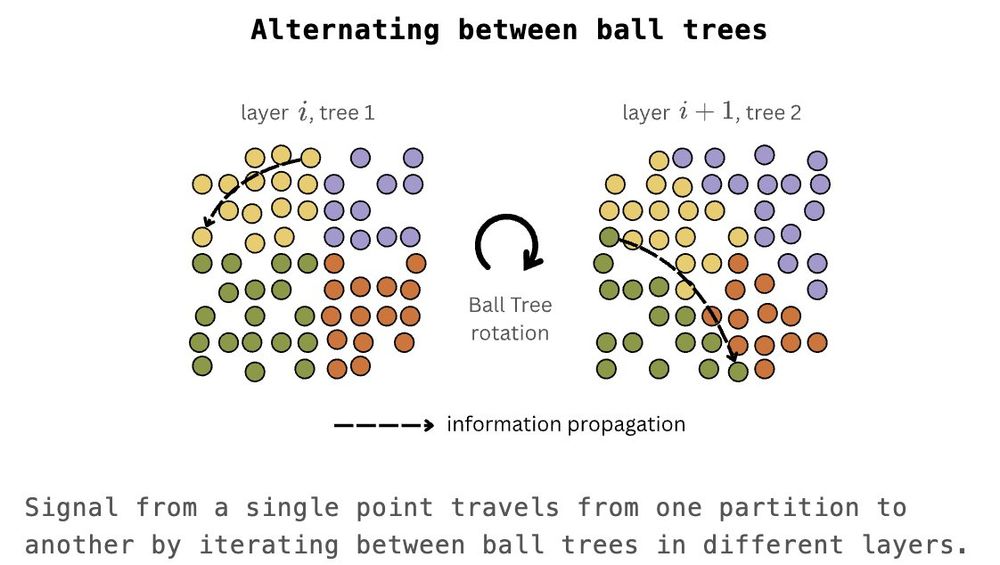

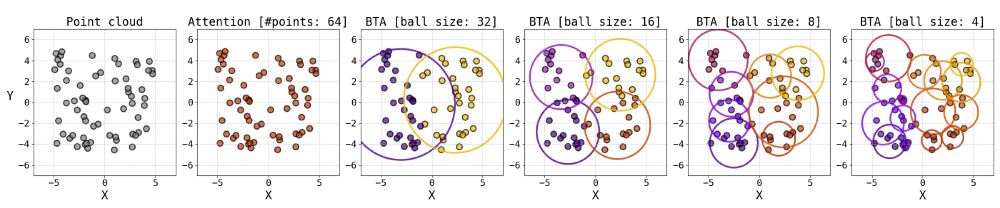

To overcome this, we adapt the idea from the Swin Transformer, but instead of shifting windows, we rotate trees.
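
One way to picture the rotation trick is the sketch below. It assumes a simplified tree (median split along the axis of greatest spread) and a second tree built on a rotated copy of the point cloud; `leaf_order` and `rotation_2d` are hypothetical helpers written for this illustration, not functions from the Erwin repository.

```python
import numpy as np

def leaf_order(points, idx, ball_size):
    """Return point indices in leaf order for a simplified ball tree.

    Hypothetical helper: recursively splits at the median along the
    axis of greatest spread until each ball holds `ball_size` points.
    """
    if len(idx) <= ball_size:
        return [int(i) for i in idx]
    spread = points[idx].max(axis=0) - points[idx].min(axis=0)
    axis = int(np.argmax(spread))
    order = idx[np.argsort(points[idx, axis], kind="stable")]
    half = len(order) // 2
    return (leaf_order(points, order[:half], ball_size)
            + leaf_order(points, order[half:], ball_size))

def rotation_2d(angle):
    """2D rotation matrix for `angle` in radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s], [s, c]])

rng = np.random.default_rng(0)
pts = rng.uniform(-1.0, 1.0, size=(16, 2))
idx = np.arange(len(pts))

# Tree on the original points and tree on the rotated points.
original = leaf_order(pts, idx, ball_size=4)
rotated = leaf_order(pts @ rotation_2d(np.pi / 4).T, idx, ball_size=4)

# Group indices into balls of 4; the two partitions generally differ,
# so points separated in one tree can share a ball in the other.
balls_a = [sorted(original[i:i + 4]) for i in range(0, 16, 4)]
balls_b = [sorted(rotated[i:i + 4]) for i in range(0, 16, 4)]
```

Both orderings cover the same 16 points; only the grouping into balls changes, which is the role shifted windows play in the Swin Transformer.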

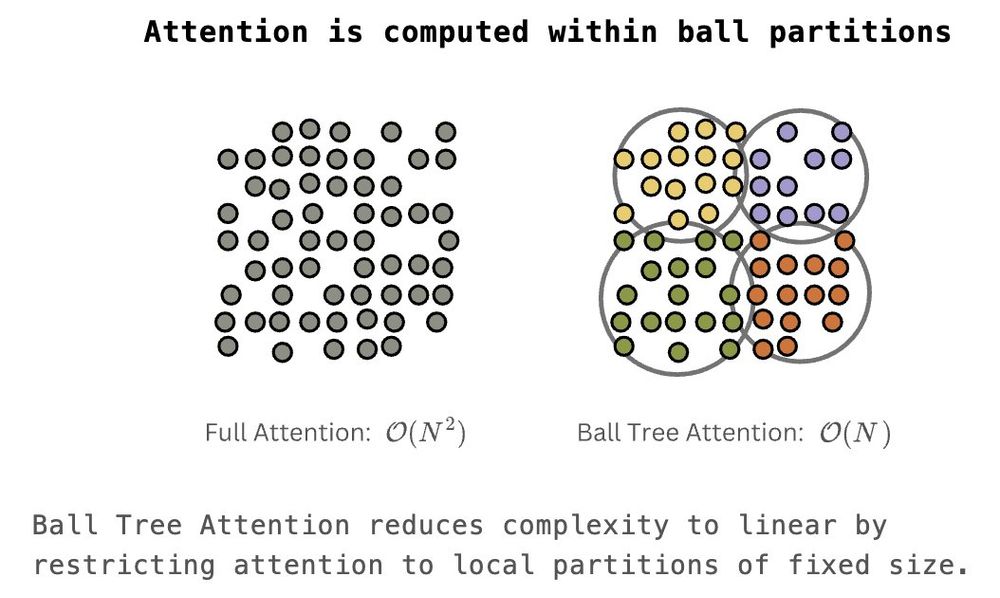

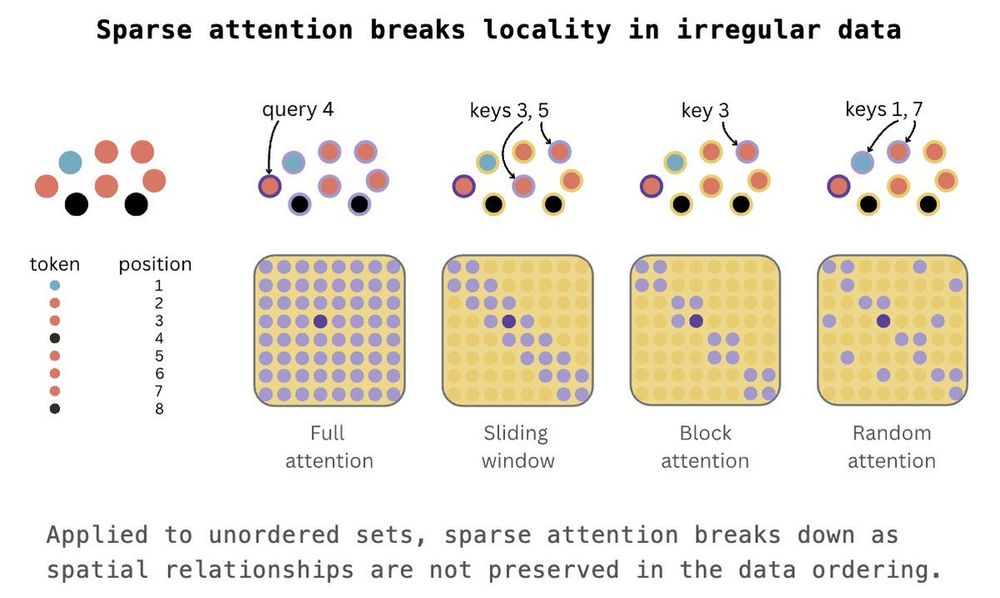

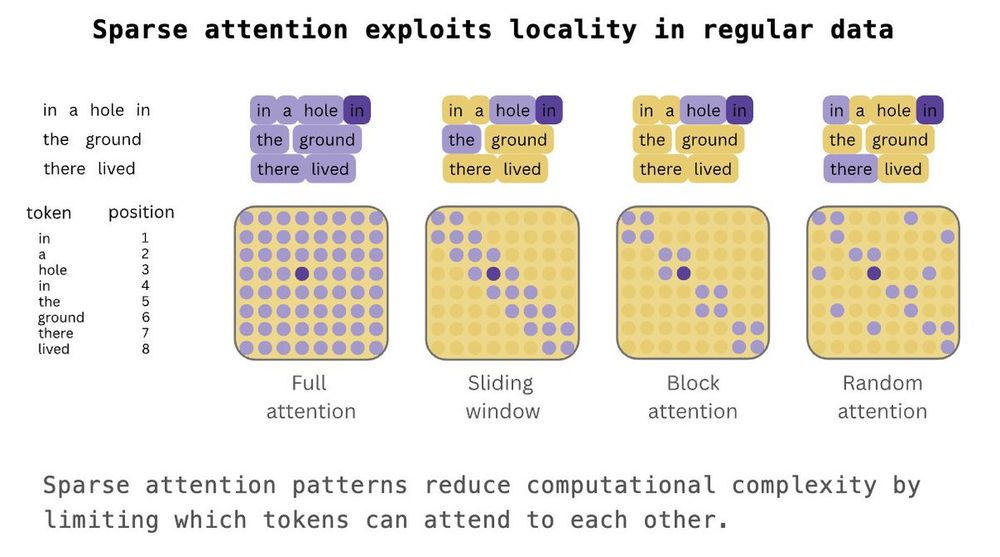

That allows us to restrict attention computation to local partitions, reducing the cost to linear.
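
A rough count of where the linear cost comes from, assuming N points, a fixed ball size m, and feature dimension d (symbols introduced here for illustration):

```latex
\underbrace{\mathcal{O}(N^2 d)}_{\text{full attention}}
\quad\longrightarrow\quad
\underbrace{\frac{N}{m}\cdot\mathcal{O}(m^2 d)}_{\text{attention within } N/m \text{ balls}}
= \mathcal{O}(N m d)
```

With m held constant, the cost grows linearly in N.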

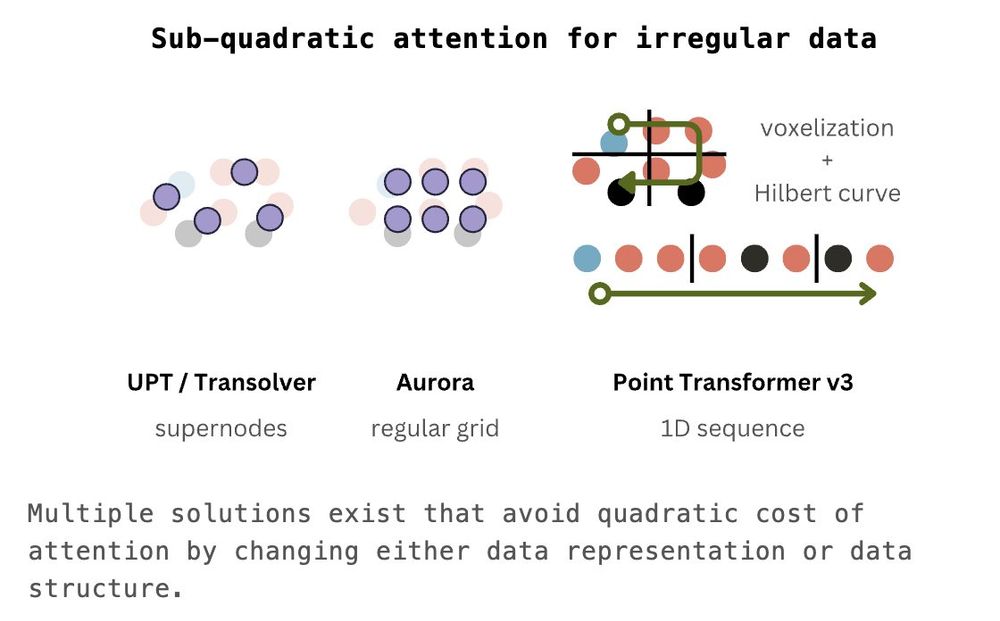

There are two ways: either change the data representation or the data structure.

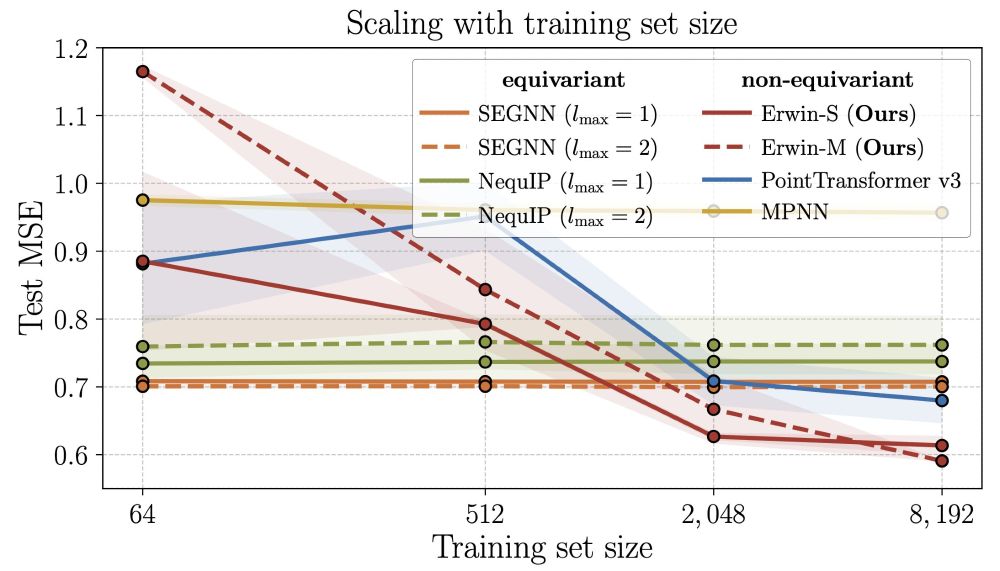

We believe models like Erwin will enable the application of deep learning to physical tasks that handle large particle systems and where runtime was previously a bottleneck.

for details, see the preprint: arxiv.org/abs/2502.17019

15/N

14/N

13/N

12/N

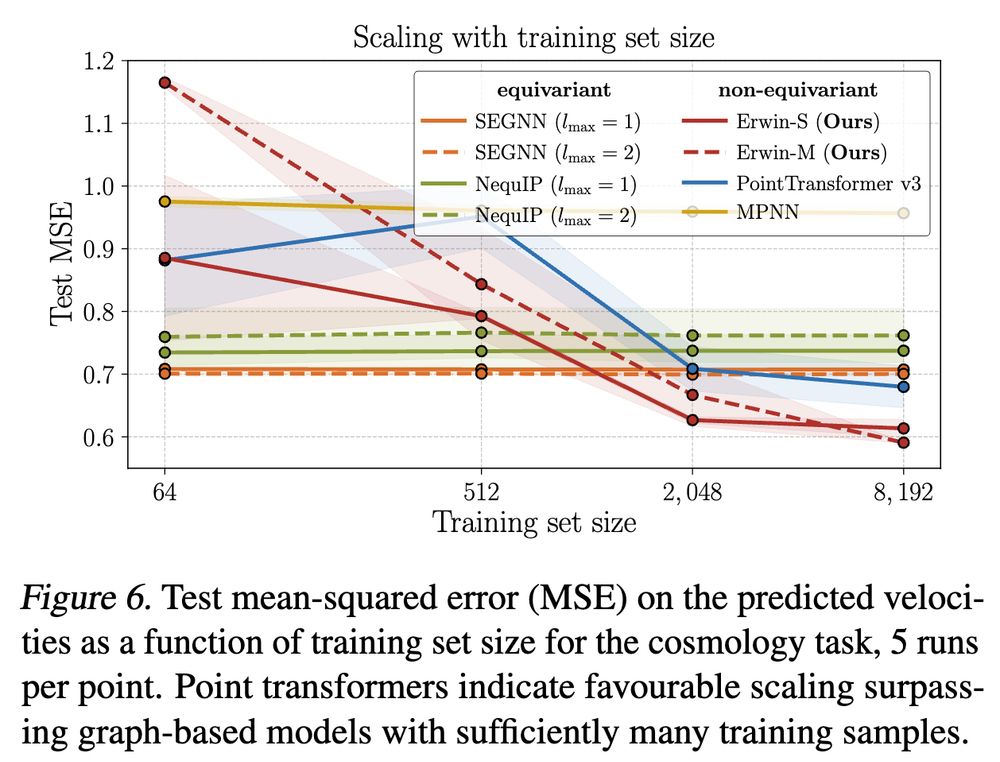

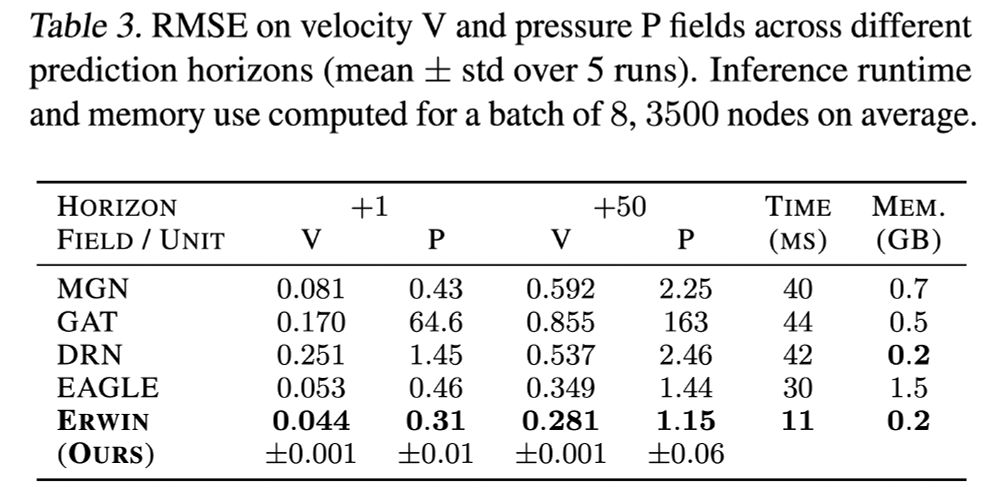

- cosmology (5k nodes per data point)

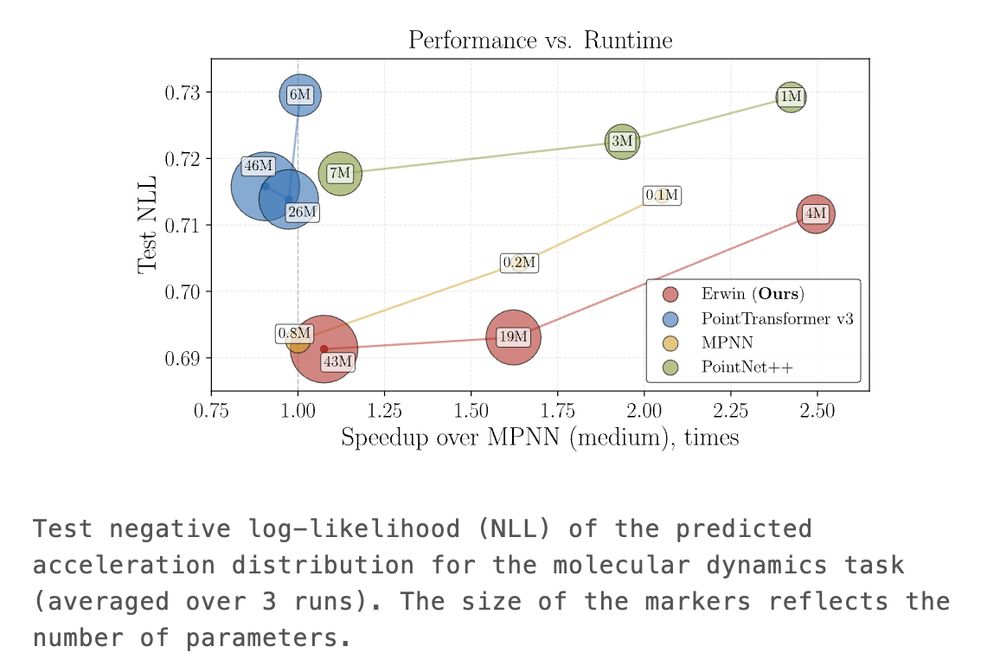

- molecular dynamics (~1k nodes per data point)

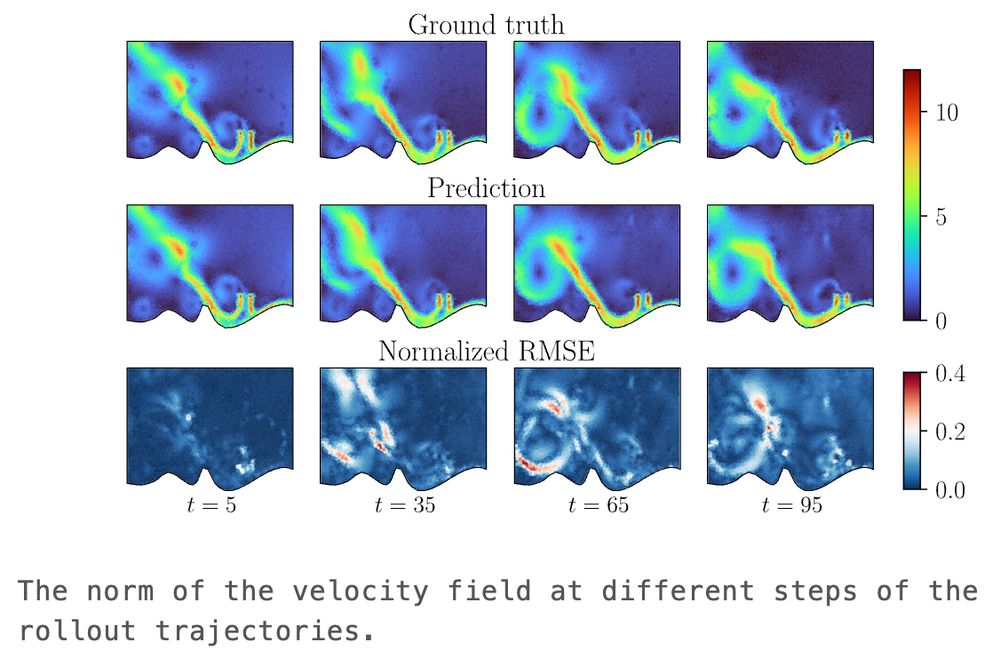

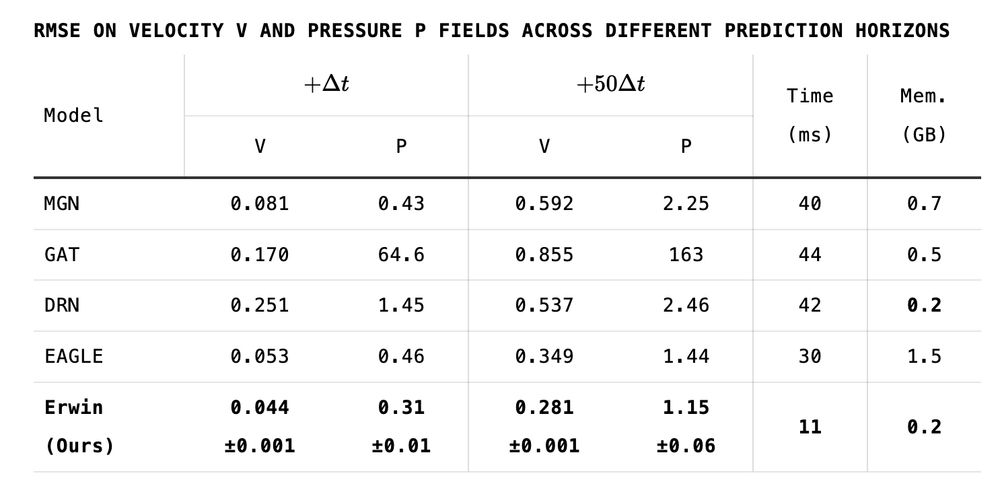

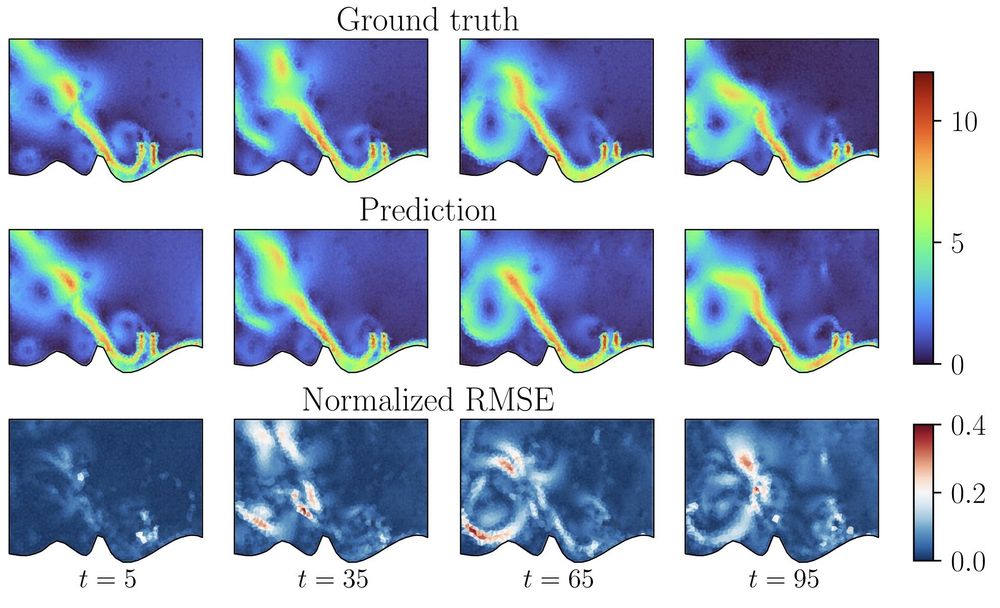

- turbulent fluid dynamics (~3.5k nodes per data point)

11/N

10/N

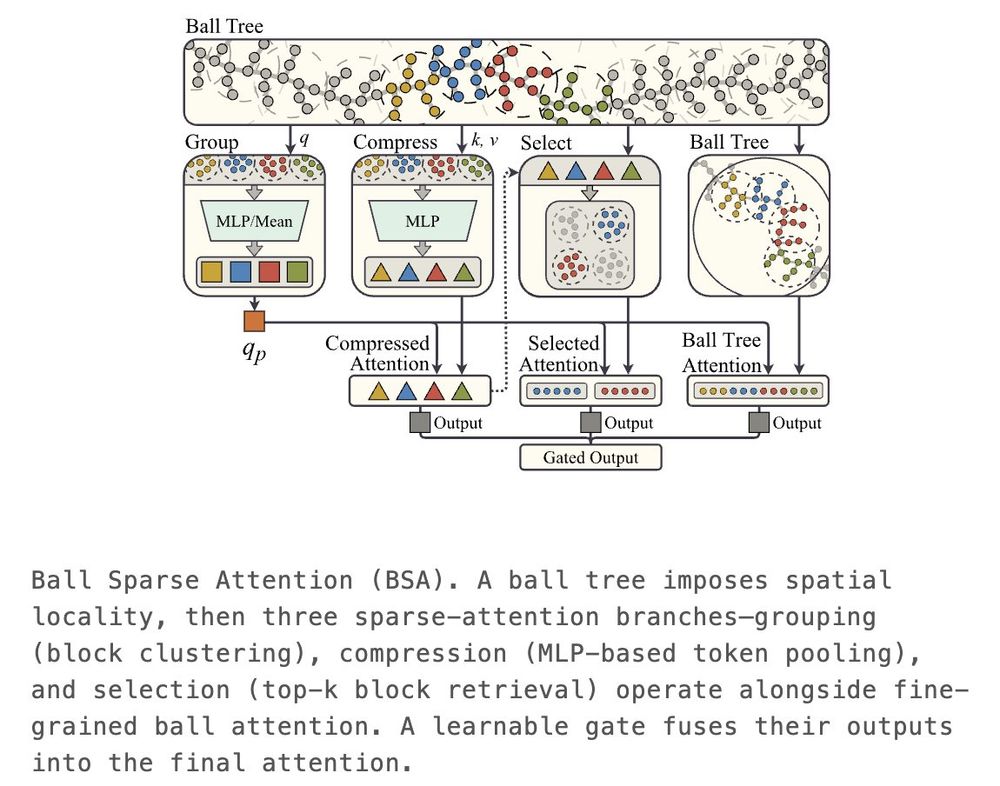

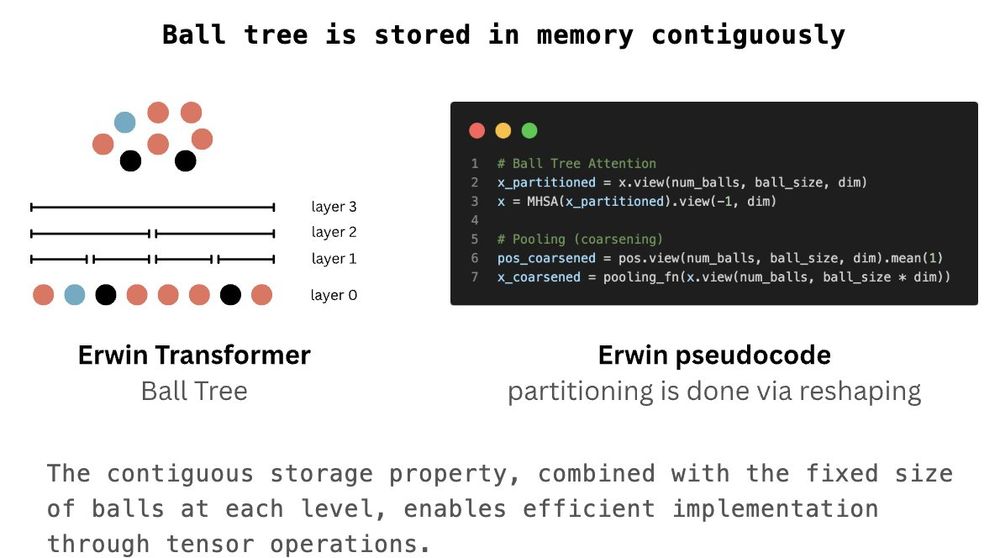

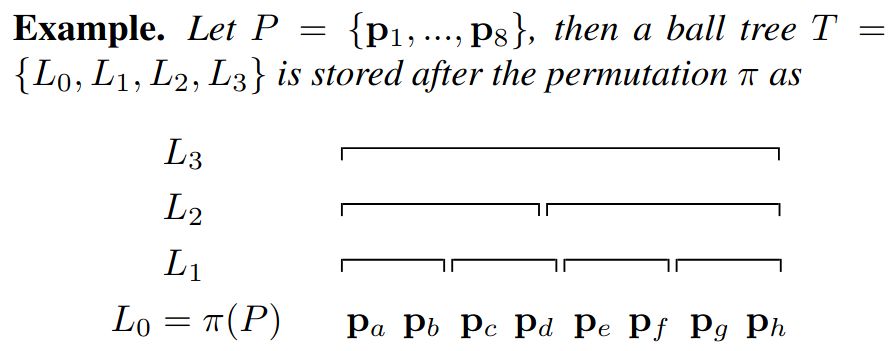

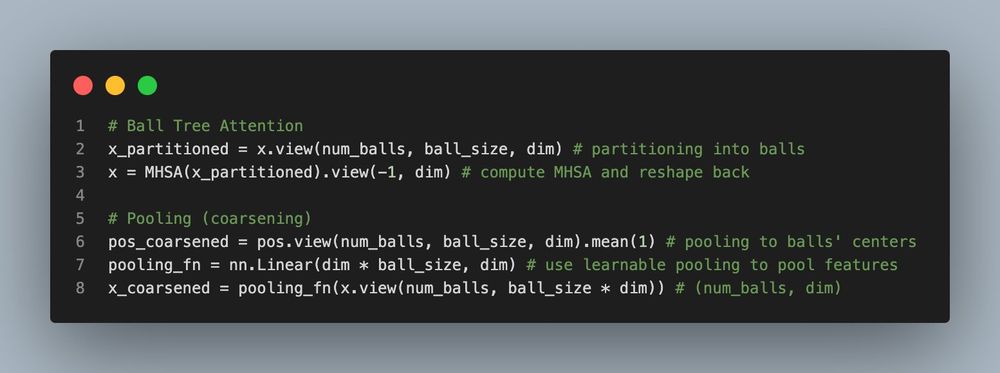

This property is critical and allows us to implement the key operations described above simply via .view() or .mean()!

9/N
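
A minimal sketch of what this looks like in practice, assuming the property in question is that points of the same ball sit next to each other in memory. NumPy's `reshape` stands in for PyTorch's `.view()` here, and the toy array is made up for illustration.

```python
import numpy as np

# Toy features for 8 points, assumed to be stored in ball-tree leaf order.
x = np.arange(16, dtype=float).reshape(8, 2)

# Picking a tree level is just a reshape (the analogue of torch's .view()).
level1 = x.reshape(4, 2, 2)   # 4 balls, 2 points each
level2 = x.reshape(2, 4, 2)   # 2 balls, 4 points each

# Coarsening: one feature vector per ball, obtained by averaging its points.
coarse = level1.mean(axis=1)  # shape (4, 2)
```

No gather or scatter is needed: because each ball is a contiguous slice, grouping and pooling reduce to a reshape and a mean over one axis.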

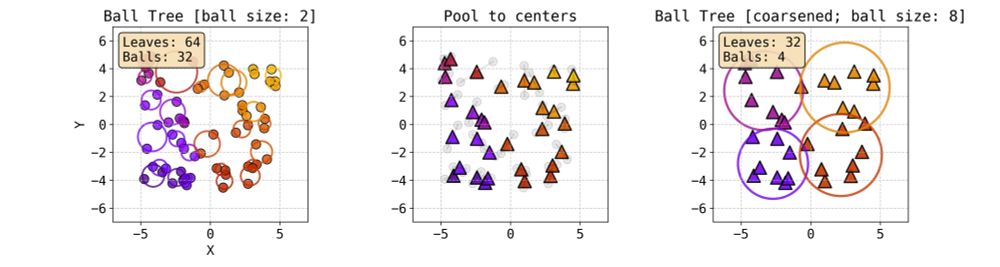

This allows us to learn multi-scale features and effectively increase the model's receptive field.

8/N

Once the tree is built, one can choose the level of the tree and compute attention (Ball Tree Attention, BTA) within the balls in parallel.

7/N
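
A minimal sketch of attention within balls, assuming the rows of `x` are already sorted in leaf order so that every consecutive chunk of `ball_size` rows is one ball. `ball_attention` is a hypothetical name, and identity query/key/value projections are used to keep it short; the actual model presumably uses learned projections and multiple heads.

```python
import numpy as np

def ball_attention(x, ball_size):
    """Softmax self-attention restricted to contiguous chunks of rows.

    Assumes rows of `x` are in ball-tree leaf order, so each chunk of
    `ball_size` consecutive rows forms one ball.
    """
    n, d = x.shape
    assert n % ball_size == 0, "pad the point cloud so every ball is full"
    balls = x.reshape(n // ball_size, ball_size, d)          # (B, m, d)
    # Identity q/k/v projections (assumption, for brevity).
    scores = balls @ balls.transpose(0, 2, 1) / np.sqrt(d)   # (B, m, m)
    scores -= scores.max(axis=-1, keepdims=True)             # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    out = weights @ balls                                     # (B, m, d)
    return out.reshape(n, d)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4))
y = ball_attention(x, ball_size=4)

# Changing points in the second ball leaves the first ball's output untouched.
x2 = x.copy()
x2[4:] += 1.0
y2 = ball_attention(x2, ball_size=4)
```

All balls are processed in one batched matrix product, which is what makes the per-ball attention run in parallel.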
