Researcher @msftresearch.bsky.social; working on autonomous agents in video games; PhD Univ of Edinburgh; Ex Huawei Noah’s Ark Lab, Dematic; Young researcher HLF 2022

What you get, why you should be interested and more, all below in a short 🧵👇

Errata for these corrections and the updated book PDF can already be found at www.marl-book.com

Learn more at the blog post: msft.it/6047QMkd3

and the arXiv preprint: arxiv.org/abs/2601.21718

📅 January 20th (Tomorrow!)

5pm GMT / 6pm CET / 9am PST

📍 Online (link below)

Everyone is welcome! Link via calendar invite and more details:

cohere.com/events/coher...

apply.careers.microsoft.com/careers/job/...

I'm also on the academic job market for assistant professorship positions in Europe - if you believe I could be a good fit, please reach out, I'd love to connect and chat over ☕!

@euripsconf.bsky.social too.)

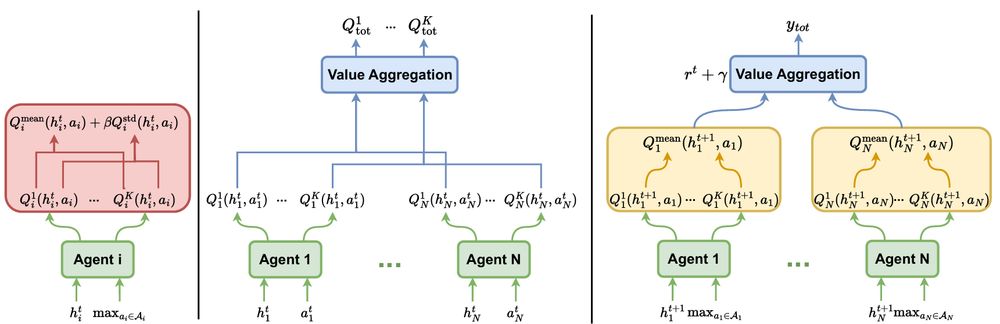

TL;DR: Coupling obs and agent IDs can hurt performance in MARL. Agent-conditioned hypernets cleanly decouple grads and enable specialisation.

📜: arxiv.org/abs/2412.04233

If you are around, hmu, happy to chat about Multi-Agent Systems (MARL, agentic systems), open-endedness, environments, or anything related! 🎉

Anyone is welcome to attend!

We organise regular meetings to discuss recent papers in Reinforcement Learning (RL), Multi-Agent RL and related areas (open-ended learning, LLM agents, robotics, etc).

Meetings take place online and are open to everyone 😊

Full posting to come in a bit.

Multiagent Learning Session

Where: Ambassador Ballroom 1 & 2

When: 14:00 - 14:13

I’ll also present the poster later at the Learn track of the poster session at 15:45 - 16:30

I'll be presenting the oral at the Multi-agent Learning 1 session on Wednesday (2:00 - 3:45pm), and the poster after 3:45pm!

Paper: arxiv.org/abs/2302.03439

If you'd like to chat, feel free to DM me!

sites.google.com/view/excd-20...

It was super cool to see the vision come to life as an intern and everything coming together now after re-joining the team 👏

@sharky6000.bsky.social has been an amazing mentor who gave me lots of motivation and advice for my journey forward!

Deadline in 7 days so apply soon 👇

Check out the Doctoral Consortium at #AAMAS 2025! Deadline: Jan 17th.

aamas2025.org/index.php/co...

More details: www.marl-book.com
