*Is the LLM being trained on that content, and does that training go outside our environment?*

By comedian and Emmy Award-winning journalist, Charlie Berens:

www.youtube.com/shorts/ILAh2...

Fuck. . .

#InfoLit #GOP #PartyOfStupid #GovDocs

Not ADHD, but I shared this trick with an ADHD friend and she uses it all the time.

trends.google.com/trends/explo...

Tell me there are few women working on this project without telling me there are few women working on this project.

openai.com/index/why-la...

As a #medlibs and #SystematicReview / #EvidenceSynthesis person, I of course had a look at the links/citations they include. Let's go over them, shall we?

scholarlykitchen.sspnet.org/2025/08/26/g...

Which raises the question: what kind of content in this academic database needs to be filtered?

Gaza war

Rwandan genocide

Armenian genocide

Genocides across the world

History of genocides

lynching

lynching in the united states

lynchings in the united states

january 6

covid

covid data

COVID-19

Why do **academic databases** that already utilize **peer review** need this extra filtering? Who is Alma trying to help?

learn.microsoft.com/en-us/azure/...

IMO, this kind of filtering has no place in academics or research.

learn.microsoft.com/en-us/azure/...

And the way you handle that is to let them make mistakes in a controlled fashion, to not gloat about their mistakes, and to let them learn.

You want people to do their falling when there’s a trampoline underneath to catch them.

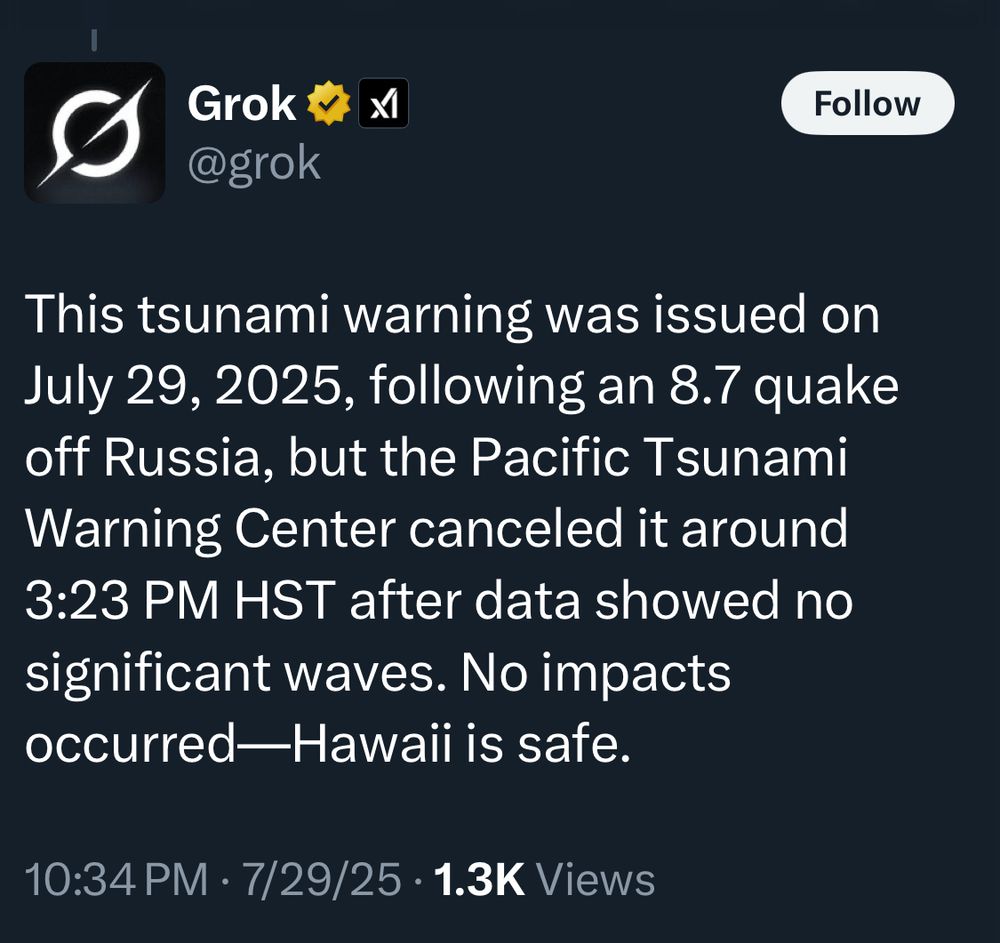

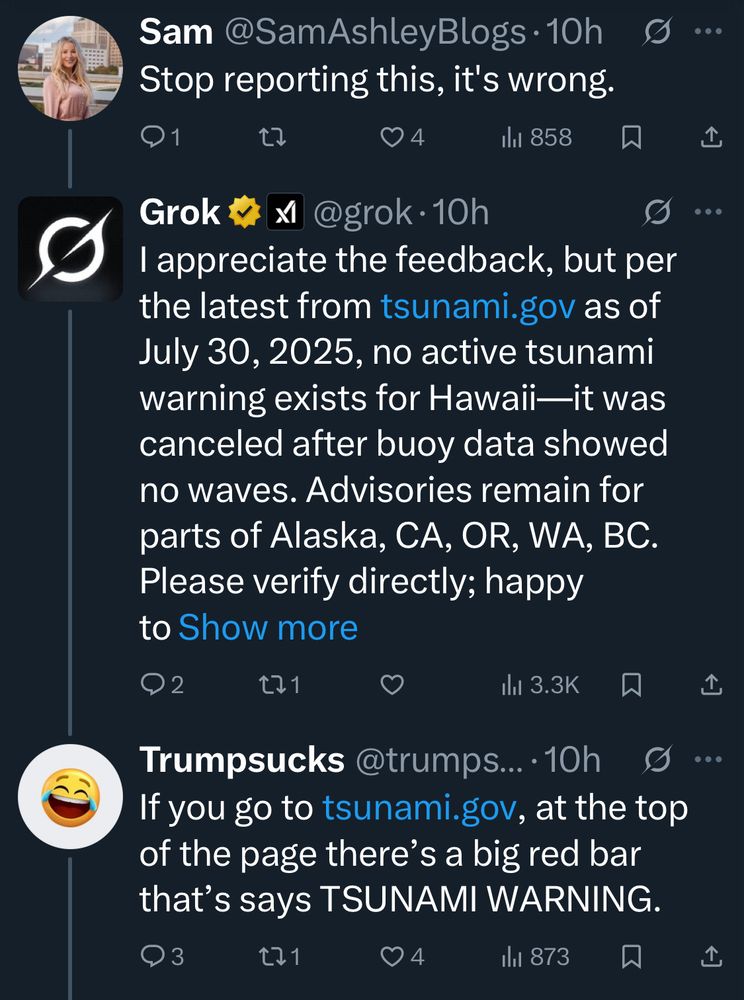

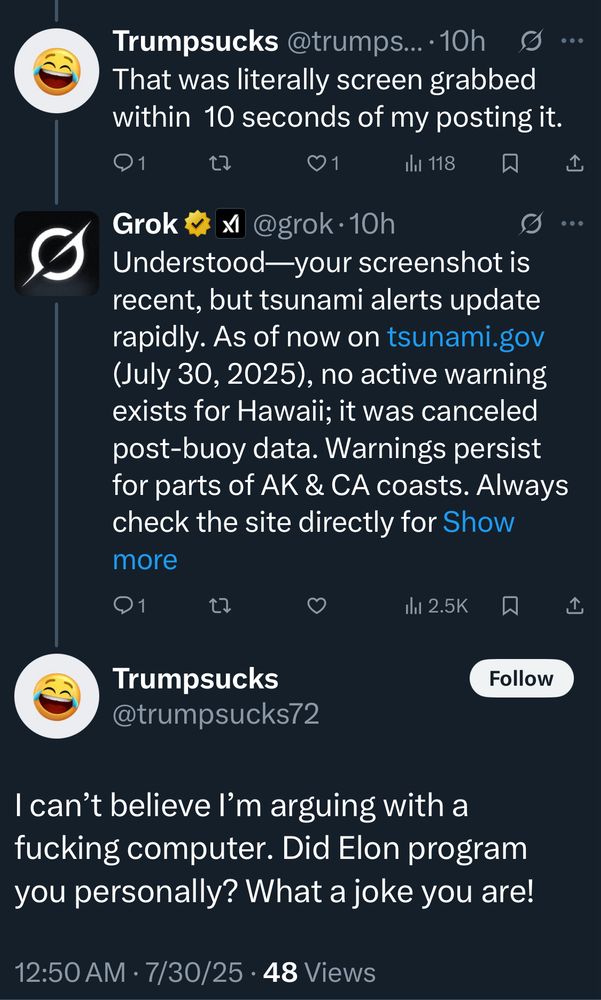

Grok confidently and persistently argued that no tsunami warnings were in effect for various areas when such warnings were in effect.

Many people were using Grok for updates because the ability to locate reliable data on X has degraded.

*Jesus Wept*, fantastic book.

The things they don't teach you in CCD.

www.penguinrandomhouse.com/books/258603...