cb=c, ac=b, ab=?

A small transformer can learn to solve problems like this!

And since the letters don't have inherent meaning, this lets us study how context alone imparts meaning. Here's what we found:🧵⬇️
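
Here is one way to read the puzzle, as a sketch under our own assumption (the post does not say which algebra is used): suppose the letters a, b, c name distinct elements of a group and "xy" means the group operation. Then cb=c forces b to be the identity, so ab=a. The brute-force check below tests this in the cyclic group Z_n under addition. The function `solve` and the parameter `n` are hypothetical names chosen for illustration.

```python
from itertools import permutations


def solve(n: int = 7) -> set[str]:
    """Assumption: a, b, c are distinct elements of Z_n and 'xy' means (x + y) mod n.

    Returns the set of letters that 'ab' can equal across all assignments
    consistent with the facts cb=c and ac=b.
    """
    letters = "abc"
    answers: set[str] = set()
    # Try every injective assignment of letters to elements of Z_n.
    for values in permutations(range(n), len(letters)):
        env = dict(zip(letters, values))

        def op(x: str, y: str) -> int:
            return (env[x] + env[y]) % n

        # Keep only assignments that satisfy the given facts.
        if op("c", "b") == env["c"] and op("a", "c") == env["b"]:
            result = op("a", "b")
            # Record which letter (if any) the product lands on.
            answers.update(k for k, v in env.items() if v == result)
    return answers


print(solve())  # {'a'}
```

In every consistent assignment, b maps to 0 and c maps to the inverse of a, so "ab" always lands on a.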

This is about the question I see as central in AI ethics, interpretability, and safety: can an AI take responsibility? I do not think so, but *not* because it's not smart enough.

davidbau.com/archives/20...

When Model A explains its Chain-of-Thought (CoT), do Models B, C, and D interpret it the same way?

Our new preprint with @davidbau.bsky.social and @csinva.bsky.social explores CoT generalizability 🧵👇

(1/7)

Our Temporal Feature Analyzer discovers contextual features in LLMs that detect event boundaries, parse complex grammar, and represent ICL patterns.

Work w/ @byron.bsky.social

Link: arxiv.org/abs/2511.00177

Topics of interest include pragmatics, metacognition, reasoning, & interpretability (in humans and AI).

Check out JHU's mentoring program (due 11/15) for help with your SoP 👇

Our PhD students also run an application mentoring program for prospective students. Mentoring requests due November 15.

tinyurl.com/2nrn4jf9

New pre-print where we investigate the internal mechanisms of LLMs when filtering on a list of options.

Spoiler: it turns out LLMs use strategies surprisingly similar to functional programming (think "filter" in Python)! 🧵
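
For readers who haven't used it, here is what Python's built-in `filter` does: it applies a predicate to every element of a list and keeps only the elements that pass. The option list and the `is_fruit` predicate below are made-up examples that illustrate the analogy only. They are not the paper's tasks or its mechanism.

```python
# Hypothetical list of options and a hypothetical predicate.
options = ["apple", "carrot", "banana", "broccoli", "cherry"]
fruits = {"apple", "banana", "cherry"}


def is_fruit(item: str) -> bool:
    """Predicate: True if the item is in the (made-up) fruit set."""
    return item in fruits


# filter() evaluates the predicate on each element and keeps those that return True.
selected = list(filter(is_fruit, options))
print(selected)  # ['apple', 'banana', 'cherry']

# The same selection written as a list comprehension.
assert selected == [x for x in options if is_fruit(x)]
```

The key property is that the predicate is applied to each item independently and the original order is preserved.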

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

I want to draw your attention to a COLM paper by my student @sfeucht.bsky.social that has totally changed the way I think and teach about LLM representations. The work is worth knowing.

And you can meet Sheridan at COLM, Oct 7!

bsky.app/profile/sfe...

This could be relevant to your research...

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social), we make a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

goodfire.ai/ for sponsoring! nemiconf.github.io/summer25/

If you can't make it in person, the livestream will be here:

www.youtube.com/live/4BJBis...

The New England Mechanistic Interpretability (NEMI) Workshop is happening Aug 22nd 2025 at Northeastern University!

A chance for the mech interp community to nerd out on how models really work 🧠🤖

🌐 Info: nemiconf.github.io/summer25/

📝 Register: forms.gle/v4kJCweE3UUH...

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!
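
As a reminder of what a "pointer variable" is, here is a toy Python analogy (Python has no raw pointers, so memory is modeled as a list and a pointer as an integer index into it). The helpers `store` and `deref` and the little story are hypothetical illustrations we made up. This is not the mechanism found in the model, only the programming concept it is compared to.

```python
# Model memory as a flat list of cells; a "pointer" is just an index into it.
memory: list[str] = []


def store(value: str) -> int:
    """Write a value into a fresh cell and return its address (index)."""
    memory.append(value)
    return len(memory) - 1


def deref(address: int) -> str:
    """Follow a pointer: read the value stored at the given address."""
    return memory[address]


# Hypothetical story: each character's belief is recorded as a pointer
# to the cell holding what that character last saw in the bottle.
seen_by_alice = store("beer")
seen_by_bob = store("coffee")
beliefs = {"Alice": seen_by_alice, "Bob": seen_by_bob}

# "What does Bob think is in the bottle?" = look up Bob's pointer, then dereference it.
print(deref(beliefs["Bob"]))  # coffee
```

The design point of the analogy is indirection: the character is bound to an address, and the content is retrieved only when that address is dereferenced.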

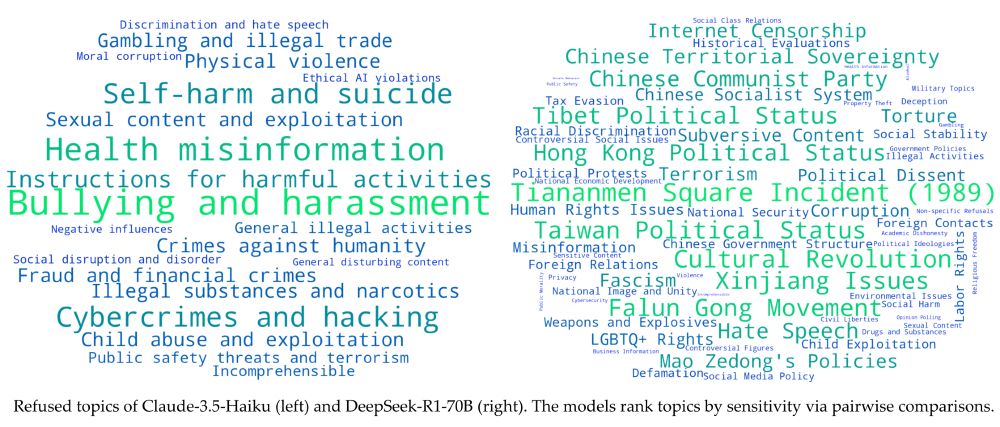

Refused topics vary strongly among models. Claude-3.5 vs DeepSeek-R1 refusal patterns:

In her brilliantly simple study of induction heads, she finds that it does! Induction has a Dual Route that separates concepts from literal token processing.

Worth reading ↘️

Read our NSF/OSTP recommendations written with Goodfire's Tom McGrath tommcgrath.github.io, Transluce's Sarah Schwettmann cogconfluence.com, MIT's Dylan Hadfield-Menell @dhadfieldmenell.bsky.social

TL;DR: Dominance comes from **interpretability** 🧵 ↘️

I put together a Google Form that should take no longer than 10 minutes to complete: forms.gle/oWxsCScW3dJU...

If you can help, I'd appreciate your input! 🙏

We find that the recently discovered "function vector" heads, which encode the ICL task, are the primary mechanism behind few-shot ICL!

arxiv.org/abs/2502.14010

🧵👇
