At: CIMCYC, Granada. Formerly: VU Amsterdam & Donders Institute.

https://www.richter-neuroscience.com/

🚨 New paper 🚨 out now in @cp-iscience.bsky.social with @paulapena.bsky.social and @mruz.bsky.social

www.cell.com/iscience/ful...

Summary 🧵 below 👇

Perspective article w/ Cem Uran, @martinavinck.bsky.social & @predictivebrain.bsky.social now in @imagingneurosci.bsky.social, highlighting recent work on the nature of surprise reflected in visual prediction errors.

🧵👇

direct.mit.edu/jocn/article...

(Still uncorrected proofs, but the corrected version should be posted soon. Open access is forthcoming; for now, the PDF is at brainandexperience.org/pdf/10.1162-...)

Poster B23 · Wednesday at 1:00 pm · de Brug.

Reach out if you are interested in any of the above, I'll be at CCN next week!

www.ucl.ac.uk/work-at-ucl/...

Join the FLARE project @cimcyc.bsky.social to study sudden perceptual learning using fMRI, RSA, and DNNs.

🧠 2 years, fully funded, flexible start

More info 👉 gonzalezgarcia.github.io/postdoc/

DMs or emails welcome! Please share!

Work with @zejinlu.bsky.social @sushrutthorat.bsky.social and Radek Cichy

arxiv.org/abs/2507.03168

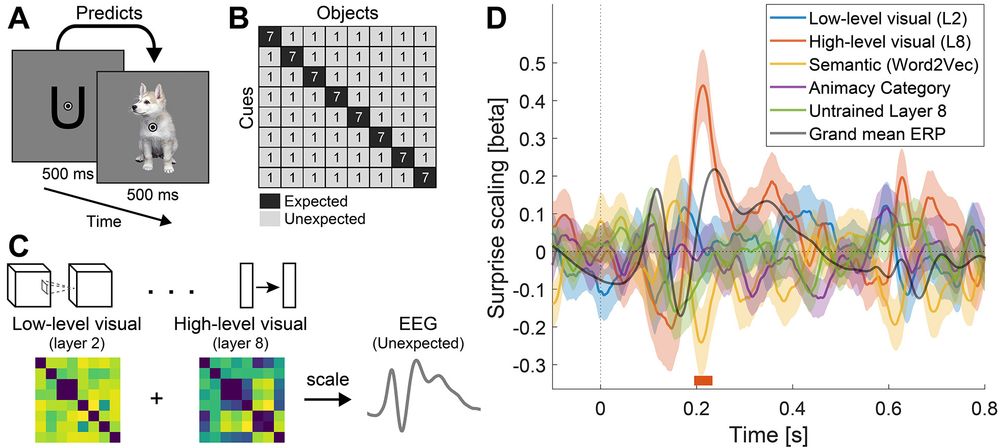

EEG shows that high-level visual surprise emerges rapidly and modulates neural responses ~200 ms after stimulus onset.

New preprint with @paulapena.bsky.social and @mruz.bsky.social available here: doi.org/10.1101/2025...

Summary 🧵 below

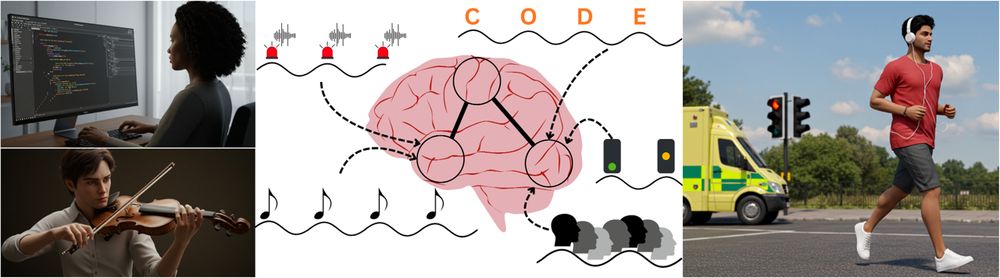

Are sensory sampling rhythms fixed by intrinsically-determined processes, or do they couple to external structure? Here we highlight the incompatibility between these accounts and propose a resolution [1/6]

www.jobs.ac.uk/job/DNJ330/p...

Together with @predictivebrain.bsky.social and Sonja Kotz we are looking for a PhD candidate to join a great project on the biological foundations of perceptual decisions vacancies.maastrichtuniversity.nl/job/Maastric...

Happy to answer any questions you may have!

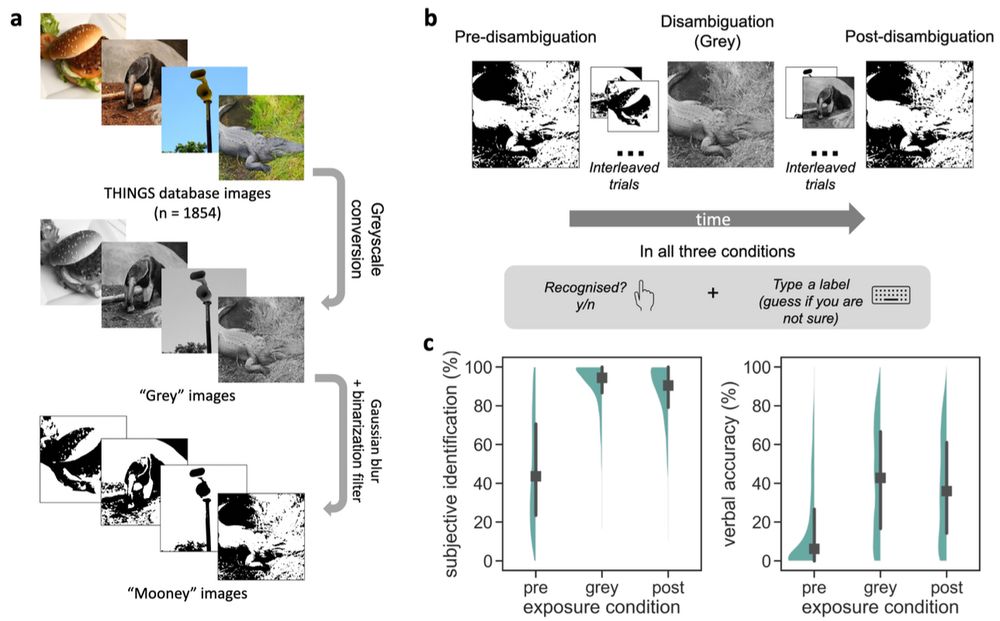

We created ~2k images and collected ~100k responses to study visual ambiguity.

www.biorxiv.org/content/10.1...

We add to the (lack of) evidence for expectation effects in carefully-controlled predictive cueing designs.

doi.org/10.1111/psyp...

Led by Carla den Ouden and Máire Kashyap, collaborating with Morgan Kikkawa

PhD: www.mpi.nl/career-educa...

Postdoc: www.mpi.nl/career-educa...

(please share widely)

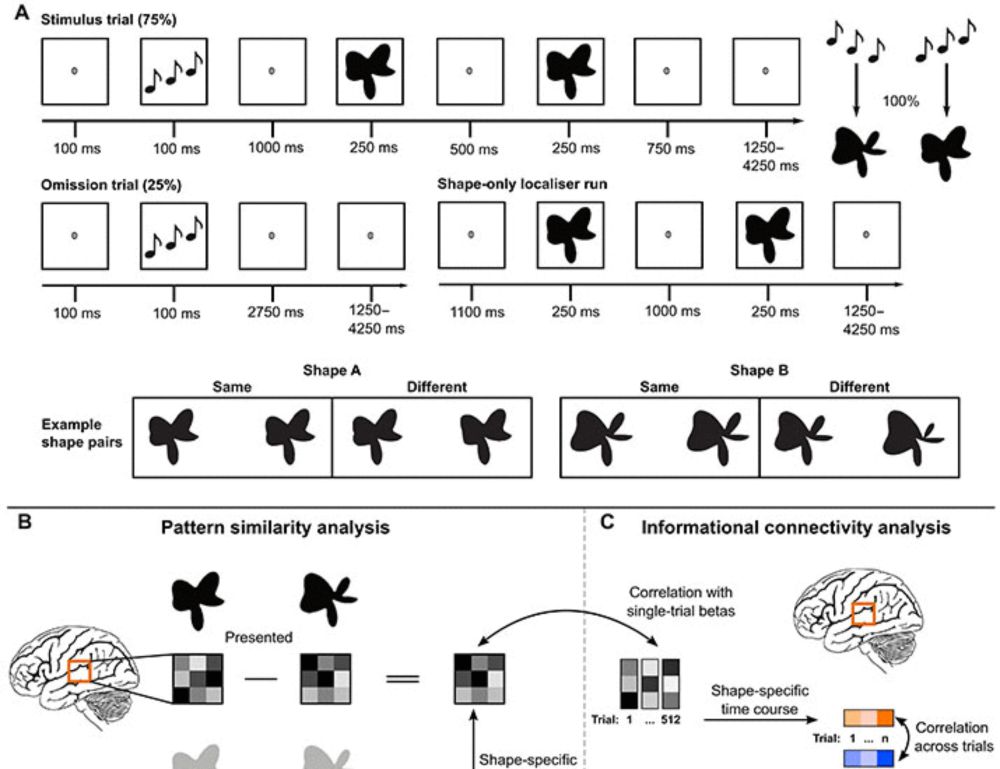

We've found that visual cortex, even when just viewing natural scenes, predicts *higher-level* visual features.

This aligns with developments in ML, but challenges some assumptions about early sensory cortex.

www.biorxiv.org/content/10.1...

www.nature.com/articles/s41...

In collaboration with Dirk van Moorselaar and @jthee.bsky.social we investigated how the brain suppresses distracting stimuli before they appear.

Paper here: doi.org/10.7554/eLif...

Summary 🧵 below
