David Richter

@davidrichter.bsky.social

Cognitive Neuroscientist | Predictive Processing & Perception Researcher.

At: CIMCYC, Granada. Formerly: VU Amsterdam & Donders Institute.

https://www.richter-neuroscience.com/

If you’re into predictive processing and curious about the ‘what & when of visual surprise’, come see me at #CCN2025 in Amsterdam!

Poster B23 · Wednesday at 1:00 pm · de Brug.

August 11, 2025 at 3:46 PM

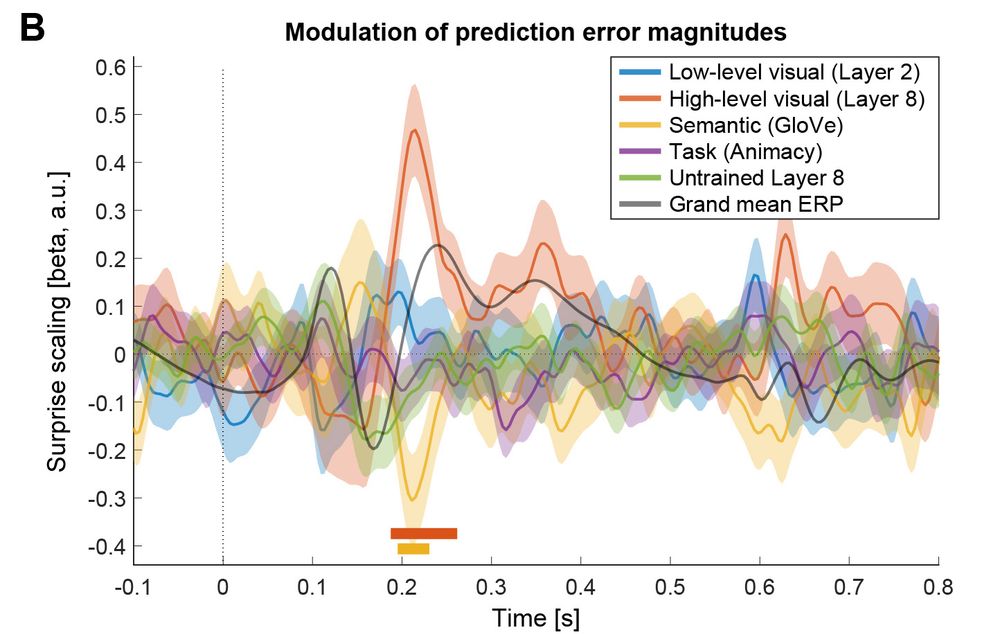

Next, we turned to the key questions: when does surprise drive visually evoked responses, and what kind of surprise drives them? Results showed that neural responses around 200 ms after stimulus onset, over parieto-occipital electrodes, were selectively enhanced by high-level visual surprise.

June 26, 2025 at 10:22 AM

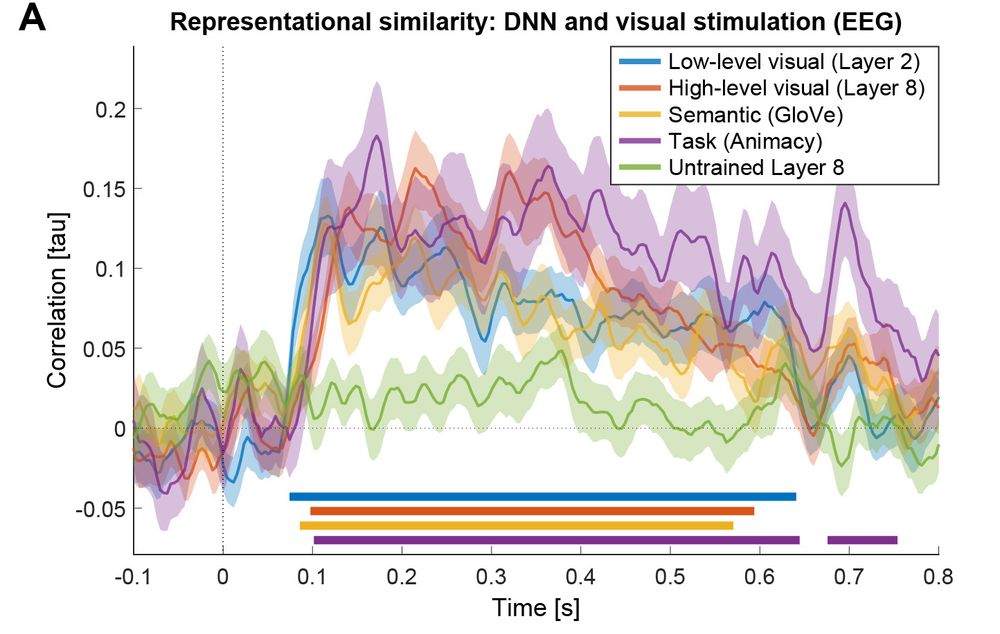
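A minimal sketch of how a window-based effect like this can be quantified: average the EEG amplitude over a subset of parieto-occipital channels within a window around 200 ms, then compare conditions. The sampling rate, the channel labels, the 150 to 250 ms window and the Gaussian toy waveforms are all hypothetical assumptions for illustration, not the analysis used in the study.

```python
import math

# Hypothetical recording parameters: 100 Hz sampling, epoch from -0.1 s to 0.5 s.
SFREQ = 100.0
TIMES = [round(-0.1 + i / SFREQ, 3) for i in range(61)]
# Hypothetical parieto-occipital channel subset (10-20 system labels).
PICKS = ["PO3", "PO4", "PO7", "PO8", "POz"]


def window_mean(epoch, picks, times, t_start, t_end):
    """Mean amplitude over the `picks` channels for samples with t_start <= t <= t_end.

    epoch: dict mapping channel name -> list of samples aligned with `times`.
    """
    idx = [i for i, t in enumerate(times) if t_start <= t <= t_end]
    if not idx:
        raise ValueError("no samples fall inside the requested window")
    values = [epoch[ch][i] for ch in picks for i in idx]
    return sum(values) / len(values)


def toy_epoch(gain, picks=PICKS, times=TIMES):
    """Toy evoked response: a Gaussian bump peaking at 0.2 s, scaled by `gain`."""
    wave = [gain * math.exp(-((t - 0.2) ** 2) / (2 * 0.03 ** 2)) for t in times]
    return {ch: list(wave) for ch in picks}


# Compare a toy "low surprise" and "high surprise" response in a 150-250 ms window.
low = window_mean(toy_epoch(1.0), PICKS, TIMES, 0.15, 0.25)
high = window_mean(toy_epoch(2.0), PICKS, TIMES, 0.15, 0.25)
print(f"window mean, low surprise: {low:.3f}, high surprise: {high:.3f}")
```

In real data, the same window mean would be computed per trial or per condition average and then related to the surprise scores.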

First, as a sanity check, we used representational similarity analysis (RSA) to show that the DNN and the other models of interest (a semantic word-based model and a task model) explained the EEG response well, irrespective of surprise.

June 26, 2025 at 10:22 AM

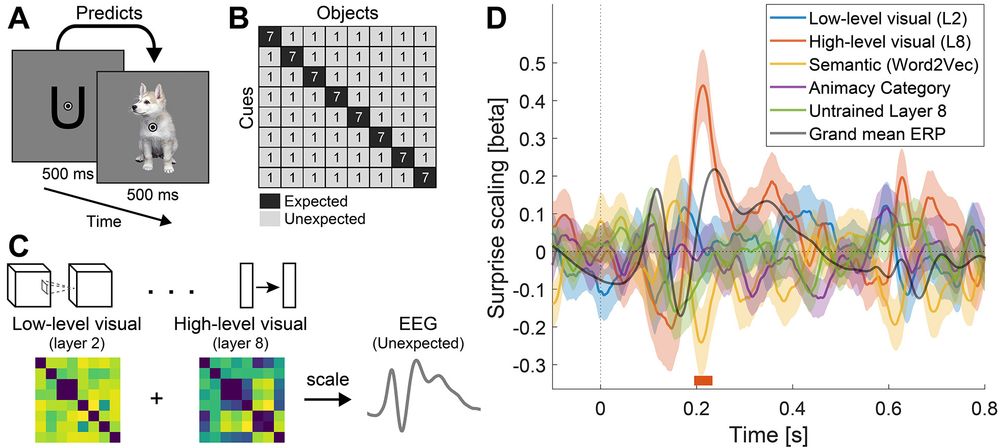

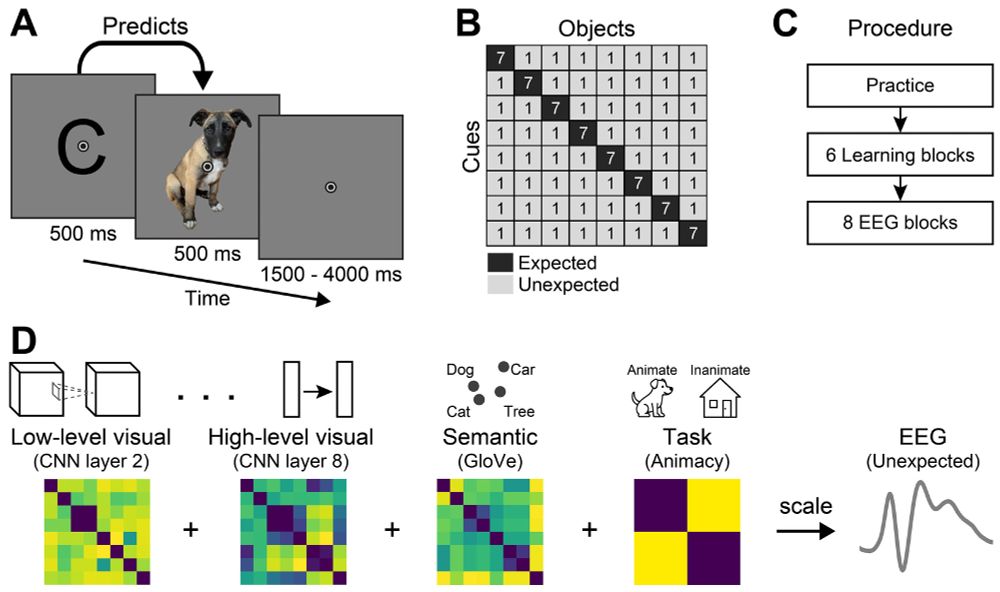

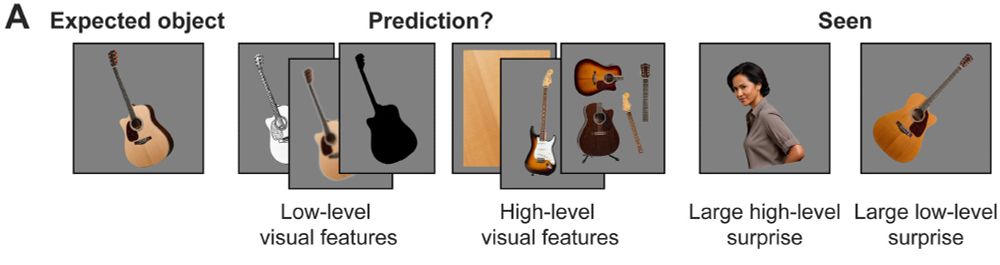
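RSA compares the representational geometry of two systems: build a representational dissimilarity matrix (RDM) for the model and one for the EEG data, then correlate their off-diagonal entries. The dependency-free sketch below assumes one minus Pearson r as the dissimilarity and Spearman's rho for comparing RDMs, which are common choices but not details given in the posts; the toy patterns are invented.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length lists (neither may be constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(v):
    """1-based ranks; tied values share their average rank."""
    order = sorted(range(len(v)), key=lambda i: v[i])
    r = [0.0] * len(v)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and v[order[j + 1]] == v[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of ranks."""
    return pearson(ranks(x), ranks(y))


def rdm_upper(patterns):
    """Upper triangle of an RDM, using 1 - Pearson r between condition patterns."""
    n = len(patterns)
    return [1 - pearson(patterns[i], patterns[j])
            for i in range(n) for j in range(i + 1, n)]


def rsa(model_patterns, brain_patterns):
    """Rank correlation between model RDM and brain RDM (same condition order)."""
    return spearman(rdm_upper(model_patterns), rdm_upper(brain_patterns))


# Toy data: 6 conditions x 20 features; the "EEG" patterns are an affine copy
# of the model patterns, so both RDMs share the same geometry.
rng = random.Random(0)
model = [[rng.gauss(0, 1) for _ in range(20)] for _ in range(6)]
eeg = [[2 * a + 1 for a in p] for p in model]
print(f"model-EEG RSA (Spearman rho): {rsa(model, eeg):.3f}")
```

Rank-based comparison is used here because it only assumes a monotonic relation between model and neural dissimilarities, not a linear one.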

We investigated these questions using EEG and a visual DNN. Participants viewed object images that were probabilistically predicted by preceding cues. We then quantified trial-by-trial surprise at low levels (early DNN layers) and high levels (late DNN layers) of visual feature abstraction.

June 26, 2025 at 10:22 AM

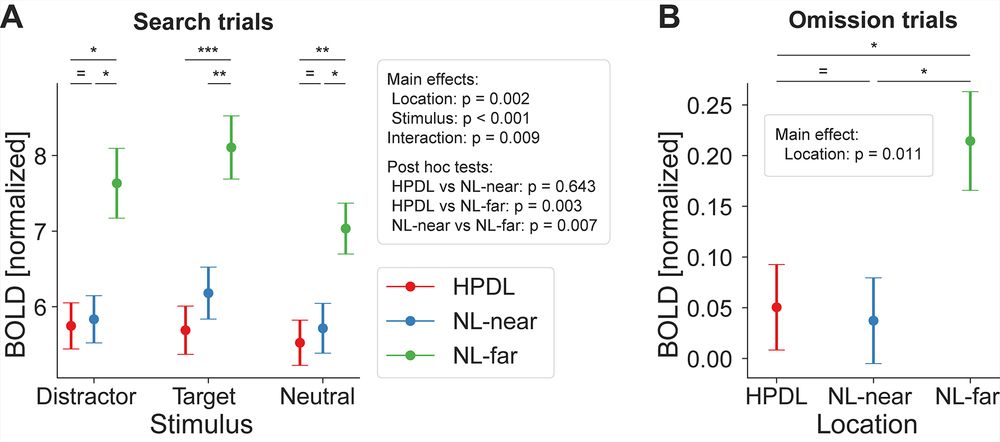

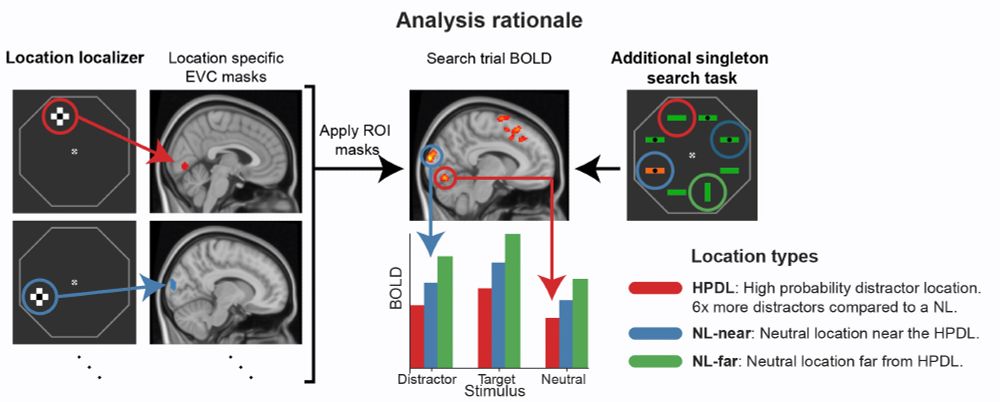
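One plausible way to turn DNN activations into layer-wise surprise is to compare, on each trial, the activations for the expected image with those for the image actually shown, separately per layer. The sketch assumes activations have already been extracted (short toy vectors stand in for them) and uses one minus Pearson r as the distance; the metric, the layer names `layer2`/`layer8` and the toy values are illustrative assumptions, not the study's definition.

```python
import math


def correlation_distance(a, b):
    """1 - Pearson r between two activation vectors (neither may be constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    na = math.sqrt(sum((x - ma) ** 2 for x in a))
    nb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 1 - cov / (na * nb)


def layer_surprise(expected_acts, seen_acts):
    """Per-layer surprise for one trial.

    expected_acts / seen_acts: dict mapping a (hypothetical) layer name to the
    flattened activation vector for the expected and the presented image.
    """
    return {layer: correlation_distance(expected_acts[layer], seen_acts[layer])
            for layer in expected_acts}


# Toy trial: the seen image resembles the expected one in the early layer
# but not in the late layer, i.e. low low-level and high high-level surprise.
expected = {"layer2": [1, 2, 3, 4], "layer8": [1, 0, 1, 0]}
seen = {"layer2": [1, 2, 3, 5], "layer8": [0, 1, 0, 1]}
surprise = layer_surprise(expected, seen)
print(surprise)
```

Computing one score per layer keeps low- and high-level surprise as separate regressors for the neural analysis.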

Neural responses in EVC were proactively suppressed at the HPDL, even when no search display was presented. This suggests that distractor suppression can be driven by anticipation alone [panel B below].

March 21, 2025 at 3:01 PM

We used fMRI to investigate how the brain may implement distractor suppression in early visual cortex (EVC). Participants performed a visual search task in which a salient color distractor appeared more often at one location: the "High Probability Distractor Location" (HPDL).

March 21, 2025 at 3:01 PM

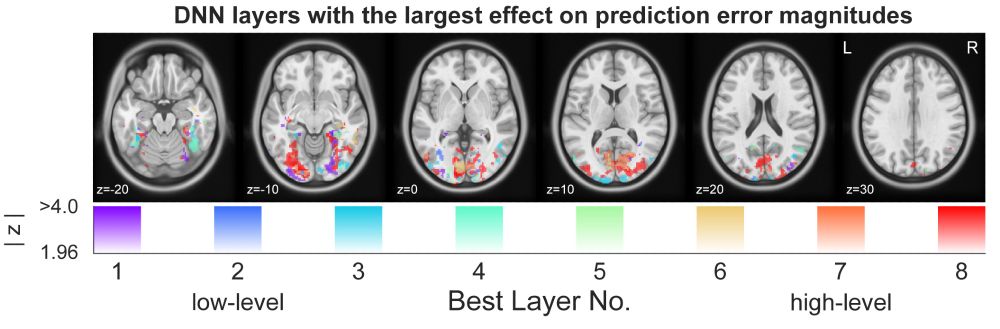
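The statistical regularity that defines the HPDL can be illustrated with a toy trial generator: on distractor-present trials the salient distractor lands at one designated location with elevated probability and is otherwise spread evenly over the remaining locations. The four locations, the 65% probability and the function name `make_trials` are hypothetical choices, not the parameters of the study.

```python
import random


def make_trials(n_trials, n_locations=4, hpdl=0, p_hpdl=0.65, seed=0):
    """Distractor locations for n_trials distractor-present trials (hypothetical design).

    With probability p_hpdl the distractor appears at `hpdl`; otherwise it is
    drawn uniformly from the other locations.
    """
    rng = random.Random(seed)
    others = [loc for loc in range(n_locations) if loc != hpdl]
    trials = []
    for _ in range(n_trials):
        if rng.random() < p_hpdl:
            trials.append(hpdl)
        else:
            trials.append(rng.choice(others))
    return trials


trials = make_trials(1000)
share = trials.count(0) / len(trials)
print(f"share of distractors at the HPDL: {share:.2f}")
```

Because each non-HPDL location receives only about a third of the remaining 35% of trials in this toy design, the HPDL is by far the most likely distractor position, which is the regularity participants can learn to anticipate.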

Looking at the DNN layers with the most pronounced effect on prediction error magnitudes revealed a striking difference from the localizer run shown before: late layers (i.e., high-level visual surprise; orange-red colors) dominated the scaling of prediction errors.

November 13, 2024 at 3:28 PM

In contrast, prediction errors in both early and higher visual cortex, including in V1, primarily scaled with high-level (layer 8), but not low-level (layer 2) visual surprise.

November 13, 2024 at 3:28 PM

Results:

First, we validated our feature model using data from a localizer run without stimulus predictability. Here we found the expected pattern: the DNN model mirrored the gradient from low- to high-level visual features in visual cortex.

November 13, 2024 at 3:28 PM

Using a visual DNN, we quantified the low- and high-level visual surprise elicited by unexpected images, given the expectation on each trial. We then examined whether, and where, sensory responses to unexpected inputs increased as a function of low- vs. high-level visual surprise.

November 13, 2024 at 3:28 PM
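Asking whether responses scale with low- versus high-level surprise amounts to a regression with both surprise scores as predictors, fitted per voxel or region. Below is a minimal ordinary-least-squares sketch with two predictors, solved in closed form on centered variables; the function name, the noise-free toy data and the coefficients are assumptions for illustration, not the study's pipeline.

```python
import random


def fit_two_predictors(y, x1, x2):
    """OLS fit of y = b0 + b1*x1 + b2*x2; returns (b0, b1, b2)."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    c1 = [a - m1 for a in x1]
    c2 = [a - m2 for a in x2]
    cy = [a - my for a in y]
    # Entries of the 2x2 normal equations for the centered predictors.
    s11 = sum(a * a for a in c1)
    s22 = sum(a * a for a in c2)
    s12 = sum(a * b for a, b in zip(c1, c2))
    s1y = sum(a * b for a, b in zip(c1, cy))
    s2y = sum(a * b for a, b in zip(c2, cy))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("predictors are (nearly) collinear")
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


# Toy data: responses of one region that depend mostly on high-level surprise.
rng = random.Random(1)
low = [rng.random() for _ in range(200)]
high = [rng.random() for _ in range(200)]
resp = [0.5 + 0.1 * a + 2.0 * b for a, b in zip(low, high)]
b0, b_low, b_high = fit_two_predictors(resp, low, high)
print(f"low-level beta: {b_low:.2f}, high-level beta: {b_high:.2f}")
```

Fitting both predictors jointly means each beta reflects the unique contribution of one surprise level, which matters when low- and high-level surprise are correlated across trials.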

Let's consider an example: Within V1, which one of the two unexpected but seen images on the right ("Seen") would evoke a larger surprise response given the expectation to see the guitar on the left ("Expected object")?

November 13, 2024 at 3:28 PM