https://pehlevan.seas.harvard.edu/

pehlevan.seas.harvard.edu/resources-0

#RL #hippocampus

Hippocampal neurons that initially encode reward shift their tuning over the course of days to precede or predict reward.

Full text here:

rdcu.be/eY5nh

(Plausibly, yes 😊)

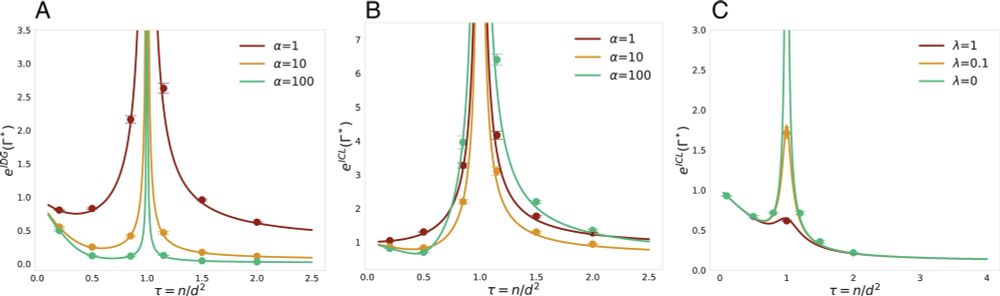

@david-g-clark.bsky.social and Ashok Litwin-Kumar! Timely too, as “low-D manifold” has been trending again. (If you read thru the end, we escape Flatland and return to the glorious high-D world we deserve.) www.biorxiv.org/content/10.6...

Discover their innovative research shaping the future of AI 👉 bit.ly/47Do4R3

#AI

We propose a model that separates the estimation of odor concentration from the estimation of odor presence, and map it onto olfactory bulb circuits.

Led by @chenjiang01.bsky.social and @mattyizhenghe.bsky.social; joint work with @jzv.bsky.social, and with @neurovenki.bsky.social and @cpehlevan.bsky.social

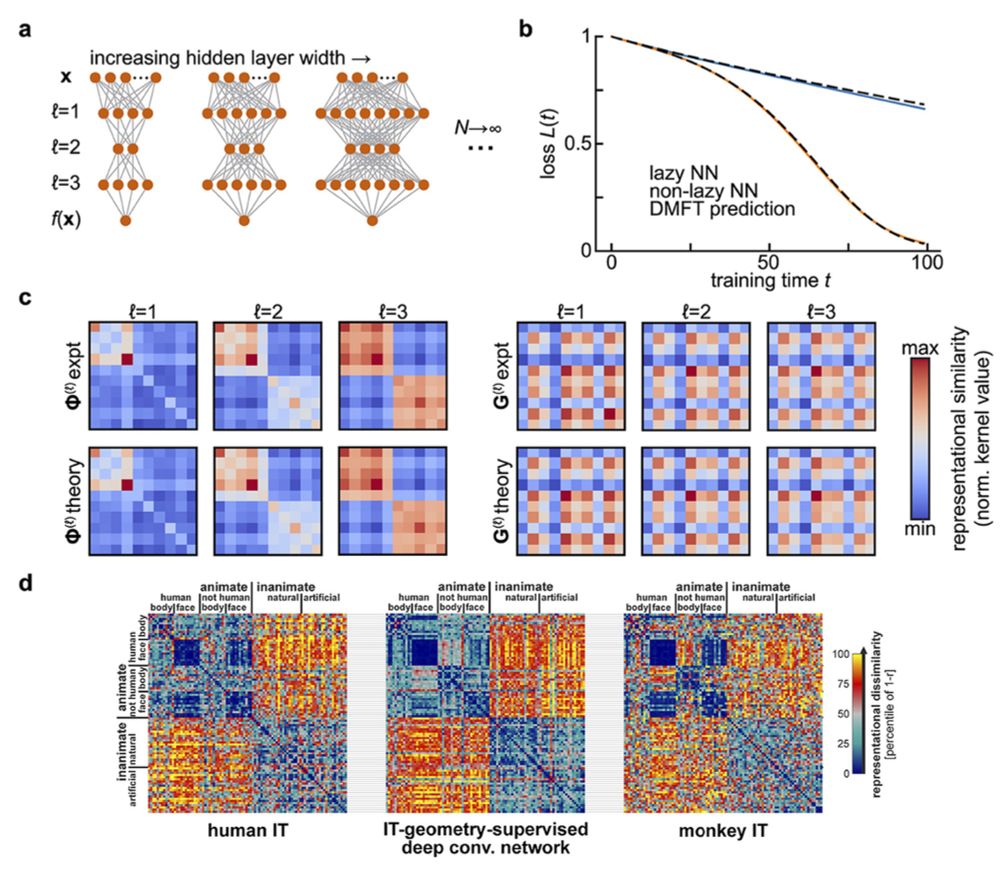

For neuro fans: conn. structure can be invisible in single neurons but shape pop. activity

For low-rank RNN fans: a theory of rank=O(N)

For physics fans: fluctuations around DMFT saddle⇒dimension of activity

POD Postdoc: oden.utexas.edu/programs-and...

CSEM PhD: oden.utexas.edu/academics/pr...

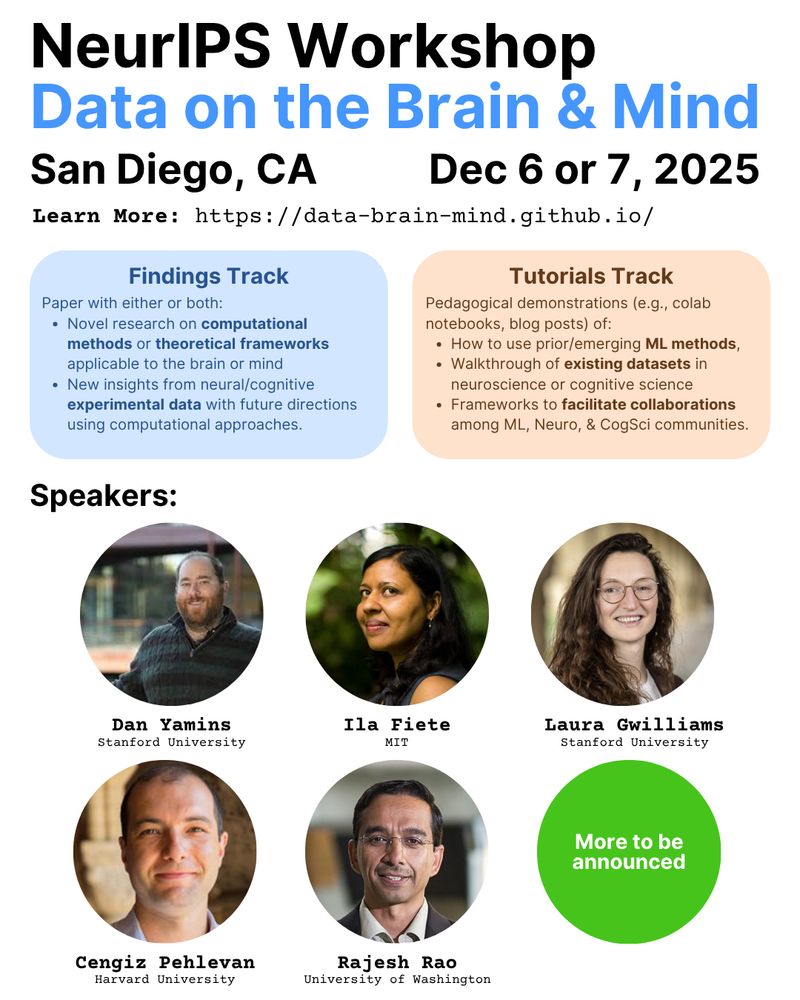

🔍 Submit to our Findings or Tutorials track on OpenReview.

Findings track submission: openreview.net/group?id=Neu...

Tutorial track submission: openreview.net/group?id=Neu...

More info: data-brain-mind.github.io

www.youtube.com/watch?v=KVRF...

kempnerinstitute.harvard.edu/kempner-inst...

@simonsfoundation.org Simons Collaboration on the Physics of Learning and Neural Computation!

www.simonsfoundation.org/2025/08/18/s...

#AI #neuroscience #NeuroAI #physics #ANNs

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

Check it out now at PNAS:

doi.org/10.1073/pnas...

(2/2)

bit.ly/4lPK15p

#AI @pnas.org

(1/2)
